当前位置:网站首页>cuMemcpyHtoDAsync failed: invalid argument

cuMemcpyHtoDAsync failed: invalid argument

2022-07-21 19:43:00 【AI vision netqi】

tenorrt Operation error reporting :

pycuda._driver.LogicError: cuMemcpyHtoDAsync failed: invalid argument

-------------------------------------------------------------------

PyCUDA ERROR: The context stack was not empty upon module cleanup.

-------------------------------------------------------------------

A context was still active when the context stack was being

cleaned up. At this point in our execution, CUDA may already

have been deinitialized, so there is no way we can finish

cleanly. The program will be aborted now.

Use Context.pop() to avoid this problem.

-------------------------------------------------------------------

tensorrt Inference code :

import sys

sys.path.append('../../tools/')

import cv2

import time

import numpy as np

import tensorrt as trt

import pycuda.driver as cuda

import pycuda.autoinit

print('trt version',trt.__version__)

TRT_LOGGER = trt.Logger()

class HostDeviceMem(object):

def __init__(self, host_mem, device_mem):

self.host = host_mem

self.device = device_mem

def __str__(self):

return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

def __repr__(self):

return self.__str__()

# Allocates all buffers required for an engine, i.e. host/device inputs/outputs.

def allocate_buffers(engine, context):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for i, binding in enumerate(engine):

size = trt.volume(context.get_binding_shape(i))

dtype = trt.nptype(engine.get_binding_dtype(binding))

# Allocate host and device buffers

host_mem = cuda.pagelocked_empty(size, dtype)

device_mem = cuda.mem_alloc(host_mem.nbytes)

# Append the device buffer to device bindings.

bindings.append(int(device_mem))

# Append to the appropriate list.

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

# This function is generalized for multiple inputs/outputs.

# inputs and outputs are expected to be lists of HostDeviceMem objects.

def do_inference(context, bindings, inputs, outputs, stream, batch_size):

# Transfer input data to the GPU.

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

# Run inference.

context.execute_async(batch_size=batch_size, bindings=bindings, stream_handle=stream.handle)

# Transfer predictions back from the GPU.

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

# Synchronize the stream

stream.synchronize()

# Return only the host outputs.

return [out.host for out in outputs]

# use numpy rewrite softmax

def softmax(out_np, dim):

s_value = np.exp(out_np) / np.sum(np.exp(out_np), axis=dim, keepdims=True)

return s_value

class FaceClassify(object):

def __init__(self, configs):

self.engine_path = configs.face_classify_engine

self.input_size = configs.classify_input_size

self.image_size = self.input_size

self.MEAN = configs.classify_mean

self.STD = configs.classify_std

self.engine = self.get_engine()

self.context = self.engine.create_execution_context()

def get_engine(self):

# If a serialized engine exists, use it instead of building an engine.

f = open(self.engine_path, 'rb')

runtime = trt.Runtime(TRT_LOGGER)

return runtime.deserialize_cuda_engine(f.read())

def detect(self, image_src, cuda_ctx = pycuda.autoinit.context):

cuda_ctx.push()

start_all=time.time()

IN_IMAGE_H, IN_IMAGE_W = self.image_size

# Input

img_in = cv2.cvtColor(image_src, cv2.COLOR_BGR2RGB)

img_in = cv2.resize(img_in, (IN_IMAGE_W, IN_IMAGE_H), interpolation=cv2.INTER_LINEAR)

img_in = np.transpose(img_in, (2, 0, 1)).astype(np.float32) # (3, 240, 240)

img_in /= 255.0 # normalization [0, 1]

# mean = (0.485, 0.456, 0.406)

mean0 = np.expand_dims(self.MEAN[0] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

mean1 = np.expand_dims(self.MEAN[1] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

mean2 = np.expand_dims(self.MEAN[2] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

mean = np.concatenate((mean0, mean1, mean2), axis=0)

# std = (0.229, 0.224, 0.225)

std0 = np.expand_dims(self.STD[0] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

std1 = np.expand_dims(self.STD[1] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

std2 = np.expand_dims(self.STD[2] * np.ones((IN_IMAGE_H, IN_IMAGE_W)), axis=0)

std = np.concatenate((std0, std1, std2), axis=0)

img_in = ((img_in - mean) / std).astype(np.float32)

img_in = np.expand_dims(img_in, axis=0) # (1, 3, 240, 240)

img_in = np.ascontiguousarray(img_in)

start=time.time()

# Dynamic input

self.context.active_optimization_profile = 0

origin_inputshape = self.context.get_binding_shape(0)

origin_inputshape[0], origin_inputshape[1], origin_inputshape[2], origin_inputshape[3] = img_in.shape

self.context.set_binding_shape(0, (origin_inputshape)) # If each input size Dissimilarity , According to the inputs Of size Change the corresponding context Medium size

inputs, outputs, bindings, stream = allocate_buffers(self.engine, self.context)

# Do inference

inputs[0].host = img_in

trt_outputs = do_inference(self.context, bindings=bindings, inputs=inputs, outputs=outputs,

stream=stream, batch_size=1)

print('infer time',time.time()-start,trt_outputs)

if cuda_ctx:

cuda_ctx.pop()

labels_sm = softmax(trt_outputs, dim=0)

labels_max = np.argmax(labels_sm, axis=1)

print('time_a',time.time()-start_all)

return labels_max.item() ,trt_outputs

if __name__ == '__main__':

class Params:

pass

opt = Params()

opt.face_classify_engine = 'efficientnet_b1.trt'

opt.classify_input_size = [128 ,128]

opt.classify_mean = [0.5 ,0.5 ,0.5]

opt.classify_std = [0.5 ,0.5 ,0.5]

face =FaceClassify(opt)

image_src =cv2.imread(r'987.jpg')

# image_src =cv2.imread(r'F:\project\detect\yolov5\tensorrt\yolo-tensorrt_dll_trt8\sln\x64\Release\16_1.jpg')

for i in range(10):

labels_max ,trt_outputs =face.detect(image_src)

print(trt_outputs)

print(labels_max)

reason , The data is not formatted as float32 type ,

resolvent :

img_in = ((img_in - mean) / std).astype(np.float32)The answers of netizens can also be referred to :

My personal feeling is that the input data does not match the address applied for by the model data entry :

Enter picture data shape incorrect , Maybe not (N, C, H, W)

Input image data dtype incorrect This is the case with me , Because I am pytorch turn ONNX Re turn tensorRT Of , stay ONNX The input in is not supported float64 by , Only single precision data formats are supported , And I am tensorRT The input in does not turn like this , Input float64 Pictures of the , So wrong reporting , Change it to float32 It's stable .

Link to the original text :https://blog.csdn.net/GungnirsPledge/article/details/108428651

The article also has solutions .

边栏推荐

- 342 NLP open source datasets in Chinese and English are shared

- Résoudre l'erreur signalée: uncaught typeerror: impossible de lire les propriétés sous - jacentes (lire « installer»)

- Bram for FPGA logic resource evaluation (taking Xilinx as an example)

- Click the model mode box and the other areas will not disappear except the mode box

- PHP如何实现在用户注册时查找数据库中是否有相同用户名

- MySQL (2)

- onmousemove=alert(1) style='width

- DOM event flow (event capture and event bubbling)

- Luogu p3313 [sdoi2014] Travel Solution

- Audience analysis and uninstall analysis have been comprehensively upgraded, and HMS core analysis service version 6.6.0 has been updated

猜你喜欢

22张图带你深入剖析前缀、中缀、后缀表达式以及表达式求值

学习笔记-Explain的介绍

Ripple test of DC DC switching power supply

FTXUI基础笔记(checkbox复选框组件)

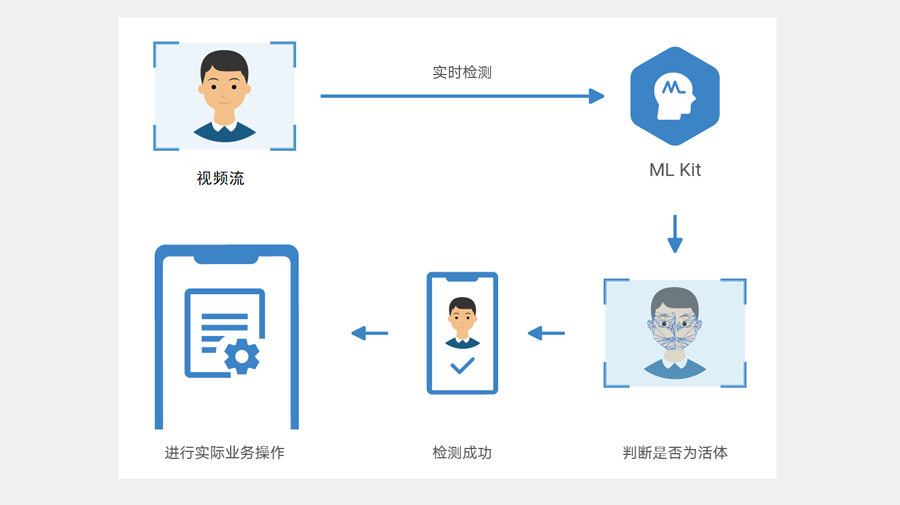

动作活体检测能力,构建安全可靠的支付级“刷脸”体验

MySQL (2)

Preparation of hemoglobin albumin nanoparticles encapsulated by Lumbrokinase albumin nanoparticles / erythrocyte membrane

笔试强训第19天

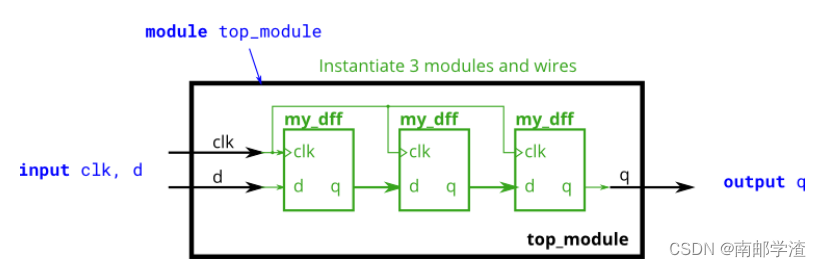

Verilog grammar basics HDL bits training 03

Technical post | the three most common network card software problems of a40i are analyzed for you one by one

随机推荐

45. Record the training process of orienmask and the process of deploying Yunshi technology depth camera

FTXUI基础笔记(checkbox复选框组件)

MySQL advanced (b)

Kubernetes technology and Architecture (V)

引用URL的标签属性href与src的区别是什么?

trivy 源码分析(扫描镜像中的漏洞信息)

维密萎靡,曾经“性感”现在真的“凉了”!

序列模型(一)- 循环序列模型

Ripple test of DC DC switching power supply

除去不必要的字段

Integrated design of signal processing system - minimum order IIR digital high pass filter

The ability to detect movement in vivo and build a safe and reliable payment level "face brushing" experience

Verilog语法基础HDL Bits训练 03

无线定位技术实验二 TDOA最小二乘定位法

The ability to detect movement in vivo and build a safe and reliable payment level "face brushing" experience

Weimi is depressed. Once "sexy", now it is really "cool"!

Web3流量聚合平台Starfish OS,给玩家元宇宙新范式体验

支持向量机(理解、推导、matlab例子)

342个中、英文等NLP开源数据集分享

orangePi3 lts