当前位置:网站首页>Text detection - traditional

Text detection - traditional

2022-07-22 02:54:00 【wzw12315】

Character detection is a very important part in the process of character recognition , The main goal of text detection is to detect the position of text area in the picture , To facilitate subsequent character recognition , Only when the text area is found , To identify its content .

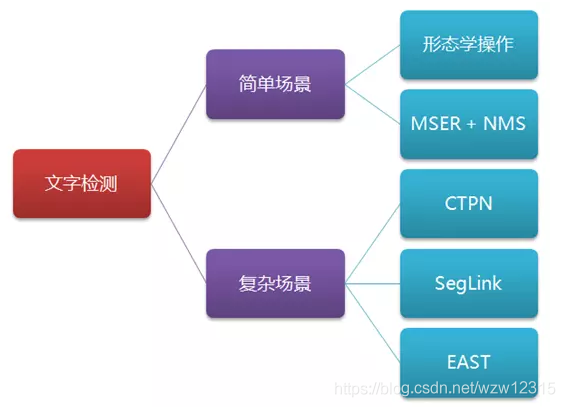

There are two main scenarios for text detection , One is a simple scene , The other is complex scenes . among , Text detection in simple scenes is relatively simple , For example, like book scanning 、 Screen capture 、 Or high definition 、 Regular photos, etc ; And complex scenes , It mainly refers to natural scenes , It's a little bit more complicated , Like a billboard on the street 、 Product packaging box 、 Instructions on the equipment 、 Trademarks and so on , There is a complex background 、 The light is flickering 、 Angle tilt 、 To distort 、 Lack of clarity, etc , Text detection is more difficult .

Simple scene 、 Text detection methods commonly used in complex scenes , Including morphological operations 、MSER+NMS、SWT、CTPN、SegLink、EAST Other methods :

1、 Simple scene : Morphological operation

By using image morphology in computer vision , Including inflation 、 Basic operation of corrosion , The text detection of simple scene can be realized , For example, detect the position of the text area in the screenshot

in ,“ inflation ” It is to expand the highlighted part of the image , Make the white area more ;“ corrosion ” The highlight of the image is nibbled , Make black areas more . By inflating 、 A series of operations of corrosion , The outline of the text area can be highlighted , And eliminate some border lines , Then find out the position of the text area through the method of finding the outline . The main steps are as follows :

- Read the picture , And turn it into a grayscale image

- Image binarization , Or reduce noise first and then binarization , In order to simplify the handling of

- inflation 、 Corrosion operation , Highlight the outline 、 Eliminate border lines

- Find the outline , Remove borders that don't fit the text

- Returns the result of text detection

import numpy as np

import cv2

def traditional_image_processing(image):

# Convert to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Use sharpening , Highlight the high-frequency features of the image , It seems useless

#gray = cv2.filter2D(gray, -1,kernel=np.array([[0, -1, 0], [-1, 5, -1], [0, -1, 0]], np.float32)) # Filter the image , Sharpening operation

#gray = cv2.filter2D(gray, -1, kernel=np.array([[0, -1, 0], [-1, 5, -1], [0, -1, 0]], np.float32))

# utilize Sobel Edge detection generates a binary graph

sobel = cv2.Sobel(gray, cv2.CV_8U, 0, 1, ksize=3)

cv2.imshow("sobel",sobel)

#gradY = cv2.Sobel(sobel, ddepth=cv2.CV_8U, dx=0, dy=1,ksize=3)

#sobel = cv2.subtract(sobel, gradY) # Image fusion using subtraction ?

# Two valued

ret, binary = cv2.threshold(sobel, 0, 255, cv2.THRESH_OTSU + cv2.THRESH_BINARY)

# inflation 、 corrosion

element1 = cv2.getStructuringElement(cv2.MORPH_RECT, (30, 9))

element2 = cv2.getStructuringElement(cv2.MORPH_RECT, (24, 6))

# Inflate once , Let the outline stand out

dilation = cv2.dilate(binary, element2, iterations=1)

# Corrode once , Get rid of the details

erosion = cv2.erode(dilation, element1, iterations=1)

# Expand again , Make the outline more obvious

dilation2 = cv2.dilate(erosion, element2, iterations=2)

# Find outline and filter text area

region = []

_,contours, hierarchy = cv2.findContours(dilation2, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

for i in range(len(contours)):

cnt = contours[i]

# Calculate the contour area , And screen out small areas

area = cv2.contourArea(cnt)

if (area < 1000):

continue

# Find the smallest rectangle

rect = cv2.minAreaRect(cnt)

print("rect is: ")

print(rect)

# box Is the coordinates of four points

box = cv2.boxPoints(rect)

box = np.int0(box)

# Calculate the height and width

height = abs(box[0][1] - box[2][1])

width = abs(box[0][0] - box[2][0])

# According to the characters , Sift through the thin rectangles , Leave the flat

if (height > width * 1.3):

continue

region.append(box)

# Draw the outline

for box in region:

cv2.drawContours(img, [box], 0, (0, 255, 0), 2)

cv2.imshow('img', img)

if __name__ == '__main__':

img = cv2.imread('22.png', cv2.IMREAD_COLOR)

traditional_image_processing(img)

cv2.waitKey(0)2、 Simple scene :MSER+NMS Detection method

MSER(Maximally Stable Extremal Regions, Maximum stable extremum region ) It is a popular traditional method of text detection ( Compared with deep learning AI For text detection ), In tradition OCR Widely used in , In some cases , Fast and accurate .

MSER The algorithm is in 2002 Bring up the , It is mainly based on the idea of watershed . The idea of watershed algorithm comes from topography , Treat images as natural landforms , The gray value of each pixel in the image represents the altitude of the point , Each local minimum and region is called a catchment basin , The boundary between the two catchment basins is the watershed .

MSER The process is like this , Take different threshold value of a gray image for binary processing , Threshold from 0 to 255 Increasing , This increasing process is like the rising water surface of a piece of land , As the water level goes up , Some of the lower areas will be gradually flooded , A bird's-eye view of the sky , The earth becomes land 、 Two parts of the water , And the waters are expanding . In this “ Diffuse water ” In the process of , Some of the connected areas in the image change little , It didn't even change , Then this region is called the maximum stable extremum region . On an image with words , Text area due to color ( Gray value ) It's consistent , So in the horizontal plane ( threshold ) In the process of continuous growth , It won't be “ Flood ”, It is not until the threshold value increases to the gray value of the text itself “ Flood ”. This algorithm can be used to roughly locate the position of the text area in the image .

It sounds like a very complicated process , Fortunately OpenCV Built in MSER The algorithm of , Can be called directly , Greatly simplifies the processing process .

def mser_image_processing(image):

gray = cv2.cvtColor(image,cv2.COLOR_BGR2GRAY)

visual = image.copy()

original = gray.copy()

mser = cv2.MSER_create()

regions,_=mser.detectRegions(gray)

hulls = [cv2.convexHull(p.reshape(-1,1,2)) for p in regions]

cv2.polylines(image,hulls,1,(0,255,0))

cv2.imshow("image",image)

keep=[]

for c in hulls:

x,y,w,h = cv2.boundingRect(c)

keep.append([x,y,x+w,y+h])

#cv2.rectangle(visual,(x,y),(x+w,y+h),(255,255,0),1)

keep = np.array(keep)

boxes = nms(keep,0.5)

for box in boxes:

cv2.rectangle(visual, (box[0], box[1]), (box[2], box[3]), (255, 0, 0), 1)

cv2.imshow("hulls",visual)

# NMS Method (Non Maximum Suppression, Non maximum suppression )

def nms(boxes, overlapThresh):

if len(boxes) == 0:

return []

if boxes.dtype.kind == "i":

boxes = boxes.astype("float")

pick = []

# Take four coordinate arrays

x1 = boxes[:, 0]

y1 = boxes[:, 1]

x2 = boxes[:, 2]

y2 = boxes[:, 3]

# Calculate the area array

area = (x2 - x1 + 1) * (y2 - y1 + 1)

# Sort by score ( If there is no confidence score , It can be sorted by coordinates from small to large , Such as the coordinates in the lower right corner )

idxs = np.argsort(y2)

# To traverse the , And delete duplicate boxes

while len(idxs) > 0:

# Put the bottom right box in pick Array

last = len(idxs) - 1

i = idxs[last]

pick.append(i)

# Find the maximum and minimum coordinates in the remaining boxes

xx1 = np.maximum(x1[i], x1[idxs[:last]])

yy1 = np.maximum(y1[i], y1[idxs[:last]])

xx2 = np.minimum(x2[i], x2[idxs[:last]])

yy2 = np.minimum(y2[i], y2[idxs[:last]])

# Calculate the ratio of overlapping area to corresponding box , namely IoU

w = np.maximum(0, xx2 - xx1 + 1)

h = np.maximum(0, yy2 - yy1 + 1)

overlap = (w * h) / area[idxs[:last]]

# If IoU Greater than the specified threshold , Delete

idxs = np.delete(idxs, np.concatenate(([last], np.where(overlap > overlapThresh)[0])))

return boxes[pick].astype("int")

if __name__ == '__main__':

img = cv2.imread('13.png', cv2.IMREAD_COLOR)

mser_image_processing(img)

cv2.waitKey(0)detection result :

边栏推荐

猜你喜欢

有一说一,要搞明白优惠券架构是如何演化的,只需10张图!

45W performance release +2.8k OLED full screen ASUS lingyao x 142022 elite temperament efficient weapon

quartz简单用法及其es-job

Leetcode 104. 二叉树的最大深度

I, AI doctoral student, online crowdfunding research topic

Doctor application | the City University of Hong Kong's Liu Chen teacher group recruits doctors / postgraduates / Masters /ra

阿里云技术专家杨泽强:弹性计算云上可观测能力构建

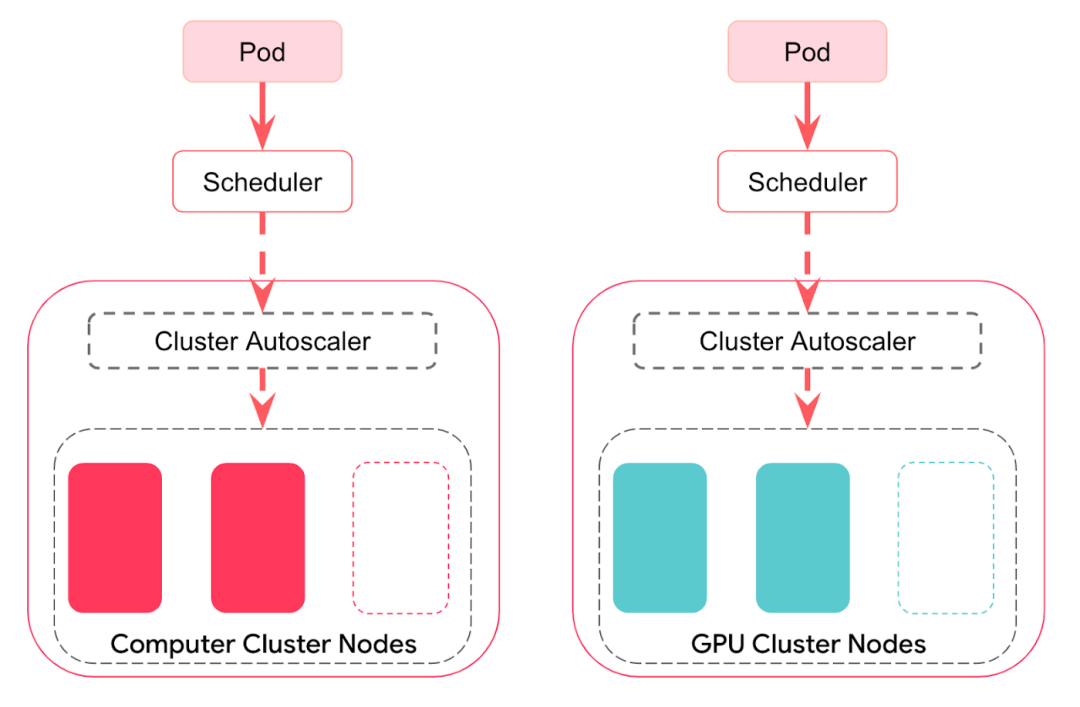

How airbnb realizes dynamic expansion of kubernetes cluster

荐号 | 真正的卓越者,都在践行“人生最优策略”,推荐这几个优质号

45W性能释放+2.8K OLED全面屏 华硕灵耀X 14 2022精英气质高效利器

随机推荐

COM编程入门1-创建项目并编写接口

OpenCV:如何去除票据上的印章

Bootloader series I -- Analysis of ARM processor startup process

2019杭电多校 第九场 6684-Rikka with Game【博弈题】

How airbnb realizes dynamic expansion of kubernetes cluster

2019牛客暑期多校训练营(第六场)D-Move 【暴力枚举】

Raspberry pie 3B builds Flink cluster

接口测试经典面试题:Session、cookie、token有什么区别?

Number of pairs (dynamic open point)

网页监控----Mjpg‐streamer移植

Unity2D~对周目解密小游戏练习(三天完成)

Mutex和智能指针替代读写锁

Codeforces Round #578 (Div. 2) C - Round Corridor 【数论+规律】

Why can redis single thread be so fast

2019牛客暑期多校训练营(第七场)B-Irreducible Polynomial 【数论】

MySQL进阶

Baiyuechen research group of Fudan University is looking for postdoctoral and scientific research assistants

Opencv: how to remove the seal on the bill

MySQL45讲笔记-字符串前缀索引&MySQL刷脏页分析

quartz簡單用法及其es-job