Building a Flink Cluster on Raspberry Pi 3B

2022-07-22 02:46:00 【Huawei Cloud】

Welcome to my GitHub

All of Xinchen's original articles, together with the supporting source code, are categorized and summarized here: https://github.com/zq2599/blog_demos

- This hands-on exercise uses two Raspberry Pi 3B boards to build a Flink 1.7 cluster in standalone cluster mode (Cluster Standalone);

Steps

- Prepare the operating system;

- Install the JDK;

- Configure the hosts file;

- Install Flink 1.7;

- Configure the Flink parameters;

- Set up password-free SSH login between the two Raspberry Pis;

- Start the Flink cluster;

- Deploy a Flink application to verify that the environment works;

Raspberry Pi operating system

- Both Raspberry Pis run 64-bit Debian. For detailed installation steps, see the article "Installing a 64-bit operating system on Raspberry Pi 3B (no monitor, keyboard, or mouse required)";

Installing the JDK

- The JDK installation steps are described in detail in "Installing a 64-bit operating system on Raspberry Pi 3B (no monitor, keyboard, or mouse required)" and are not repeated here. Note that the JDK must be installed on both machines;

Installing Flink 1.7

- Flink is installed on both Raspberry Pis, and the steps are exactly the same on each:

- Download Flink from the official website: https://flink.apache.org/downloads.html

- As the screenshot shows, select the version in the red box:

- The downloaded Flink package is flink-1.7.0-bin-hadoop28-scala_2.11.tgz; put it in the directory /usr/local/work;

- In the /usr/local/work directory, run tar -zxvf flink-1.7.0-bin-hadoop28-scala_2.11.tgz to extract the package. This creates the folder flink-1.7.0
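The extraction step can be wrapped in a small shell helper so that it behaves the same on both boards. This is only a minimal sketch under stated assumptions: the function name `extract_flink` and the `WORK_DIR` variable are hypothetical, and the archive is assumed to have been downloaded into that directory already.

```shell
#!/bin/sh
# Hypothetical helper: unpack the Flink 1.7.0 archive named in the article.
# WORK_DIR defaults to the directory used in the article.
WORK_DIR="${WORK_DIR:-/usr/local/work}"
FLINK_TGZ="flink-1.7.0-bin-hadoop28-scala_2.11.tgz"

extract_flink() {
    # Fail early if the archive has not been copied into WORK_DIR yet.
    if [ ! -f "$WORK_DIR/$FLINK_TGZ" ]; then
        echo "missing archive: $WORK_DIR/$FLINK_TGZ" >&2
        return 1
    fi
    # -z: gunzip, -x: extract, -f: archive file, -C: extract into WORK_DIR.
    tar -zxf "$WORK_DIR/$FLINK_TGZ" -C "$WORK_DIR"
}
```

Calling `extract_flink` on each Raspberry Pi should leave a `flink-1.7.0` folder under `WORK_DIR`, matching the manual `tar` command.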

Machine information

- The IP addresses, hostnames, and roles of the two Raspberry Pi 3B boards are listed below:

| IP address | hostname | role |
|---|---|---|
| 192.168.1.102 | dubhe | Cluster master, i.e. the job node |
| 192.168.1.104 | merak | Cluster slave, i.e. the task node |

Configuration

- Note: the next four steps are configuration. Perform each of them once on both dubhe and merak; the steps and contents are exactly the same on the two machines:

- Open the /etc/hosts file and add the following two lines (use the IP addresses of your own machines):

    192.168.1.102 dubhe
    192.168.1.104 merak

- Open the file /usr/local/work/flink-1.7.0/conf/flink-conf.yaml and change the following four settings:

    jobmanager.rpc.address: dubhe
    jobmanager.heap.size: 512m
    taskmanager.heap.size: 512m
    taskmanager.numberOfTaskSlots: 2

  jobmanager.rpc.address is the address of the master, jobmanager.heap.size is the heap limit of the JobManager, taskmanager.heap.size is the heap limit of the TaskManager, and taskmanager.numberOfTaskSlots is the number of slots on each TaskManager;

- Open the file /usr/local/work/flink-1.7.0/conf/masters and keep only the following line, which makes dubhe the master:

    dubhe:8081

- Open the file /usr/local/work/flink-1.7.0/conf/slaves and keep only the following line, which makes merak the slave:

    merak

- The configuration is now complete, and the cluster can be started;
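Because the same four settings have to be applied on both machines, editing flink-conf.yaml by script can reduce typing mistakes. The following is a minimal sketch, not the author's method: the helper names `set_conf` and `apply_article_settings` are hypothetical, and the sketch assumes simple values without `|` or `&` characters.

```shell
#!/bin/sh
# Hypothetical helper: set "key: value" in a Flink YAML config file.
# An existing uncommented entry is replaced; otherwise the entry is appended.
set_conf() {
    key="$1"; value="$2"; file="$3"
    # Escape the dots in the key so they match literally in the patterns.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^$key_re:" "$file"; then
        tmp="$file.tmp.$$"
        sed "s|^$key_re:.*|$key: $value|" "$file" > "$tmp" && mv "$tmp" "$file"
    else
        printf '%s: %s\n' "$key" "$value" >> "$file"
    fi
}

# Apply the four settings listed in the article to the given config file.
apply_article_settings() {
    conf="$1"
    set_conf jobmanager.rpc.address dubhe "$conf"
    set_conf jobmanager.heap.size 512m "$conf"
    set_conf taskmanager.heap.size 512m "$conf"
    set_conf taskmanager.numberOfTaskSlots 2 "$conf"
}
```

On each machine this would be called as `apply_article_settings /usr/local/work/flink-1.7.0/conf/flink-conf.yaml`. Running it twice gives the same result, since existing entries are replaced rather than duplicated.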

Starting the cluster

- On dubhe, run the following command to start the whole cluster:

    /usr/local/work/flink-1.7.0/bin/start-cluster.sh

- The console asks for the password of the root account on merak. Type it and press Enter, and the startup completes:

    root@dubhe:~# /usr/local/work/flink-1.7.0/bin/start-cluster.sh
    Starting cluster.
    Starting standalonesession daemon on host dubhe.
    root@merak's password:
    Starting taskexecutor daemon on host merak.

- Open http://192.168.1.102:8081 in a browser; the Flink web page should appear.

- The cluster is now set up and running. Next comes testing and verification;
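Besides opening the web page, the running cluster can be checked from the command line through the REST interface served on the same port. The sketch below assumes that the `/overview` endpoint returns JSON containing a `slots-total` field; the helper name `slots_total` is hypothetical, and the parsing relies on a simple `sed` pattern rather than a real JSON parser.

```shell
#!/bin/sh
# Hypothetical helper: read "slots-total" from the JSON that Flink's
# REST endpoint /overview returns (the JSON is read from standard input).
slots_total() {
    sed -n 's/.*"slots-total":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Example call against the JobManager address used in this article
# (commented out because it needs the running cluster):
#   curl -s http://192.168.1.102:8081/overview | slots_total
```

With one TaskManager configured for two slots, the reported value should be 2 once merak has registered with the master.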

Configuring password-free SSH login (optional)

- Starting the cluster requires typing merak's password, which is a little inconvenient, and stopping the cluster with stop-cluster.sh requires it again. It is therefore recommended to configure password-free SSH login between the two machines. For the specific steps, see the article "Password-free SSH login between multiple machines under Docker". Although the title says "under Docker", the settings in that article also work on the Raspberry Pi;
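For readers who do not have the referenced article at hand, key-based login is commonly set up with `ssh-keygen` and `ssh-copy-id`. The following is a minimal sketch under that assumption, not a summary of the referenced article; the helper name `ensure_ssh_key` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create an SSH key pair only if none exists yet.
ensure_ssh_key() {
    key_file="${1:-$HOME/.ssh/id_rsa}"
    if [ -f "$key_file" ]; then
        echo "key already exists: $key_file"
        return 0
    fi
    mkdir -p "$(dirname "$key_file")"
    # -N "" sets an empty passphrase so that logins need no interaction.
    ssh-keygen -t rsa -N "" -f "$key_file"
}

# On dubhe (commented out so that sourcing this file changes nothing):
#   ensure_ssh_key
#   ssh-copy-id root@merak    # asks for merak's root password one last time
#   ssh root@merak hostname   # should now log in without a password prompt
```

Repeating the same commands on merak with `root@dubhe` as the target gives password-free login in both directions.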

Verifying the cluster

To verify that the cluster works, I prepared a Flink application adapted from the classic official demo. It consumes real-time messages from Wikipedia and computes, in real time, the number of bytes each editor modified within 15 seconds. The application is built as the file wikipediaeditstreamdemo-1.0-SNAPSHOT.jar, which can be downloaded here: https://download.csdn.net/download/boling_cavalry/10870815

PS: Downloading the file costs one CSDN point. The author wanted to make it a free download, but one point is the minimum the system allows;

Submit the downloaded wikipediaeditstreamdemo-1.0-SNAPSHOT.jar file through the web page as follows:

- Click the Upload button in the red box in the screenshot and submit the file:

- Set the parameters as shown in the screenshot: com.bolingcavalry.StreamingJob in red box 2 is the application class name, and red box 3 is the parallelism. The current environment has only two task slots, so set it to 2. Clicking the Submit button in red box 4 starts the job:

- The screenshot shows the output of the real-time computation on Wikipedia data:

For details about this Flink application, see the article "Flink in practice: consuming real-time Wikipedia messages";

The Flink cluster setup and its verification are now complete. Because of the hardware limits, complex computation and processing are not practical, but the environment is well suited to learning and experiments. I hope this article offers some reference when you set up your own environment;
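The same submission can also be made from the command line with Flink's `flink run` client, using `-c` for the entry class and `-p` for the parallelism. The sketch below only prints the command so that it can be reviewed first; the helper name `submit_cmd` and the `FLINK_HOME` variable are hypothetical, and the jar is assumed to have been copied to dubhe.

```shell
#!/bin/sh
# Hypothetical helper: print the CLI equivalent of the web submission.
FLINK_HOME="${FLINK_HOME:-/usr/local/work/flink-1.7.0}"

submit_cmd() {
    jar="$1"
    # Default parallelism is 2, matching the two task slots in this cluster.
    parallelism="${2:-2}"
    # -c: entry class of the job, -p: job parallelism.
    echo "$FLINK_HOME/bin/flink run -c com.bolingcavalry.StreamingJob -p $parallelism $jar"
}

# Example (prints the command without running it):
#   submit_cmd /usr/local/work/wikipediaeditstreamdemo-1.0-SNAPSHOT.jar
```

The jar location in the example is an assumption; any path readable on dubhe works.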

Welcome to follow the Huawei Cloud blog: Programmer Xinchen

You are not alone on the road of learning; Xinchen's original articles will accompany you all the way…

边栏推荐

- How the computer accesses the Internet (I) message generation DNS

- rust 文件读写操作

- Chapter 2 software process and thought section 1 Foundation

- Directory and file management

- MySQL advanced (XIV) implementation method of batch update and batch update of different values of multiple records

- Basic principle and configuration of switch

- un7.20:如何同时将关联表中的两个属性展示出来?

- 请教个问题,按照快速上手的来,flink SQL 建表后查询,出来表结构,但是有个超时报错,怎么办?

- three hundred and thirteen billion one hundred and thirty-one million three hundred and thirteen thousand one hundred and twenty-three

- Nature | 杨祎等揭示宿主内进化或可导致肠道共生菌致病

猜你喜欢

数据治理过程中会遇到那些数据问题?

交换机基本原理与配置

![[H3C device networking configuration]](/img/e7/d18d6b03e43fdfcf578f654520b162.png)

[H3C device networking configuration]

代码表征预训练语言模型学习指南:原理、分析和代码

Precautions for selecting WMS storage barcode management system

With a wave of operations, I have improved the efficiency of SQL execution by 10000000 times

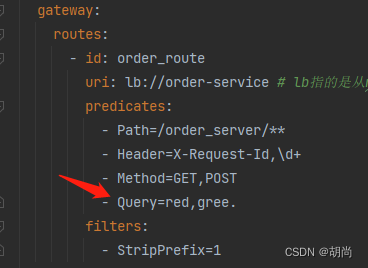

Gateway route assertion factory, filter factory, cross domain processing

It takes only 10 pictures to figure out how the coupon architecture evolved!

Vmware Workstation Pro虚拟机网络三种网卡类型及使用方式

Leetcode 104. Maximum depth of binary tree

随机推荐

[Development Tutorial 4] crazy shell · humanoid hip-hop robot PC upper computer online debugging

Interview Beijing XX technology summary

Chinese herbal medicine recognition based on deep neural network

Network address translation (NAT)

mysql.h: No such file or directory

Leetcode 104. 二叉树的最大深度

MySQL性能优化(三):深入理解索引的这点事

Resolved (selenium operation Firefox browser error) typeerror:__ init__ () got an unexpected keyword argument ‘firefox_ options‘

网络安全(4)

SQL server数据库增量更新是根据 where 子句来识别的吗? 那做不到流更新吧? 每个表要

Fluent introduces the graphic verification code and retains the sessionid

Why does a very simple function crash

小米12S Ultra产品力这么强老外却买不到 雷军:先专心做好中国市场

Force buckle 1260 2D mesh migration

Network wiring and number system conversion

选择WMS仓储条码管理系统的注意事项

【微信小程序】camera系统相机(79/100)

CTF problem solving ideas

QT | QT project documents Detailed explanation of pro file

调用百度AI开放平台实现图片文字识别