当前位置:网站首页>The difference between gbdt and XGB, and the mathematical derivation of gradient descent method and Newton method

The difference between gbdt and XGB, and the mathematical derivation of gradient descent method and Newton method

2020-11-06 01:28:00 【Elementary school students in IT field】

Why the gradient descent method and Newton method are introduced ?

Two algorithms are mentioned here GBDT and XGBoost, All two are boosting Model .

GBDT and xgb The objective function of is different , At the same time, aiming at the error function in the objective function L(θ) There are also differences in the way of fitting :

- GBDT Let's use the first order Taylor expansion to expand two terms , Make an approximation

- xgboost Let's use the second order Taylor expansion to expand the three terms , Make an approximation

Words mean , - GBDT The gradient descent method is used to optimize in function space

- XGBoost Using Newton's method to optimize in function space

The final objective function only depends on the first and second derivatives of each data point on the error function .

The error function can be customized , Let's say the square loss function : ( y i , y i ) = ( y i − y i ) 2 (yi,y^i) = (yi−y^i)2 (yi,yi)=(yi−yi)2, or logistic Loss function

More introductions and suggestions to listen to :https://study.163.com/course/courseMain.htm?courseId=1006401020&share=2&shareId=400000000645014

1. The derivation of the gradient descent method

Gradient descent method is widely used in machine learning and deep learning , The general course or textbook will explain the gradient descent method in a visualized way ( Quadratic curve 、 Lower convex surface, etc ), I think we all know how to use visualization to illustrate the effectiveness of gradient descent method . here , I'm not going to repeat this kind of figurative explanation . I use mathematical derivation to prove the effectiveness of the gradient descent method .

We should all know the Taylor expansion of function of one variable , The formula is as follows :

f ( x ) = f ( x 0 ) + f ’ ( x 0 ) 1 ! ( x − x 0 ) + f ’ ’ ( x 0 ) 2 ! ( x − x 0 ) 2 + f ’ ’ ’ ( x 0 ) 3 ! ( x − x 0 ) 3 + … f(x)=f(x_0)+\frac{f’(x_0)}{1!}(x-x_0)+\frac{f’’(x_0)}{2!}(x-x_0)^2+\frac{f’’’(x_0)}{3!}(x-x_0)^3+… f(x)=f(x0)+1!f’(x0)(x−x0)+2!f’’(x0)(x−x0)2+3!f’’’(x0)(x−x0)3+…

Let's just take the first two terms of the formula on the right , That's one “ About equal to ” Result :

f ( x ) = f ( x 0 ) + f ’ ( x 0 ) 1 ! ( x − x 0 ) f(x)=f(x_0)+\frac{f’(x_0)}{1!}(x-x_0) f(x)=f(x0)+1!f’(x0)(x−x0)

remember : Δ x = x − x 0 \Delta x=x-x_0 Δx=x−x0, The above formula becomes :

f ( x ) = f ( x 0 ) + f ’ ( x 0 ) 1 ! Δ x f(x)=f(x_0)+\frac{f’(x_0)}{1!}\Delta x f(x)=f(x0)+1!f’(x0)Δx

Our goal is to make the following equation hold in the iterative process , In other words, it is hoped that the function value will gradually decrease during the iteration process , To describe in mathematical language is : f ( x n + 1 ) ≤ f ( x n ) f(x_{n+1})\leq f(x_{n}) f(xn+1)≤f(xn)

Easy to think of , It should be constructed :

Δ x = − f ’ ( x 0 ) \Delta x=-f’(x_0) Δx=−f’(x0)

here :

f ( x ) = f ( x 0 ) − f ’ ( x 0 ) 2 f(x)=f(x_0)-f’(x_0)^2 f(x)=f(x0)−f’(x0)2

Write it in iterative form :

f ( x n + 1 ) = f ( x n ) − f ’ ( x n ) 2 f(x_{n+1})=f(x_{n})-f’(x_{n})^2 f(xn+1)=f(xn)−f’(xn)2

because f ’ ( x ) 2 ≥ 0 f’(x)^2\geq 0 f’(x)2≥0, We have completed the proof of the effectiveness of gradient descent . The formula of parameter iterative updating summarized from the above steps is as follows :

f ( x n + 1 ) = f ( x n ) − f ’ ( x n ) 2 f(x_{n+1})=f(x_{n})-f’(x_{n})^2 f(xn+1)=f(xn)−f’(xn)2

The above steps prove the effectiveness of gradient descent on a function of one variable . It is easy to generalize to multivariate functions . in addition , In multivariate functions , It can also be added that the gradient direction is the fastest direction of descent .

See : You know Why does gradient descent find a minimum ?

2. Newton method

That's the gradient descent method , By the way, the derivation of Newton's method . Because Newton's method is also derived from Taylor expansion .

Keep watching Taylor unfold :

f ( x ) = f ( x 0 ) + f ’ ( x 0 ) 1 ! ( x − x 0 ) + f ’ ’ ( x 0 ) 2 ! ( x − x 0 ) 2 + f ’ ’ ’ ( x 0 ) 3 ! ( x − x 0 ) 3 + … f(x)=f(x_0)+\frac{f’(x_0)}{1!}(x-x_0)+\frac{f’’(x_0)}{2!}(x-x_0)^2+\frac{f’’’(x_0)}{3!}(x-x_0)^3+… f(x)=f(x0)+1!f’(x0)(x−x0)+2!f’’(x0)(x−x0)2+3!f’’’(x0)(x−x0)3+…

still , Let's take the right front 2 term :

f ( x ) = f ( x 0 ) + f ’ ( x 0 ) 1 ! ( x − x 0 ) f(x)=f(x_0)+\frac{f’(x_0)}{1!}(x-x_0) f(x)=f(x0)+1!f’(x0)(x−x0)

Take derivatives on both sides of the equation :

f ’ ( x ) = f ’ ( x 0 ) + f ’ ’ ( x 0 ) 1 ! ( x − x 0 ) f’(x)=f’(x_0)+\frac{f’’(x_0)}{1!}(x-x_0) f’(x)=f’(x0)+1!f’’(x0)(x−x0)

f ’ ( x ) = f ’ ( x 0 ) + f ’ ’ ( x 0 ) 1 ! Δ x f’(x)=f’(x_0)+\frac{f’’(x_0)}{1!}\Delta x f’(x)=f’(x0)+1!f’’(x0)Δx

According to the nature of calculus , f ( x ) f(x) f(x) When you take the minimum , Yes f ’ ( x ) = 0 f’(x)=0 f’(x)=0, Let's put this property into the equation above , Yes :

0 = f ’ ( x 0 ) + f ’ ’ ( x 0 ) 1 ! Δ x 0=f’(x_0)+\frac{f’’(x_0)}{1!}\Delta x 0=f’(x0)+1!f’’(x0)Δx

Δ x = − f ’ ( x 0 ) f ’ ’ ( x 0 ) \Delta x=-\frac{f’(x_0)}{f’’(x_0)} Δx=−f’’(x0)f’(x0)

In this way, we get the parameter iterative updating formula of Newton method as follows :

x n + 1 = x n − f ’ ( x n ) f ’ ’ ( x n ) x_{n+1}=x_{n}-\frac{f’(x_n)}{f’’(x_n)} xn+1=xn−f’’(xn)f’(xn)

3. The difference between the gradient descent method and Newton's method

From the above proof process, we can see that , Although both gradient descent method and Newton method can be derived by Taylor expansion , But there's a little bit of a difference in the way the reasoning is based .

In practice , Newton method and gradient descent method are widely used in machine learning . The difference between the two is actually written in many blogs , such as : gradient descent or Quasi Newton method ?

4. Quasi Newton method

In the parameter iterative updating formula of Newton method above , We can see f ’ ’ ( x 0 ) f’’(x_0) f’’(x0) It's in the denominator . remember , The mathematical derivation above is a function of one variable , For multivariate functions , The existence of this denominator is equivalent to calculating Hessian The inverse of the matrix , It's very difficult and time-consuming . therefore , Many variations of Newton's algorithm have appeared , This kind of deformation is called quasi Newton algorithm .BFGS It's an iterative method to approximate the Hessian matrix . and BFGS We need to store the approximate Hessian matrix , So there's an improved version L-BFGS.

版权声明

本文为[Elementary school students in IT field]所创,转载请带上原文链接,感谢

边栏推荐

- ES6 essence:

- 带你学习ES5中新增的方法

- Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

- Summary of common algorithms of linked list

- Our best practices for writing react components

- Group count - word length

- Summary of common string algorithms

- 加速「全民直播」洪流,如何攻克延时、卡顿、高并发难题?

- ipfs正舵者Filecoin落地正当时 FIL币价格破千来了

- 一篇文章带你了解CSS3圆角知识

猜你喜欢

What to do if you are squeezed by old programmers? I don't want to quit

Architecture article collection

axios学习笔记(二):轻松弄懂XHR的使用及如何封装简易axios

一篇文章教会你使用Python网络爬虫下载酷狗音乐

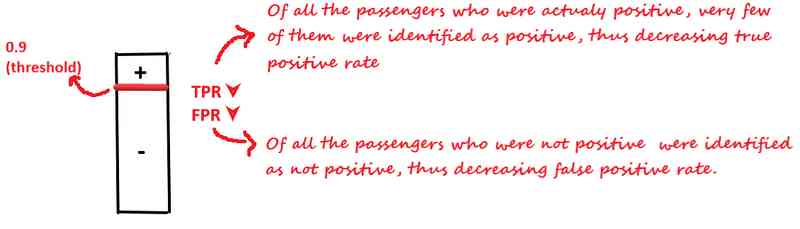

How to select the evaluation index of classification model

ES6学习笔记(二):教你玩转类的继承和类的对象

PHPSHE 短信插件说明

Python saves the list data

How to use Python 2.7 after installing anaconda3?

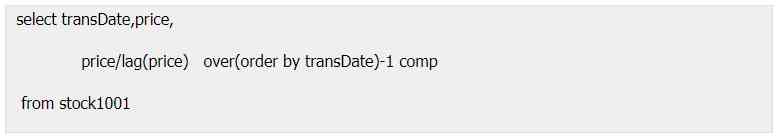

Calculation script for time series data

随机推荐

带你学习ES5中新增的方法

PHPSHE 短信插件说明

6.6.1 localeresolver internationalization parser (1) (in-depth analysis of SSM and project practice)

Synchronous configuration from git to consult with git 2consul

Python download module to accelerate the implementation of recording

High availability cluster deployment of jumpserver: (6) deployment of SSH agent module Koko and implementation of system service management

After reading this article, I understand a lot of webpack scaffolding

一篇文章教会你使用HTML5 SVG 标签

Did you blog today?

EOS创始人BM: UE,UBI,URI有什么区别?

Python Jieba segmentation (stuttering segmentation), extracting words, loading words, modifying word frequency, defining thesaurus

Interface pressure test: installation, use and instruction of siege pressure test

Summary of common string algorithms

2019年的一个小目标,成为csdn的博客专家,纪念一下

6.1.1 handlermapping mapping processor (1) (in-depth analysis of SSM and project practice)

ipfs正舵者Filecoin落地正当时 FIL币价格破千来了

React design pattern: in depth understanding of react & Redux principle

Save the file directly to Google drive and download it back ten times faster

Filecoin主网上线以来Filecoin矿机扇区密封到底是什么意思

一篇文章带你了解CSS3圆角知识