
Batch gradient descent, stochastic gradient descent, and mini-batch gradient descent

2022-07-21 02:19:00 【Little aer】


Gradient descent

Gradient descent is also known as batch gradient descent. It computes the loss of every sample in an epoch (each epoch covers the entire training set) and averages those losses to obtain the loss for the current epoch. It then computes the gradient by backpropagation and updates the parameters, so the parameters are updated once per epoch.
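The procedure above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the author's code: the linear model `y = w*x + b`, the mean squared error loss, the function name `batch_gradient_descent`, the toy data, and the values of `lr` and `epochs` are all assumptions chosen to keep the example small.

```python
def batch_gradient_descent(xs, ys, lr=0.1, epochs=500):
    """Fit y = w*x + b, updating the parameters once per epoch (assumed MSE loss)."""
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(epochs):
        # Gradient of the squared error, averaged over all m samples.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / m
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / m
        # One parameter update for the whole epoch.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = batch_gradient_descent(xs, ys)
print(w, b)
```

On this noise-free data the fitted `w` and `b` should end up close to 2 and 1.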

Stochastic gradient descent

Stochastic gradient descent (SGD; the meaning of the term has since shifted, and today it usually no longer refers to this strictly per-sample method) computes the loss of each individual sample in an epoch, then computes the gradient by backpropagation and updates the parameters. The parameters are therefore updated once per sample.
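A per-sample update loop might look like the sketch below. As before, the linear model, the squared error loss, the function name `stochastic_gradient_descent`, the toy data, and the hyperparameters are assumptions made for illustration; shuffling the sample order each epoch is a common choice rather than something the text above prescribes.

```python
import random


def stochastic_gradient_descent(xs, ys, lr=0.02, epochs=1000, seed=0):
    """Fit y = w*x + b, updating the parameters after every single sample."""
    w, b = 0.0, 0.0
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for i in indices:
            # Loss and gradient come from one sample only.
            error = w * xs[i] + b - ys[i]
            w -= lr * 2 * error * xs[i]
            b -= lr * 2 * error
    return w, b


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = stochastic_gradient_descent(xs, ys)
print(w, b)
```

The inner loop performs `len(xs)` updates per epoch, compared with a single update per epoch for batch gradient descent.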

Mini-batch gradient descent

Mini-batch gradient descent is also called SGD: the SGD you see in code today generally means mini-batch gradient descent, not per-sample stochastic gradient descent. The samples in an epoch are split into batches of size batch_size. With batch_size=128, for example, the loss is computed over 128 samples and averaged, then the gradient is computed by backpropagation and the parameters are updated, once per batch.
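The batching step can be sketched as follows. The model, loss, function name `minibatch_gradient_descent`, toy data, and hyperparameters are again assumptions; the example uses `batch_size=4` on 20 samples purely so that it stays small, whereas the text above uses 128.

```python
import random


def minibatch_gradient_descent(xs, ys, batch_size=4, lr=0.1, epochs=300, seed=0):
    """Fit y = w*x + b, updating the parameters once per mini-batch."""
    w, b = 0.0, 0.0
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        # Split the epoch's samples into consecutive chunks of batch_size.
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            # Average the gradient over the samples in this batch only.
            grad_w = sum(2 * e * x for e, x in errors) / len(batch)
            grad_b = sum(2 * e for e, _ in errors) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Hypothetical toy data: 20 samples generated from y = 2x + 1.
xs = [i / 10 for i in range(20)]
ys = [2 * x + 1 for x in xs]
w, b = minibatch_gradient_descent(xs, ys)
print(w, b)
```

Setting `batch_size=1` in this sketch reduces it to the per-sample method, and setting `batch_size=len(xs)` reduces it to batch gradient descent.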

Summary

Total number of samples = m, batch_size = 128

| | Stochastic gradient descent | Mini-batch gradient descent | Gradient descent |
|---|---|---|---|
| Samples per loss computation | 1 | batch_size (1 <= batch_size <= m) | m |
| Advantages | Parameters are updated much more frequently, because an update happens after the loss of each sample is computed. | Combines the advantages of stochastic gradient descent and gradient descent. | Each epoch computes the loss from all samples, so the loss better represents how the current classifier performs on the whole training set, and the resulting gradient direction better represents the direction of the global minimum. If the loss function is convex, the global optimum can be found this way. |
| Disadvantages | 1. High computational cost and no effective parallelism. Batch gradient descent can compute the loss with matrix operations in parallel, whereas SGD computes a gradient and updates the parameters after every single sample. 2. It can easily get stuck in a local optimum, which reduces model accuracy. The loss of a single sample cannot stand in for the global loss, so the computed gradient direction deviates from the globally optimal direction. Because there are many samples, the overall loss still stays low, but the loss curve fluctuates heavily. | Avoids the shortcomings of both. | Every loss computation uses all samples, and when the number of samples is very large, parallel computation helps only to a limited extent. The parameters are also updated only once per epoch, after the loss over all samples has been computed, that is, one gradient descent step per epoch, which is very inefficient. |
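To make the difference in update frequency concrete, the short sketch below counts parameter updates per epoch for the three methods. The helper `updates_per_epoch` and the sample count `m=1000` are hypothetical; only `batch_size=128` comes from the text above.

```python
import math


def updates_per_epoch(m, batch_size):
    """Number of parameter updates each method performs in one epoch."""
    return {
        "stochastic": m,                          # one update per sample
        "mini-batch": math.ceil(m / batch_size),  # one update per batch
        "batch": 1,                               # one update per epoch
    }


# m=1000 is an assumed dataset size; batch_size=128 follows the text.
counts = updates_per_epoch(m=1000, batch_size=128)
print(counts)
```

With these numbers the last mini-batch holds only 104 samples, since 7 full batches cover 896 of the 1000 samples.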

边栏推荐

- C language implementation and search set

- [upload range 1-11] basic level: characteristics, analysis and utilization

- BOM browser object model (Part 1) - overview, common events of window object, JS execution mechanism (close advertisement after 5 seconds, countdown case, send SMS countdown case)

- Leetcode sword finger offer 32 - I. print binary tree from top to bottom

- leetcode 剑指 Offer 26. 树的子结构

- See through the "flywheel effect" of household brands from the perspective of three winged birds

- Merge and sort targeted questions

- leetcode 剑指 Offer 11. 旋转数组的最小数字

- kettle

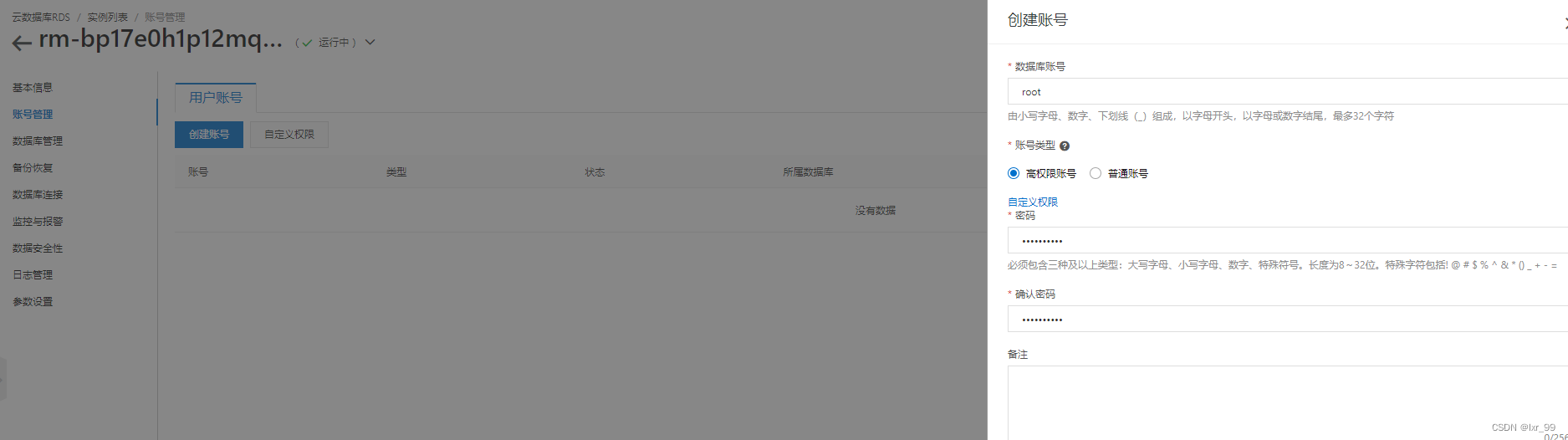

- MySQL metabase & Account Management & Engine

猜你喜欢

【培训课程专用】TEE组件介绍

Infinite connection · infinite collaboration | the first global enterprise communication cloud conference WECC is coming

scala Breaks.break()、Breaks.breakable()、控制抽象

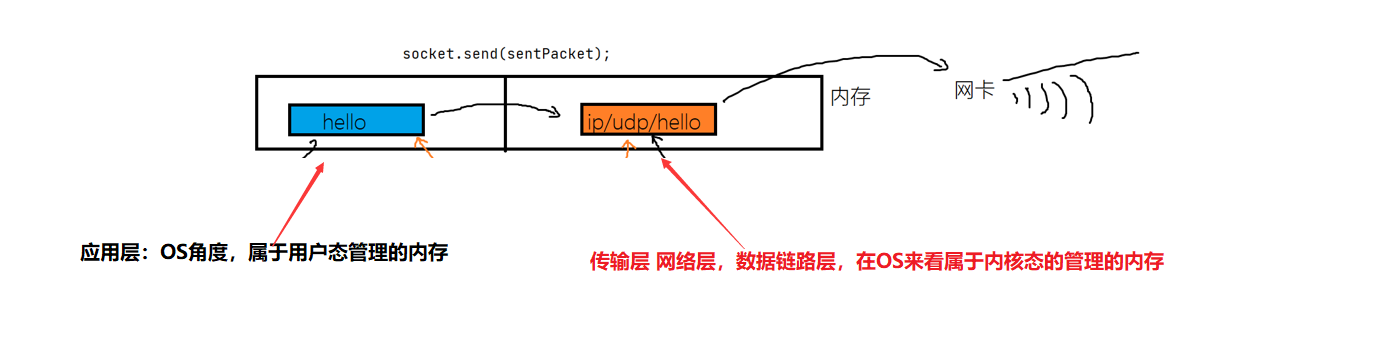

Protocol details of network principles

分享一个好玩的JS小游戏

scala 函数&方法、函数&方法的实现原理

ECS和云数据库管理

Doris Connector 结合 Flink CDC 实现 MySQL 分库分表 Exactly Once精准接入

【C语言进阶】---- 自定义类型详解

Regular expression tutorial notes

随机推荐

36- [go] IO stream of golang

Animation function encapsulation (slow motion animation)

Leetcode sword finger offer 26 Substructure of tree

ResNet知识点补充

leetcode 剑指 Offer 32 - I. 从上到下打印二叉树

mySQL元数据库&账户管理&引擎

MySQL - partition column (horizontal partition vertical partition)

网络与VPC之动手实验

Records from July 18, 2022 to July 25, 2022

kettle

Laravel定时任务

EXCEL的去重去除某个字段后全部操作

DP knapsack problem

[upload range 1-11] basic level: characteristics, analysis and utilization

Three traversals of binary tree in C language

MySQL metabase & Account Management & Engine

Mysql -分区列(横向分区 纵向分区)

进程和线程

Recommend a screenshot tool -snipaste

部分语音特征记录