
Machine learning Basics (4) filters

2022-07-22 12:04:00 【@[email protected]】

Catalog

1.3 Convolution filling padding

1.7 The meaning of convolution

2.1. Square box filtering and mean filtering

1. Convolution

1.1. What is convolution

In image processing, an input image f(x,y) is convolved with a specially designed convolution kernel g(x,y) to produce an output image with effects such as blurring or edge enhancement. Image convolution slides the kernel across the image pixel by pixel; at each position the overlapping values are multiplied element-wise and summed.
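The multiply-and-sum process described above can be sketched in plain NumPy. This is a minimal illustration, not OpenCV's implementation: the function name slide_multiply_sum is made up for this example, it uses no padding and a stride of 1, and it does not flip the kernel (for symmetric kernels such as the mean kernel this makes no difference).

```python
import numpy as np

def slide_multiply_sum(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1), dtype=np.float64)
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            # Window of the image currently covered by the kernel
            window = image[y:y + kh, x:x + kw]
            out[y, x] = np.sum(window * kernel)
    return out

image = np.arange(16, dtype=np.float64).reshape(4, 4)
mean_kernel = np.ones((3, 3)) / 9.0
result = slide_multiply_sum(image, mean_kernel)
print(result.shape)  # (2, 2): without padding the output is smaller than the input
```

Each output value here is the mean of one 3 * 3 window of the input, which is why a kernel of this kind blurs the image.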

1.2. Convolution step

The stride (step length) is the number of pixels the convolution kernel moves at each step. The default is 1. With a stride of 1 the kernel visits every pixel, so the whole image is scanned.

1.3 Convolution filling padding

After convolution, the image becomes smaller in both height and width. To keep the image size unchanged, we pad the border of the image with zeros. The padding P is the number of rings of zeros added.

If the stride is 1, then P = (F - 1) / 2, where F is the convolution kernel size.

1.4 Convolution size

In image convolution the kernel size is usually odd, such as 3 * 3, 5 * 5, or 7 * 7, for two reasons:

By the padding formula above, keeping the image size unchanged with an even kernel such as 4 * 4 would require 1.5 rings of zeros, which is not possible.

An odd-sized filter has a center pixel, which makes it easy to state the position of the filter. This is the anchor point in OpenCV convolution.
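The relations between stride, padding, and kernel size from sections 1.2 to 1.4 can be checked with a few lines of Python. This is a sketch using the standard output-size formula (N + 2P - F) / S + 1; the function names output_size and same_padding are chosen for this example only.

```python
def output_size(n, f, stride=1, padding=0):
    """Output length along one axis for input length n and kernel size f."""
    return (n + 2 * padding - f) // stride + 1

def same_padding(f):
    """Padding that keeps the size unchanged when the stride is 1: P = (F - 1) / 2."""
    return (f - 1) / 2

print(output_size(32, 5))                       # 28: the image shrinks without padding
print(output_size(32, 5, padding=2))            # 32: P = (5 - 1) / 2 keeps the size
print(same_padding(5), same_padding(4))         # 2.0 1.5: an even kernel needs a fractional P
print(output_size(32, 3, stride=2, padding=1))  # 16: a larger stride gives a smaller output
```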

1.6 Convolution API

filter2D(src, ddepth, kernel[, dst[, anchor[, delta[, borderType]]]])

ddepth is the bit depth of the output image, that is, its data type. It is usually set to -1, which means the same type as the source image.

kernel is the convolution kernel itself, given as an ndarray of floating-point values.

anchor is the anchor point, the position within the kernel that is aligned with the pixel being computed. It is optional; the default (-1, -1) means the kernel center.

delta is an optional value added to each result after convolution, like the bias term in a linear equation. The default is 0.

borderType is the border handling mode. It is usually left at its default.

The return value is the processed image data.

Sample code :

# OpenCV Image convolution operation

import cv2

import numpy as np

# Import image

img = cv2.imread('./dog.jpeg')

# A 5x5 kernel of 1/25 replaces each pixel with the mean of its 5x5 neighborhood, so the image becomes blurred.

kernel = np.ones((5, 5), np.float32) / 25

# ddepth = -1 The data type representing the picture remains unchanged

dst = cv2.filter2D(img, -1, kernel)

# Obviously, the image after convolution is blurred .

cv2.imshow('img', np.hstack((img, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

1.7 The meaning of convolution

Different convolution kernels achieve different processing effects. The purpose of the convolution operation is to extract features from the image.

For more background, see the following blog post:

Basic knowledge of convolution operation (Wang Xiaopeng's blog, CSDN)

2. Filtering

The filters in this section all process the image with a sliding window (kernel); the box, mean, and Gaussian filters are plain convolutions. Image filtering suppresses noise in the target image while preserving as much image detail as possible. It is an indispensable step in image preprocessing, and its quality directly affects the effectiveness and reliability of subsequent image processing and analysis.

2.1. Square box filtering and mean filtering

boxFilter(src, ddepth, ksize[, dst[, anchor[, normalize[, borderType]]]]) Box filter.

The convolution kernel of the box filter is a W * H matrix of ones multiplied by a coefficient a:

When normalize = True, a = 1 / (W * H), where W and H are the width and height of the filter.

When normalize = False, a = 1.

We generally use normalize = True, in which case the box filter is equivalent to the mean filter.

blur(src, ksize[, dst[, anchor[, borderType]]]) Mean filter.
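The effect of the normalize flag can be illustrated by building the box kernel by hand. This is a NumPy sketch of the kernel definition above, not a call into OpenCV; box_kernel is a name chosen for this example.

```python
import numpy as np

def box_kernel(width, height, normalize=True):
    """Box kernel: a matrix of ones scaled by a = 1 / (W * H) when normalized."""
    a = 1.0 / (width * height) if normalize else 1.0
    return a * np.ones((height, width), dtype=np.float64)

patch = np.array([[10, 20, 30],
                  [40, 50, 60],
                  [70, 80, 90]], dtype=np.float64)

# Response at the center pixel of the patch for both settings
mean_value = np.sum(patch * box_kernel(3, 3, normalize=True))
sum_value = np.sum(patch * box_kernel(3, 3, normalize=False))
print(mean_value, sum_value)
```

With normalization the response is the neighborhood mean (about 50 here); without it the response is the plain sum of the neighborhood (450 here).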

Case code :

import cv2

import numpy as np

# Import image

img = cv2.imread('./dog.jpeg')

# Box filter; with normalize=True it gives the same result as the mean filter

dst = cv2.boxFilter(img, -1, (5, 5), normalize=True)

# Mean filter; equivalent to filter2D with kernel = np.ones((5, 5), np.float32) / 25

dst = cv2.blur(img, (5, 5))

# Show the original and the blurred image.

cv2.imshow('img', img)

cv2.imshow('dst', dst)

cv2.waitKey(0)

cv2.destroyAllWindows()

2.2. Gaussian filtering

Gaussian filtering convolves the image with a kernel whose weights follow a Gaussian distribution. The key question is therefore how to compute such a kernel, known as the Gaussian template.

GaussianBlur(src, ksize, sigmaX[, dst[, sigmaY[, borderType]]])

ksize is the size of the Gaussian kernel.

sigmaX is the standard deviation along the X axis.

sigmaY is the standard deviation along the Y axis. The default is 0, in which case sigmaY = sigmaX.

If both sigma values are 0, sigma is computed from the width and height of ksize.

Different sigma values give different smoothing effects: the larger the sigma, the stronger the smoothing.
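To make the Gaussian template concrete, here is a NumPy sketch that builds one from ksize and sigma. The function names are chosen for this example. The fallback sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8 follows the rule given in OpenCV's getGaussianKernel documentation, but the kernels produced here are an approximation and are not guaranteed to match OpenCV's values exactly.

```python
import numpy as np

def sigma_from_ksize(ksize):
    """Fallback sigma when none is given (rule from the getGaussianKernel docs)."""
    return 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8

def gaussian_kernel_1d(ksize, sigma):
    if sigma <= 0:
        sigma = sigma_from_ksize(ksize)
    # Sample positions centered on the middle of the kernel
    x = np.arange(ksize, dtype=np.float64) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()  # normalize so the weights sum to 1

def gaussian_kernel_2d(ksize, sigma_x, sigma_y=0.0):
    if sigma_y <= 0:
        sigma_y = sigma_x  # same convention as sigmaY = 0 above
    gx = gaussian_kernel_1d(ksize, sigma_x)
    gy = gaussian_kernel_1d(ksize, sigma_y)
    return np.outer(gy, gx)

kernel = gaussian_kernel_2d(5, sigma_x=1.0)
wide_kernel = gaussian_kernel_2d(5, sigma_x=2.0)
print(np.round(kernel, 4))
```

The center weight of wide_kernel is smaller than that of kernel: a larger sigma spreads the weight over more neighbors, which is why the smoothing becomes stronger.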

Code case :

# Gauss filtering

import cv2

import numpy as np

# Import image

img = cv2.imread('./gaussian.png')

dst = cv2.GaussianBlur(img, (5, 5), sigmaX=1)

cv2.imshow('img', np.hstack((img, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

2.3 Median filtering

The principle of median filtering is simple: given the sorted window values [1, 5, 5, 6, 7, 8, 9], take the middle value (the median, here 6) as the output for that window. Median filtering is very effective against salt-and-pepper noise.

Note that in the median filter API, ksize is a single odd integer, not a tuple.
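A short NumPy sketch shows why the median handles salt-and-pepper noise well. The 3 * 3 patch below is made-up data with one "salt" pixel of value 255 in the middle.

```python
import numpy as np

values = np.array([1, 5, 5, 6, 7, 8, 9])
print(np.median(values))  # 6.0

# A smooth neighborhood with a single noisy pixel in the center
patch = np.array([[10, 12, 11],
                  [13, 255, 12],
                  [11, 10, 12]])

median_value = np.median(patch)  # the outlier is ignored
mean_value = patch.mean()        # the outlier pulls the mean upward
print(median_value, round(mean_value, 2))
```

The median stays at a value typical of the neighborhood, while the mean is dragged far above it by the single outlier.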

# median filtering

import cv2

import numpy as np

# Import image

img = cv2.imread('./papper.png')

# Note that ksize is a single number here

dst = cv2.medianBlur(img, 5)

cv2.imshow('img', np.hstack((img, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

2.4. Bilateral filtering

Bilateral filtering is essentially Gaussian filtering with an extra term. The difference is that bilateral filtering uses both position information and pixel-value information to define the weights in the filter window, whereas Gaussian filtering uses position information only. A bilateral filter can preserve edge detail while filtering out noise in the low-frequency components, but it is not very efficient and takes longer than the other filters.

Bilateral filtering keeps the edges while smoothing the regions inside them.

Its effect is similar to a beauty (skin-smoothing) filter.

dst=bilateralFilter(src, d, sigmaColor, sigmaSpace[, dst[, borderType]])

d: the convolution kernel size

sigmaColor: the sigma used for the pixel-value term

sigmaSpace: the sigma used for the spatial term

The larger the filter radius N (window size 2*N+1), the smoother and blurrier the result. The larger the spatial sigma (sigmas), the blurrier the result. sigmar is the similarity factor for pixel values.

. int d: the diameter of the pixel neighborhood used during filtering. If this value is not positive, it is computed from sigmaSpace.

. double sigmaColor: the filter sigma in color space. The larger this value, the more widely differing colors within the pixel neighborhood are mixed together, producing larger areas of semi-equal color.

. double sigmaSpace: the filter sigma in coordinate space. A large value means that distant pixels with similar colors influence each other, so that sufficiently similar colors in a larger area end up with the same color. When d>0, d specifies the neighborhood size regardless of sigmaSpace; otherwise d is proportional to sigmaSpace.
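The difference between the two weightings can be sketched on a one-dimensional signal with a sharp step. This is a simplified NumPy illustration, not OpenCV's bilateralFilter: the function filter_pixel and its parameter names are chosen for this example, and it filters a single sample.

```python
import numpy as np

def filter_pixel(signal, index, radius, sigma_space, sigma_color=None):
    """Weighted average around signal[index].

    With sigma_color=None only the spatial (Gaussian) weight is used;
    otherwise the weight is also reduced for samples whose value differs
    from the center sample, which is the bilateral idea.
    """
    offsets = np.arange(-radius, radius + 1)
    window = signal[index + offsets]
    weights = np.exp(-(offsets ** 2) / (2.0 * sigma_space ** 2))
    if sigma_color is not None:
        diff = window - signal[index]
        weights = weights * np.exp(-(diff ** 2) / (2.0 * sigma_color ** 2))
    return float(np.sum(weights * window) / np.sum(weights))

# A step edge: dark on the left, bright on the right
signal = np.array([10, 10, 10, 10, 200, 200, 200, 200], dtype=np.float64)

gaussian_value = filter_pixel(signal, 3, radius=2, sigma_space=2.0)
bilateral_value = filter_pixel(signal, 3, radius=2, sigma_space=2.0, sigma_color=20.0)
print(round(gaussian_value, 1), round(bilateral_value, 1))
```

For the last dark sample next to the edge, the purely spatial weighting mixes in the bright side and blurs the step, while the bilateral weighting gives the bright samples almost no weight and leaves the value essentially at 10.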

# Bilateral filtering

import cv2

import numpy as np

# Import image

img = cv2.imread('./lena.png')

dst = cv2.bilateralFilter(img, 7, 20, 50)

cv2.imshow('img', np.hstack((img, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

3. Operators

The operators in this section are high-pass filters, used mainly for edge extraction. They generally work better when the image is first denoised and converted to grayscale. The filters in the previous section, by contrast, are used mainly for noise reduction and beautification.

3.1 Sobel (sobel) operator

An edge is where the pixel value jumps. It is one of the salient features of an image and plays an important role in image feature extraction, object detection, and pattern recognition.

How does the human eye recognize image edges?

For example, suppose a picture contains a line, with a bright region on its left and a dark region on its right. The human eye easily recognizes this line as an edge, that is, a place where the gray value of the pixels changes rapidly.

The Sobel operator takes the first derivative of the image. The larger the first derivative, the greater the change of pixel values in that direction and the stronger the edge signal.

Because the gray values of an image are discrete, the Sobel operator uses a discrete difference operator to approximate the gradient of the image intensity.

An image is two-dimensional, with a width direction and a height direction, so we process the original image with two convolution kernels:

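# x direction: finds vertical edges

dx_Img = cv2.Sobel(img, cv2.CV_64F, dx=1, dy=0, ksize=5)

# y direction: finds horizontal edges

dy_Img = cv2.Sobel(img, cv2.CV_64F, dx=0, dy=1, ksize=5)

ddepth is the bit depth of the output, here cv2.CV_64F. Writing -1 also works, but with an 8-bit input negative gradients are then clipped to 0. dx and dy are the derivative orders in the x and y directions. ksize is the convolution kernel size.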
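The behavior of the two kernels can be sketched with the standard 3 * 3 Sobel kernels in NumPy (the calls above use ksize=5, which has larger kernels). The helper correlate_valid is written for this example and is not an OpenCV function. The test image is synthetic, with a single vertical edge.

```python
import numpy as np

# Standard 3x3 Sobel kernels for the x and y directions
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def correlate_valid(image, kernel):
    """Multiply-and-sum the kernel at every position (no padding, stride 1)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * kernel)
    return out

# Dark on the left, bright on the right: one vertical edge
image = np.zeros((5, 6), dtype=np.float64)
image[:, 3:] = 100.0

gx = correlate_valid(image, SOBEL_X)  # responds to the vertical edge
gy = correlate_valid(image, SOBEL_Y)  # no horizontal edge, so all zeros

# Bright-to-dark edges give negative gradients, which an 8-bit result would lose
gx_flipped = correlate_valid(image[:, ::-1], SOBEL_X)
clipped = np.clip(gx_flipped, 0, 255)
print(gx.max(), gy.max(), gx_flipped.min(), clipped.max())
```

This is the reason for using a signed floating-point depth such as cv2.CV_64F: the bright-to-dark edge disappears entirely once negative values are clipped. Taking the absolute value before converting to 8 bits keeps both kinds of edge.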

Code case :

# sobel operator .

import cv2

import numpy as np

# Import image

img = cv2.imread('./chess.png')#

# x Axis direction , What you get is the vertical edge

dx = cv2.Sobel(img, cv2.CV_64F, 1, 0, ksize=5)

dy = cv2.Sobel(img, cv2.CV_64F, 0, 1, ksize=5)

# The two images can be combined directly with NumPy addition

# dst = dx + dy

# OpenCV addition also works

dst = cv2.add(dx, dy)

cv2.imshow('dx', np.hstack((dx, dy, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

3.2 Scharr operator

Scharr(src, ddepth, dx, dy[, dst[, scale[, delta[, borderType]]]])

When the kernel size is 3, the Sobel kernel above may produce noticeable errors (after all, the Sobel operator only approximates the derivative). To address this, OpenCV provides the Scharr function, which works only with kernels of size 3. It is as fast as the Sobel function but gives more accurate results.

The Scharr operator is very similar to Sobel. It only uses different kernel values (for the x direction, rows of -3 0 3, -10 0 10, -3 0 3), which amplify the pixel differences.

The Scharr operator supports only a 3 * 3 kernel, so it has no ksize parameter.

The Scharr operator finds edges in either the x direction or the y direction in one call, not both.

Setting ksize to -1 in the Sobel operator gives the Scharr operator.

Scharr is good at finding fine edges, but it is used less often.
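The amplification can be seen by applying both x-direction kernels to the same small patch. This NumPy sketch uses the standard 3 * 3 Sobel and Scharr kernels and a made-up patch containing one vertical step.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)

SCHARR_X = np.array([[-3, 0, 3],
                     [-10, 0, 10],
                     [-3, 0, 3]], dtype=np.float64)

# A 3x3 patch with a vertical step: dark columns, then one bright column
patch = np.array([[0, 0, 100],
                  [0, 0, 100],
                  [0, 0, 100]], dtype=np.float64)

sobel_response = np.sum(patch * SOBEL_X)
scharr_response = np.sum(patch * SCHARR_X)
print(sobel_response, scharr_response)
```

The Scharr weights sum to 16 on each side compared with 4 for Sobel, so the same step produces a response four times larger.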

Code case :

# Scharr operator

import cv2

import numpy as np

# Import image

img = cv2.imread('./lena.png')#

# x Axis direction , What you get is the vertical edge

dx = cv2.Scharr(img, cv2.CV_64F, 1, 0)

# y Axis direction , What you get is the horizontal edge

dy = cv2.Scharr(img, cv2.CV_64F, 0, 1)

# The two images can be combined directly with NumPy addition

# dst = dx + dy

# OpenCV addition also works

dst = cv2.add(dx, dy)

cv2.imshow('dx', np.hstack((dx, dy, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

3.3 Laplace operator

The Sobel operator approximates the first derivative: the larger the derivative, the stronger the change and the more likely the pixel lies on an edge. The Laplace operator uses the second derivative instead: at an edge the second derivative passes through 0, and we can use this property to find the edges of an image. There is one caveat: the second derivative is also 0 at positions that are meaningless, such as flat regions, and noise produces spurious responses, so the image should be denoised first.

Laplacian(src, ddepth[, dst[, ksize[, scale[, delta[, borderType]]]]])

It finds edges in both directions at the same time.

It is sensitive to noise, so the image is generally denoised before calling Laplacian.
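The zero-crossing idea is easiest to see in one dimension. The NumPy sketch below uses a made-up intensity profile that rises smoothly from dark to bright, and it approximates the derivatives with finite differences.

```python
import numpy as np

# Intensity profile across a soft edge: flat, rising, flat
signal = np.array([0, 0, 0, 10, 50, 90, 100, 100, 100], dtype=np.float64)

first = np.diff(signal)        # first derivative: peaks at the edge
second = np.diff(signal, n=2)  # second derivative: changes sign at the edge
print(first)
print(second)

# A zero crossing: positive on one side, negative on the other
crossings = [i for i in range(1, len(second) - 1)
             if second[i - 1] > 0 and second[i + 1] < 0]
print(crossings)
```

The second derivative is also 0 in the flat regions at both ends of the profile, but there is no sign change there. Those are the meaningless zeros mentioned above, which is why a sign change is required rather than just a zero value.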

Code case :

# Laplace

import cv2

import numpy as np

# Import image

img = cv2.imread('./chess.png')#

dst = cv2.Laplacian(img, -1, ksize=3)

cv2.imshow('dx', np.hstack((img, dst)))

cv2.waitKey(0)

cv2.destroyAllWindows()

3.4. Edge detection: Canny

The Canny edge detection algorithm is a multi-stage edge detection algorithm developed by John F. Canny in 1986. Many regard it as the optimal edge detection algorithm. The three main criteria for optimal edge detection are:

Low error rate: identify as many actual edges as possible while minimizing false detections caused by noise.

Good localization: the detected edges should be as close as possible to the actual edges in the image.

Minimal response: each edge in the image should be marked only once.

The general steps of Canny edge detection:

Denoise: edge detection is easily affected by noise, so the image is usually denoised first, generally with Gaussian filtering.

Calculate the gradient: apply the Sobel operator to the smoothed image to compute the gradient magnitude and direction.

Non-maximum suppression:

After obtaining the gradient magnitude and direction, traverse the image and remove all points that are not on a boundary.

Implementation: traverse the pixels one by one and check whether the current pixel has the largest gradient among its neighbors along the gradient direction.

For example, suppose points A, B, and C lie along the same gradient direction (the gradient is perpendicular to the edge).

Check whether point A is the local maximum among A, B, and C. If it is, keep the point; otherwise suppress it (set it to zero).
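The suppression rule above can be sketched on a one-dimensional profile of gradient magnitudes taken along the gradient direction. This is a simplified NumPy illustration; an actual Canny implementation does this in 2D, comparing each pixel with its two neighbors along its own gradient direction. The function name non_max_suppress_1d is chosen for this example.

```python
import numpy as np

def non_max_suppress_1d(magnitude):
    """Keep a value only if it is not smaller than both of its neighbors."""
    mag = np.asarray(magnitude, dtype=np.float64)
    # Pad with -inf so the first and last samples have a neighbor on each side
    padded = np.pad(mag, 1, mode="constant", constant_values=-np.inf)
    prev_vals = padded[:-2]
    next_vals = padded[2:]
    keep = (mag >= prev_vals) & (mag >= next_vals)
    return np.where(keep, mag, 0.0)

# Gradient magnitudes across a blurry edge: a wide ridge with one peak
profile = [1, 3, 7, 4, 2]
thin = non_max_suppress_1d(profile)
print(thin)
```

Only the peak of the ridge survives, which turns a thick band of strong gradients into an edge one pixel wide.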

Canny(img, minVal, maxVal, ...)

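Choosing the thresholds is the hard part. minVal and maxVal are the lower and upper thresholds: pixels whose gradient is above maxVal are accepted as edges, pixels below minVal are discarded, and pixels in between are kept only if they connect to an accepted edge. The smaller the thresholds, the more detail is kept. Guidance on choosing these values can be found online.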
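How two thresholds interact can be sketched in one dimension. In Canny, values at or above the upper threshold count as strong edges, and values between the two thresholds are kept only when they connect to a strong edge. The NumPy function below, hysteresis_1d, is a simplified illustration written for this example and is not OpenCV's implementation.

```python
import numpy as np

def hysteresis_1d(magnitude, low, high):
    """Keep strong samples, plus weak samples connected to a strong one."""
    mag = np.asarray(magnitude, dtype=np.float64)
    keep = mag >= high        # strong edges are accepted immediately
    candidate = mag >= low    # weak edges may be accepted later
    changed = True
    while changed:
        grown = keep.copy()
        # Accept a candidate whose left or right neighbor is already kept
        grown[1:] |= keep[:-1] & candidate[1:]
        grown[:-1] |= keep[1:] & candidate[:-1]
        changed = bool((grown != keep).any())
        keep = grown
    return keep

magnitude = [0, 60, 120, 70, 10, 65, 0]
edges = hysteresis_1d(magnitude, low=50, high=100)
print(edges)
```

The values 60 and 70 are kept because they touch the strong value 120. The value 65 also lies between the thresholds but is isolated from any strong edge, so it is discarded. Lowering either threshold lets more samples through, which matches the observation that smaller thresholds give more detail.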

Code case :

# Canny

import cv2

import numpy as np

# Import image

img = cv2.imread('./lena.png')#

# The smaller the threshold , The more details

lena1 = cv2.Canny(img, 100, 200)

lena2 = cv2.Canny(img, 64, 128)

cv2.imshow('lena', np.hstack((lena1, lena2)))

cv2.waitKey(0)

cv2.destroyAllWindows()

Copyright notice

This article was written by [@[email protected]]. Please include the original link when reposting. Thanks.

https://yzsam.com/2022/203/202207212104060335.html

边栏推荐

- Qt:键盘事件和鼠标事件、定时器小实例

- windows服务器安全设置怎样操作,要注意什么?

- In his early 30s, he became a doctoral director of Fudan University. Chen Siming: I want to write both codes and poems

- NASA's "the strongest supercomputing in history" was put into use, crushing the old supercomputing overlord Pleiades

- MySQL 服务器进程 mysqld的组成

- Blog forum management system based on ssm+mysql+easyui+

- [mathematics of machine learning 01] countable sets and uncountable sets

- uniapp封装请求

- Relational operator 3 (Gretel software - Jiuye training)

- 机器学习基础篇(5)之形态学

猜你喜欢

【开发教程5】开源蓝牙心率防水运动手环-电池电量检测

Can you solve the pain points of interface testing now

PR

加密市场飙升至1万亿美元以上 不过是假象?加密熊市远未见底

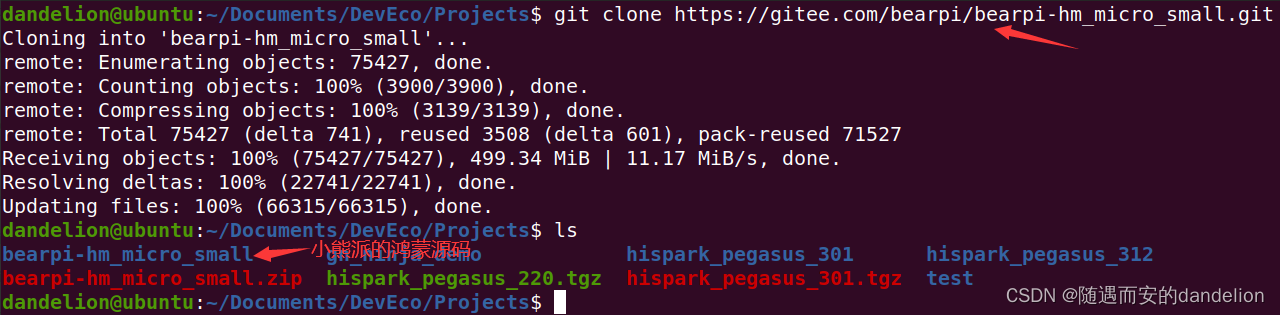

小熊派BearPi-HM_Micro_Small之Hello_World

Multithreading and high concurrency Day10

SCA在得物DevSecOps平台上应用

ctfhub(rce智慧树)

Part 01: distributed registry

Meta: predict the potential impact of the metauniverse from the development and innovation of mobile technology

随机推荐

图神经网络驱动的交通预测技术:探索与挑战

SSM项目整合【详细】

第01篇:分布式注册中心

The difference between request forwarding and request redirection

尾递归调用过程梳理

[C exercise] convert the spaces in the string into "%20"

Figure neural network driven traffic prediction technology: Exploration and challenge

GaitSet源代码解读(三)

9.5~10.5 GHz频段室内离体信道的测量与建模

我国智慧城市场景中物联网终端评测与认证体系研究

Online education course classroom management system based on ssm+mysql+bootstrap

Online shopping mall e-commerce system based on ssm+mysql+bootstrap+jquery

EN 1504-4: structural bonding of concrete structure products - CE certification

加密市场飙升至1万亿美元以上 不过是假象?加密熊市远未见底

Bear pie bearpi HM_ Micro_ Hello of small_ World

多线程与高并发day10

全球地下水模拟与监测:机遇与挑战

Redis distributed lock

Day010 循环结构

面向海洋观监测传感网的移动终端位置隐私保护研究