当前位置:网站首页>Two dimensional convolution Chinese microblog emotion classification project

Two dimensional convolution Chinese microblog emotion classification project

2022-07-21 04:55:00 【Don't wait for shy brother to develop】

Two dimensional convolution Chinese microblog emotion classification project

1、 Data set description

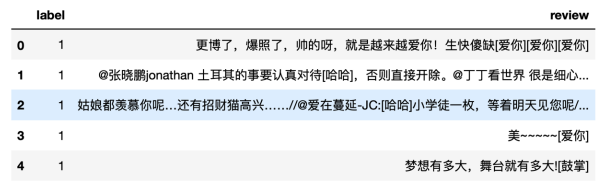

Here is a Chinese microblog emotion classification project . The data set I use here is collected from Sina Weibo 12 Ten thousand data , Half of the positive and negative samples . In the label 1 Express a positive comment ,0 Express negative comments . The data source is https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/weibo_sen ti_100k/intro.ipynb. If you have other data , Other data can also be used .

The data we use this time needs to be processed by ourselves , So we need to segment sentences , After word segmentation, each Words are numbered according to frequency . The word segmentation tool we want to use here is Stuttering participle , Stuttering participle is a good use Chinese word segmentation tool , The installation mode is to open the command prompt , Then type the command :

pip install jieba

After installation, in python Directly in the program import jieba You can use it .

2、 Two dimensional convolution Chinese emotion classification practice

# Install stuttering participle

# pip install jieba

import jieba

import pandas as pd

import numpy as np

from tensorflow.keras.layers import Dense, Input, Dropout

from tensorflow.keras.layers import Conv2D, GlobalMaxPool2D, Embedding, concatenate

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.models import Model

import tensorflow.keras.backend as K

from sklearn.model_selection import train_test_split

import json

# pip install plot_model

from plot_model import plot_model

from matplotlib import pyplot as plt

# Batch size

batch_size = 128

# Training cycle

epochs = 3

# Word vector length

embedding_dims = 128

# Number of filters

filters = 32

# The first half of this data is a positive sample , The second half is a negative sample

data = pd.read_csv('weibo_senti_100k.csv')

# Before looking at the data 5 That's ok

data.head(),data.tail()

# Calculate the number of positive samples

poslen = sum(data['label']==1)

# Calculate the number of negative samples

neglen = sum(data['label']==0)

print(' Number of positive samples :', poslen)

print(' Number of negative samples :', neglen)

# Test the use of stuttering participles

print(list(jieba.cut(' Parents must have Liu Yong's mentality , Keep learning , Keep improving ')))

# Define the participle function , The incoming x Carry out word segmentation

cw = lambda x: list(jieba.cut(x))

# apply Pass in a function , hold cw Function applied to data['review'] Each line

# Save the result of word segmentation to data['words'] in

data['words'] = data['review'].apply(cw)

# Before viewing the data again 5 That's ok

data.head()

# Calculate the maximum number of words in a piece of data

max_length = max([len(x) for x in data['words']])

# The result of printing is 202, The number of words in the longest sentence is not too much

# Then 202 As a standard , Fill in the length of all sentences to 202 The length of

# For example, the longest sentence is 2000, Then some sentences are too long , We can set a smaller value as the standard length of all sentences

# Setting up 1000, So over 1000 Only take the front of the sentence 1000 Word , Insufficient 1000 Fill the sentence with 1000 The length of

print(max_length)

# hold data['words'] All of the list All become string format

texts = [' '.join(x) for x in data['words']]

texts

# View a comment , Now the data is in string format , And words are separated by spaces

# This is to meet the format requirements of the following data processing , The next step is to use Tokenizer Process the data

texts[4]

# Instantiation Tokenizer, Set the maximum number of words in the dictionary to 30000

# Tokenizer It will automatically filter out some symbols, such as :!"#$%&()*+,-./:;<=>[email protected][\\]^_`{|}~\t\n

tokenizer = Tokenizer(num_words=30000)

# Pass in our training data , Build a dictionary , The number of words is set according to the word frequency , The higher the frequency , The smaller the number ,

tokenizer.fit_on_texts(texts)

# Convert words into numbers , The number is greater than 30000 Words will be filtered out

sequences = tokenizer.texts_to_sequences(texts)

# Set the sequence to max_length The length of , exceed max_length Partial abandonment of , Less than max_length Then fill 0

# padding='pre' Fill in the front of the sentence ,padding='post' Fill in after the sentence

X = pad_sequences(sequences, maxlen=max_length, padding='pre')

# X = np.array(sequences)

# Get the dictionary

dict_text = tokenizer.word_index

# Look up words in the dictionary dui yi

dict_text[' Dream ']

# hold token_config Save to json In file , The model prediction stage can use

file = open('token_config.json','w',encoding='utf-8')

# hold tokenizer become json data

token_config = tokenizer.to_json()

# preservation json data

json.dump(token_config, file)

print(X[4])

# Define Tags

# 01 Positive sample ,10 It's a negative sample

positive_labels = [[0, 1] for _ in range(poslen)]

negative_labels = [[1, 0] for _ in range(neglen)]

# Merge tags

Y = np.array(positive_labels + negative_labels)

print(Y)

# Sharding data sets

x_train,x_test,y_train,y_test = train_test_split(X, Y, test_size=0.2)

# Define a functional model

# Define model inputs ,shape-(batch, 202)

sequence_input = Input(shape=(max_length,))

# Embedding layer ,30000 Express 30000 Word , The vector corresponding to each word is 128 dimension

embedding_layer = Embedding(input_dim=30000, output_dim=embedding_dims)

# embedded_sequences Of shape-(batch, 202, 128)

embedded_sequences = embedding_layer(sequence_input)

# embedded_sequences Of shape Turned into (batch, 202, 128, 1)

embedded_sequences = K.expand_dims(embedded_sequences, axis=-1)

# The convolution kernel size is 3, The number of columns must be equal to the word vector length

cnn1 = Conv2D(filters=filters, kernel_size=(3,embedding_dims), activation='relu')(embedded_sequences)

cnn1 = GlobalMaxPool2D()(cnn1)

# The convolution kernel size is 4, The number of columns must be equal to the word vector length

cnn2 = Conv2D(filters=filters, kernel_size=(4,embedding_dims), activation='relu')(embedded_sequences)

cnn2 = GlobalMaxPool2D()(cnn2)

# The convolution kernel size is 5, The number of columns must be equal to the word vector length

cnn3 = Conv2D(filters=filters, kernel_size=(5,embedding_dims), activation='relu')(embedded_sequences)

cnn3 = GlobalMaxPool2D()(cnn3)

# Merge

merge = concatenate([cnn1, cnn2, cnn3], axis=-1)

# Full link layer

x = Dense(128, activation='relu')(merge)

# Dropout layer

x = Dropout(0.5)(x)

# Output layer

preds = Dense(2, activation='softmax')(x)

# Defining models

model = Model(sequence_input, preds)

plot_model(model, dpi=200)

# Define the cost function , Optimizer

model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['acc'])

# Training models

history=model.fit(x_train, y_train,

batch_size=batch_size,

epochs=epochs,

validation_data=(x_test, y_test))

# Save the model

model.save('cnn_model.h5')

3、 Model to predict

from keras.models import load_model

from keras.preprocessing.text import tokenizer_from_json

from keras.preprocessing.sequence import pad_sequences

import jieba

import numpy as np

import json

# load tokenizer

json_file=open('token_config.json','r',encoding='utf-8')

token_config=json.load(json_file)

tokenizer=tokenizer_from_json(token_config)

# Load model

model=load_model('cnn_model.h5')

# Emotional prediction

def predict(text):

# Word segmentation of sentences

cw=list(jieba.cut(text))# [' The weather today ', ' really ', ' good ', ',', ' I want to ', ' Go to ', ' Play a ball ']

# list Turn the string , Between the elements ' ' separate

texts=' '.join(cw) # character string ' The weather today really good , I want to Go to Play a ball '

# print([texts])# [' The weather today really good , I want to Go to Play a ball ']

# Convert words into numbers , The number is greater than 30000 Words will be filtered out

sequences=tokenizer.texts_to_sequences([texts]) # [texts] Is to turn a string into a list

# model.input_shape by (None, 202),202 Is the sequence length when training the model

# Set the sequence to 202 The length of , exceed 202 Partial abandonment of , Less than 202 Then fill 0

sequences=pad_sequences(sequences=sequences

,maxlen=model.input_shape[1] # 202

,padding = 'pre')

# Model to predict shape(1,2)

predict_result=model.predict(sequences)

# Take out predict_result The index corresponding to the maximum value of the element in

result=np.argmax(predict_result)

if(result==1):

print(' Positive emotions ')

else:

print(' Negative emotions ')

if __name__ == '__main__':

predict(' It's a beautiful day , I'm going to play ')

predict(" A large room of people , As a result, the water supply was cut off early in the morning , I collapsed until now [ Crazy ]")

predict(" I'm very happy today ")

# pass

There are a lot of emoticons in the raw data , Such as [ love you ],[ ha-ha ],[ applause ],[ lovely ] etc. , The corresponding words in these emoticons represent the emotion of this sentence to a large extent . So the reason why we have achieved such high accuracy in this project , It has a lot to do with the emoticons here . If you use other data sets to classify emotions , Should also get good results , But it should be hard to get 98% Such a high accuracy .

边栏推荐

猜你喜欢

RNA 24. Timer, an online gadget for TCGA based analysis of immune infiltrating cells in SCI articles

DNA 11. Identify mutation hotspots on the three-dimensional structure of tumor proteins (hotspot3d)

DOM XSS的原理与防护

Access数据库对象包括哪六个?Access与 Excel 最重要的区别是什么?

Isn't it too much to play Gobang in idea?

threejst物体匀速移动

【MySQL】临时表 &视图

![[FPGA tutorial case 32] communication case 2 - FSK modulation signal generation based on FPGA](/img/88/88148d297ad6e234389e0f1da5f7de.png)

[FPGA tutorial case 32] communication case 2 - FSK modulation signal generation based on FPGA

![[MySQL] temporary table & View](/img/10/e68af137f860e38b181a1f75aa4610.png)

[MySQL] temporary table & View

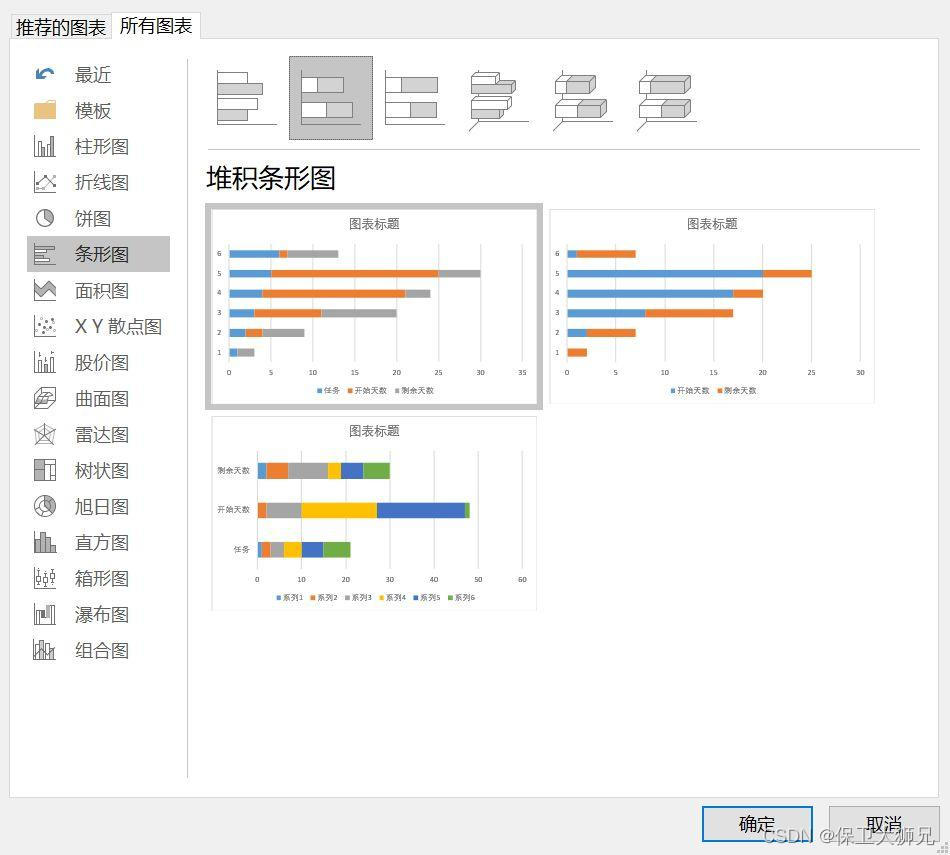

Use Excel to make Gantt chart to track project progress (with drawing tutorial)

随机推荐

《PolarDB for Postgres SQL 》主要讲了什么?

掌握这些插件,分分钟提高你的办公效率90%!

Deep parsing of custom types

动态调试JS代码

IDEA中如何连接数据库并显示数据库信息。

Send a book at the end of the article | Douban 9.4 points, "Hello, world" originated from this book!

【MySQL】临时表 &视图

深度解析字符串和内存函数

À propos des outils d'édition XML

编写一个Book类,该类至少有name和price两个属性。

[leetcode] 150 evaluation of inverse Polish expression

[FPGA tutorial case 32] communication case 2 - FSK modulation signal generation based on FPGA

String length of C language programming skills

How to cultivate real data analysis thinking? Attached with practical cases

The realization of Sanzi game

FigDraw 15. SCI 文章绘图之多组学圈图(OmicCircos)

RNA 20. SCI 文章中单样本免疫浸润分析 (ssGSEA)

RNA 23. Risk factor association diagram of Cox model of expressed genes in SCI articles (ggrisk)

Define a class to describe a circle, then generate a circle object, and finally output the radius, diameter and area.

文末送书|豆瓣9.4分,“hello,world”起源于这本书!