
[PyTorch Deep Learning Practice] Study notes, section 3: gradient descent

2022-07-22 21:05:00 【Creak, creak, gurgle, gurgle】

Getting started

- I took the PyTorch Deep Learning Practice course last March, keeping notes in Youdao Notes and working through the exercises. I haven't touched it for a long time and have forgotten most of it... orz

I went back over an article I wrote at the time, "Completed route: deep learning hyperspectral image classification in a pytorch environment", and decided to tidy up my notes so that I can review quickly. I hope to go through them in order and fill in every gap. Setting a flag here: finish reviewing all of them this week. Let's go!

- This series of blog posts is my study notes for the PyTorch Deep Learning Practice course. Since the teacher has uploaded the PPT slides, I won't copy screenshots from them to explain the theory.

- I suggest watching each lesson, following along with the practice section, and paying attention to the implementation details in the code. Building up bit by bit this way prepares you for more complex projects.

This lesson is one of the most basic: the cost function cost and the gradient grad are defined by hand, which makes the principle easier to understand. In later deep learning projects, the ready-made functions packaged in torch are used directly.
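For reference, the quantities that the hand-written cost and gradient functions compute can be written out as follows. This is a sketch of the standard derivation for the linear model y = x·w with mean squared error; the symbols N, x_n, y_n and α are notation introduced here for illustration, not taken from the course slides.

```latex
\begin{aligned}
\mathrm{cost}(w) &= \frac{1}{N}\sum_{n=1}^{N}\left(x_n w - y_n\right)^2 \\
\frac{\partial\,\mathrm{cost}}{\partial w} &= \frac{1}{N}\sum_{n=1}^{N} 2\,x_n\left(x_n w - y_n\right) \\
w &\leftarrow w - \alpha\,\frac{\partial\,\mathrm{cost}}{\partial w}, \qquad \alpha = 0.01
\end{aligned}
```

The second line is what the gradient function accumulates term by term, and the third line is the weight update performed once per epoch.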

    import matplotlib.pyplot as plt

    # prepare the training set
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # initial guess of the weight
    w = 1.0

    # define the linear model y = w * x
    def forward(x):
        return x * w

    # define the cost function (MSE)
    def cost(xs, ys):
        total = 0
        for x, y in zip(xs, ys):
            y_pred = forward(x)
            total += (y_pred - y) ** 2
        return total / len(xs)  # mean squared error over all samples

    # define the gradient function for gradient descent
    def gradient(xs, ys):
        grad = 0
        for x, y in zip(xs, ys):
            grad += 2 * x * (x * w - y)  # derivative of each sample's squared error with respect to w
        return grad / len(xs)  # average the gradients over all samples

    epoch_list = []
    cost_list = []

    print('predict (before training)', 4, forward(4))
    for epoch in range(100):
        cost_val = cost(x_data, y_data)
        grad_val = gradient(x_data, y_data)
        w -= 0.01 * grad_val  # learning rate 0.01; the updated w is used for the next epoch's predictions
        print('epoch:', epoch, 'w=', w, 'loss=', cost_val)
        epoch_list.append(epoch)
        cost_list.append(cost_val)
    print('predict (after training)', 4, forward(4))

    plt.plot(epoch_list, cost_list)
    plt.ylabel('cost')
    plt.xlabel('epoch')
    plt.show()

Running results:

predict (before training) 4 4.0

predict (after training) 4 7.999998569488525

Supplement

The code above implements gradient descent: each update of w uses the gradient averaged over all samples.
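One way to check that a hand-written gradient like this is correct is to compare it with a finite-difference estimate of the cost. The sketch below is an illustration only: it passes w as an argument instead of using a global, and the helper numerical_gradient and its step size eps are assumptions introduced here, not part of the course code.

```python
# Training set copied from the notes.
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]


def cost(w, xs, ys):
    """Mean squared error of the model y = x * w over all samples."""
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w, xs, ys):
    """Analytic derivative of cost with respect to w."""
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)


def numerical_gradient(w, xs, ys, eps=1e-6):
    """Central finite difference; eps is an illustrative choice."""
    return (cost(w + eps, xs, ys) - cost(w - eps, xs, ys)) / (2 * eps)


analytic = gradient(1.0, x_data, y_data)
numeric = numerical_gradient(1.0, x_data, y_data)
print("analytic:", analytic, "numeric:", numeric)
```

At w = 1.0 the per-sample terms are -2, -8 and -18, so both values should be close to -28/3. If the two numbers disagree noticeably, the derivative formula is the first thing to recheck.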

Stochastic gradient descent (SGD) differs from gradient descent mainly in the following ways:

1. The loss function changes from cost() to loss(). cost() computes the loss over all training data (hence the cost += accumulation inside its loop), whereas loss() computes the loss of a single training sample. In the source code this removes two for loops, one each from the definitions of the loss and the gradient, while the training loop becomes two nested for loops: epochs on the outside, samples on the inside.

2. The gradient function gradient() changes from computing the gradient over all training data to computing the gradient of a single training sample.

3. "Stochastic" in this algorithm mainly means that one training sample is used at a time, and w is updated immediately afterwards. The weight is therefore updated 100 (epochs) x 3 (samples) = 300 times in total, since every sample triggers an update of w. In gradient descent the weight is updated 100 times in total, once per epoch, using the average over all samples.
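The per-sample update described in the list above can be traced by hand for the first epoch. The following is a minimal sketch using the same data, starting weight and learning rate as the notes; the names learning_rate, updates and trace are introduced here for illustration only.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
learning_rate = 0.01

w = 1.0
updates = 0
trace = []  # one (x, y, grad, w_after_update) tuple per sample

for x, y in zip(x_data, y_data):
    grad = 2 * x * (x * w - y)   # gradient of this single sample's loss
    w -= learning_rate * grad    # w changes before the next sample is seen
    updates += 1
    trace.append((x, y, grad, w))
    print(f"x={x} y={y} grad={grad:.4f} w={w:.6f}")
```

The three gradients come out as -2.0, -7.84 and -16.2288, moving w from 1.0 to about 1.260688 within a single epoch. Over 100 epochs this loop body runs 300 times, against 100 updates for the version that averages over all samples.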

    import matplotlib.pyplot as plt

    # prepare the training set
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # initial guess of the weight
    w = 1.0

    # define the linear model y = w * x
    def forward(x):
        return x * w

    # loss of a single sample
    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) ** 2

    # gradient of a single sample's loss, used for SGD
    def gradient(x, y):
        return 2 * x * (x * w - y)

    epoch_list = []
    loss_list = []

    print('predict (before training)', 4, forward(4))
    for epoch in range(100):
        for x, y in zip(x_data, y_data):
            grad = gradient(x, y)
            w = w - 0.01 * grad  # update the weight after every training sample
            print("\tgrad:", x, y, grad)
            l = loss(x, y)
        # l holds the loss of the last sample in this epoch
        print("progress:", epoch, "w=", w, "loss=", l)
        epoch_list.append(epoch)
        loss_list.append(l)
    print('predict (after training)', 4, forward(4))

    plt.plot(epoch_list, loss_list)
    plt.ylabel('loss')
    plt.xlabel('epoch')
    plt.show()

Result:

In this example SGD converges faster: because w is updated after every sample rather than once per epoch, fewer epochs are needed to reach the same loss.
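The convergence claim can be checked without plotting by running both variants side by side. This is a sketch under stated assumptions: the functions train_batch and train_sgd are hypothetical helpers written for this comparison, they take w as a parameter instead of using a global, and both use the same learning rate of 0.01 with samples visited in fixed order, as in the notes.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]


def train_batch(w, epochs, lr):
    """Gradient descent: one update per epoch, using the mean gradient."""
    updates = 0
    for _ in range(epochs):
        grad = sum(2 * x * (x * w - y) for x, y in zip(x_data, y_data)) / len(x_data)
        w -= lr * grad
        updates += 1
    return w, updates


def train_sgd(w, epochs, lr):
    """Per-sample updates in fixed order (no shuffling), as in the notes."""
    updates = 0
    for _ in range(epochs):
        for x, y in zip(x_data, y_data):
            w -= lr * 2 * x * (x * w - y)
            updates += 1
    return w, updates


for epochs in (10, 100):
    w_batch, n_batch = train_batch(1.0, epochs, 0.01)
    w_sgd, n_sgd = train_sgd(1.0, epochs, 0.01)
    print(f"epochs={epochs:3d}  batch: w={w_batch:.6f} ({n_batch} updates)  "
          f"sgd: w={w_sgd:.6f} ({n_sgd} updates)")
```

With the same number of epochs, the per-sample version ends closer to the true weight w = 2 because it has made three times as many updates. Note that this comparison only holds for this small, noise-free dataset; in practice SGD usually draws samples in random order, and per-sample updates cannot be parallelized the way a batch gradient can.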

by petty thief

If you've made it this far, please don't stop: the view from the top of the mountain is even better, and the best part is still to come. Keep going!

边栏推荐

- Redis series 11 -- redis persistence

- Session和Cookie的关系与区别

- 微信小程序Cannot read property 'setData' of null錯誤

- RPM包管理—YUM在线管理--YUM命令

- Django中使用Mysql数据库

- [LTTng学习之旅]------开始之前

- Airtest conducts webui automated testing (selenium)

- BUUCTF闯关日记04--[ACTF2020 新生赛]Include1

- BUUCTF闯关日记--[网鼎杯 2020 青龙组]AreUSerialz

- 人类群星网站收集计划--Michael Kerrisk

猜你喜欢

Multithreading 04 -- order of threads

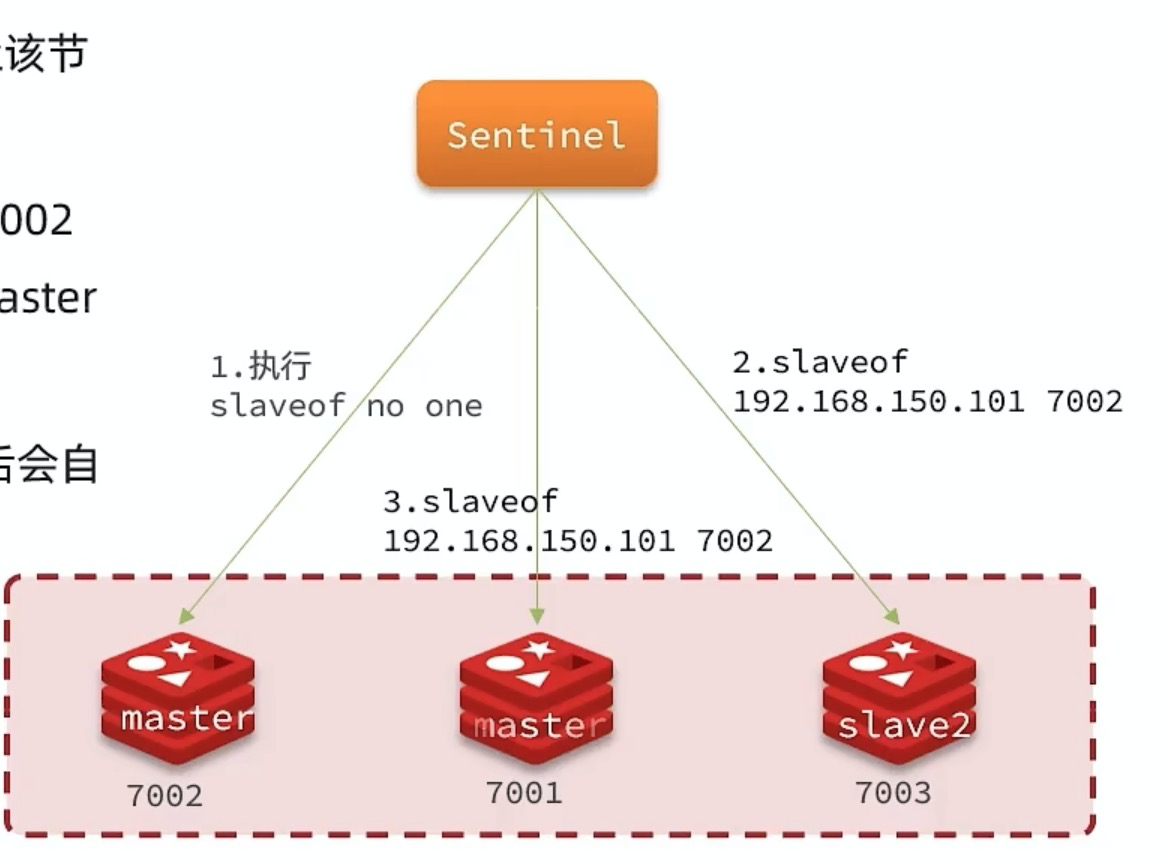

Redis series 13 -- redis Sentinel

Set colSpan invalidation for TD of table

![BUUCTF闯关日记--[MRCTF2020]Ez_bypass1](/img/b9/9243018c1fa4e57d91e01169ccf240.png)

BUUCTF闯关日记--[MRCTF2020]Ez_bypass1

![NSSCTF-01-[SWPUCTF 2021 新生赛]gift_F12](/img/11/89f4ff71a4ac22d2f7a12fd245b91f.png)

NSSCTF-01-[SWPUCTF 2021 新生赛]gift_F12

(七)vulhub专栏:Log4j远程代码执行漏洞复现

多线程01--创建线程和线程状态

Chapter 2: Minio stand-alone version, using the client to back up files

![BUUCTF闯关日记--[极客大挑战 2019]HardSQL1](/img/cf/48ac539ed2911a83abff2dc9c1bbaa.png)

BUUCTF闯关日记--[极客大挑战 2019]HardSQL1

Write a 3D banner using JS

随机推荐

Interview question series (I): data comparison and basic type of disassembly and assembly box

[LTTng学习之旅]------环境搭建

pkg-config 查找库和用于编译

Spark学习之SparkSQL

多线程04--线程的有序性

流程控制—if语句

Redis 系列15--Redis 缓存清理

Seata first met

字符截取命令

application&富文本编辑器&文件上传

[LTTng学习之旅]------Trace View初探

JVM principle and performance tuning

多线程04--线程的原子性、CAS

Commonly used operators of spark

嵌入式系统学习笔记

项目中手机、姓名、身份证信息等在日志和响应数据中脱敏操作

[LTTng学习之旅]------LTTng的Feature

Airtest conducts webui automated testing (selenium)

项目上线,旧数据需要修改,写SQL太麻烦,看Excel配合简单SQL的强大功能

字符处理命令