当前位置:网站首页>Function principle of pytoch network training process: source code analysis optimizer.zero_ grad()loss.backward()optimizer.step()

Function principle of pytoch network training process: source code analysis optimizer.zero_ grad()loss.backward()optimizer.step()

2022-07-22 20:32:00 【Dull as dull】

Function principle of common parameter training process

1 executive summary

In use pytorch Training model , Usually in the loop epoch In the process of , Constantly cycle through all training data sets .

Used in turn optimizer.zero_grad(),loss.backward() and optimizer.step() Three functions , As shown below :

model = MyModel()

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9, weight_decay=1e-4)

for epoch in range(1, epochs):

for i, (inputs, labels) in enumerate(train_loader):

output= model(inputs)

loss = criterion(output, labels)

# compute gradient and do SGD step

optimizer.zero_grad()

loss.backward()

optimizer.step()

( Module with updated learning rate lr_scheduler It's unnecessary, so I won't talk about it here for the time being , You can see the following articles if you want to know :pytorch Adjust the learning rate dynamically , The learning rate drops automatically , according to loss falling Mainly focused on The first 6 section )

In general , The function of these three functions is to return the gradient to zero first (optimizer.zero_grad()), Then the gradient of each parameter is calculated by back propagation (loss.backward()), Finally, a one-step parameter update is performed by gradient descent (optimizer.step()).

Next, we will understand the specific implementation process of these three functions through the source code . Before that , First, briefly explain the parameter variables commonly used in functions :

param_groups:Optimizer classWhen instantiating, aparam_groups list, The elements in the list areparam_groupDictionaries , hereparam_groupThe number of dictionaries isparam_groups listThe length of , That's the variablenum_groupsThe meaning of .param_groupDictionaries : The length of this dictionary is 6, Containsparams,lr,momentum,dampening,weight_decay,nesterovthis 6 Group key value pairs .param_group['params']Key values in the dictionaryparams: An iterator consisting of model parameters , Model parameters are instantiationsOptimizer classPass in and register the member attribute in the model_parametersin , Each parameter is atorch.nn.parameter.Parameterobject .

2 optimizer.zero_grad()

The code is as follows ( Example ):

def zero_grad(self):

r"""Clears the gradients of all optimized :class:`torch.Tensor` s."""

for group in self.param_groups:

for p in group['params']:

if p.grad is not None:

p.grad.detach_()

p.grad.zero_()

optimizer.zero_grad() The function will traverse all parameters of the model , The parameters mentioned here are all described in the previous general introduction torch.nn.parameter.Parameter Type variable . That is, after that p. adopt p.grad.detach_() Method truncates the gradient flow of back propagation , Re pass p.grad.zero_() The function sets the gradient value of each parameter to 0, That is, the last gradient record is cleared .

Because the training process usually uses mini-batch Method , call backward() The gradient must be cleared before the function , Because if the gradient is not cleared ,pytorch The gradient calculated last time and the gradient calculated this time will be accumulated .

benefits

When our hardware limitations cannot use larger bachsize when , The code is constructed into multiple batchsize Do it once. optimizer.zero_grad() Function call , This allows you to use multiple calculations of smaller bachsize Instead of , More convenient .

Disadvantage

Clear the gradient every time : Come in one batch The data of , Calculate the primary gradient , Update the network .

summary

- Under normal circumstances , Every

batchIt needs to be called onceoptimizer.zero_grad()function , Clear the gradient of the parameter ; - You can have more than one

batchCall it onceoptimizer.zero_grad()function , This is equivalent to increasingbatch_size.

3 loss.backward()

PyTorch Back propagation of ( namely tensor.backward()) It's through autograd package To achieve ,autograd package Will be based on tensor The corresponding gradient is automatically calculated by mathematical operation .

say concretely ,torch.tensor yes autograd package The base class , If you set tensor Of requires_grads by True, Will start tracking this tensor All the operations above .

If you use tensor.backward(), All gradients will be calculated automatically ,tensor The gradient of will add up to its .grad Go inside . If it doesn't work tensor.backward() Words , The gradient value will be None, therefore loss.backward() To write in optimizer.step() Before .

4 optimizer.step()

With SGD For example ,torch.optim.SGD().step() Source code is as follows :

def step(self, closure=None):

"""Performs a single optimization step. Arguments: closure (callable, optional): A closure that reevaluates the model and returns the loss. """

loss = None

if closure is not None:

loss = closure()

for group in self.param_groups:

weight_decay = group['weight_decay']

momentum = group['momentum']

dampening = group['dampening']

nesterov = group['nesterov']

for p in group['params']:

if p.grad is None:

continue

d_p = p.grad.data

if weight_decay != 0:

d_p.add_(weight_decay, p.data)

if momentum != 0:

param_state = self.state[p]

if 'momentum_buffer' not in param_state:

buf = param_state['momentum_buffer'] = torch.clone(d_p).detach()

else:

buf = param_state['momentum_buffer']

buf.mul_(momentum).add_(1 - dampening, d_p)

if nesterov:

d_p = d_p.add(momentum, buf)

else:

d_p = buf

p.data.add_(-group['lr'], d_p)

return loss

step() function The function of is to perform an optimization step , The value of the parameter is updated by the gradient descent method . Because gradient descent is based on gradient , So in the implementation optimizer.step() function It should be carried out before loss.backward() function To calculate the gradient .

Be careful :

Different optimizers .step() The specific process of the function is basically similar

The main difference lies in the different optimization methods , Will pass the calculated gradient , Use different formulas to update parameters , That's it

How the specific formula is different or what the difference is can be referred to

LAST reference

optimizer.zero_grad(),loss.backward(),optimizer.step() How it works | Code farm home

边栏推荐

猜你喜欢

LeetCode160 & LeetCode141——double pointers to solve the linked list

栈实现(C语言)

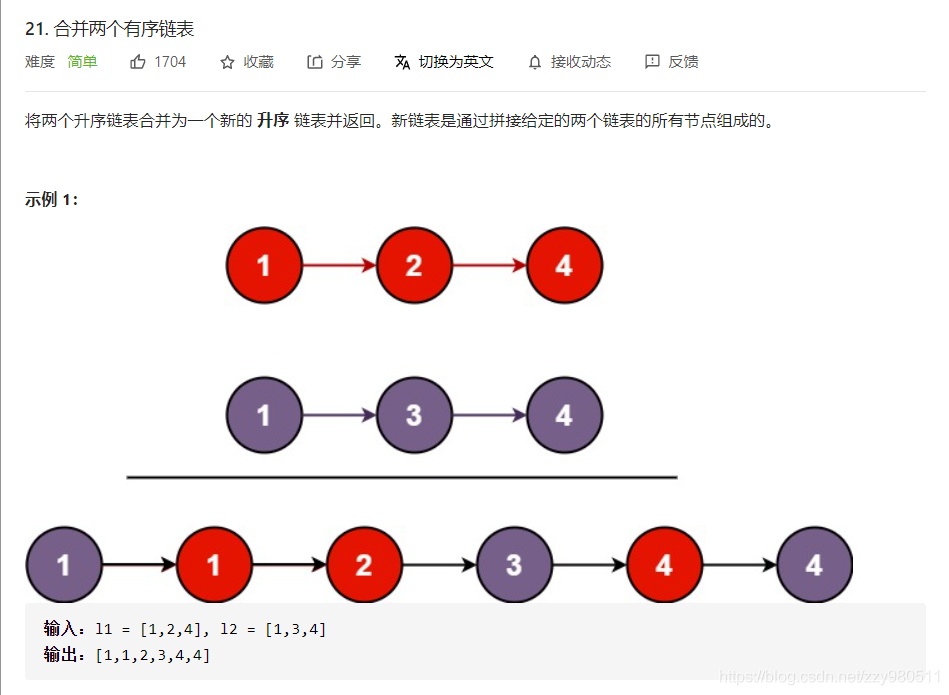

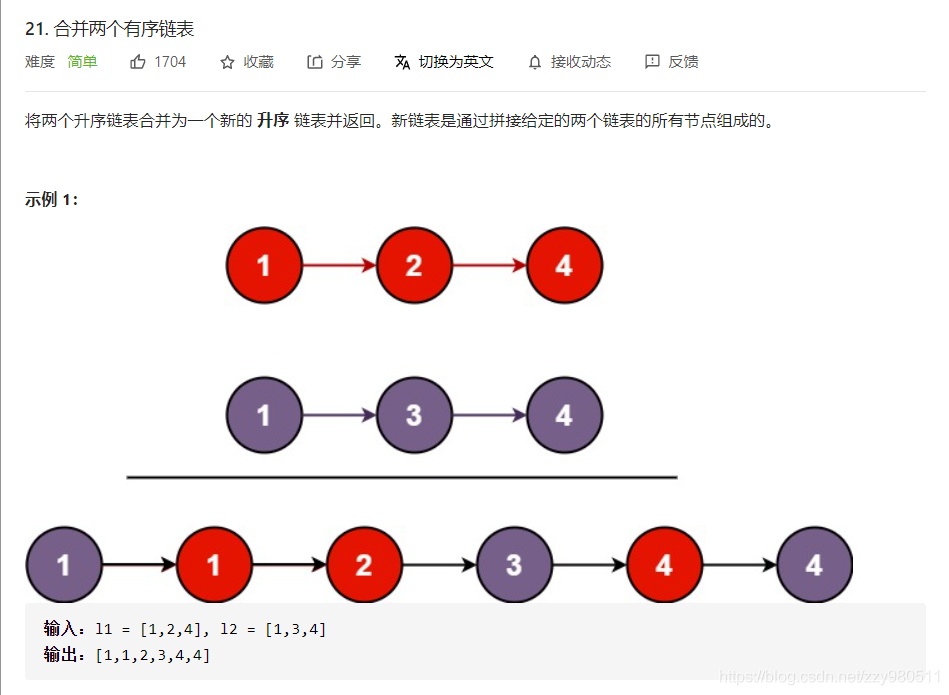

LeetCode21——Merge two sorted lists——Iteration or recursion

SSM framework integration

LeetCode21——Merge two sorted lists——Iteration or recursion

Her power series 6 - Yang Di 1: when the girl grows up, she can be good at mathematics and chemistry, and scientific research can be very fresh

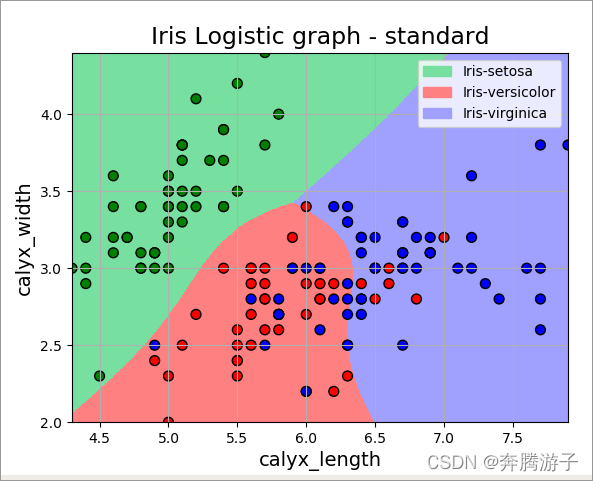

Introduction to machine learning: Logistic regression-2

她力量系列六丨杨笛一:女孩长大后数理化可以很好,科研可以很鲜活

json按格式逐行输出到文件

Rag summary

随机推荐

进程fork

Pre training weekly 39: deep model, prompt learning

JDBC异常SQLException的捕获与处理

Class template parsing

mysql索引

1053 Path of Equal Weight (30 分)

1038 Recover the Smallest Number (30 分)

docker搭建mysql主从复制

Unix C语言POSIX的线程创建、获取线程ID、汇合线程、分离线程、终止线程、线程的比较

AMBert

LeetCode53——Maximum Subarray——3 different methods

Unix C语言 POSIX 互斥锁 线程同步

Advent of code 2020 -- 登机座位问题

Stack implementation (C language)

7-3 Size of Military Unit (25 分)

1057 Stack (30 分)

没有人知道TikTok的最新流行产品Pink Sauce中含有什么成分

1045 Favorite Color Stripe (30 分)

RP file Chrome browser view plug-in

Her power series III holds the current, adheres to love, and is tied to the scientific research road of food image recognition