DataX environment deployment and test cases

2022-07-22 00:20:00 【Slim of the Kobayashi family】

DataX overview (adapted from the official documentation)

DataX

DataX is an offline data synchronization tool widely used within Alibaba Group. It provides efficient data synchronization between heterogeneous data sources, including MySQL, SQL Server, Oracle, PostgreSQL, HDFS, Hive, HBase, OTS, and ODPS.

Features

As a data synchronization framework, DataX abstracts synchronization between different data sources into two kinds of plugins: a Reader plugin that reads data from the source, and a Writer plugin that writes data to the target. In theory, the DataX framework can therefore synchronize data for any type of data source. The plugin system also works as an ecosystem: every newly added data source can immediately exchange data with all the data sources that are already supported.
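The Reader/Writer split described above can be sketched in a few lines of Python. This is only an illustration of the idea and not DataX's actual plugin API (DataX plugins are written in Java); the names ListReader, ListWriter, and sync are hypothetical.

```python
from __future__ import print_function

# Illustrative sketch only: ListReader, ListWriter and sync are hypothetical
# names, not part of DataX.


class ListReader(object):
    """Reader: yields records (tuples) from an in-memory source."""

    def __init__(self, rows):
        self.rows = rows

    def read(self):
        for row in self.rows:
            yield tuple(row)


class ListWriter(object):
    """Writer: collects records into an in-memory target."""

    def __init__(self):
        self.target = []

    def write(self, record):
        self.target.append(record)


def sync(reader, writer):
    """Framework: move every record from any reader to any writer."""
    count = 0
    for record in reader.read():
        writer.write(record)
        count += 1
    return count


reader = ListReader([["hello", 1], ["datax", 2]])
writer = ListWriter()
copied = sync(reader, writer)
print(copied, writer.target)
```

Because sync depends only on read() and write(), a newly added reader can be paired with every existing writer, which is the interworking property described above.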

System Requirements

Linux (no specific distribution required)

JDK (1.8 or later; 1.8 recommended)

Python (2.6.x recommended). Note: Python 2.x is required; running DataX with Python 3.x causes errors.

Apache Maven 3.x (only needed to compile DataX from source)

Environment deployment

JDK deployment

Configuration

vi /etc/profile

#JDK1.8

JAVA_HOME=/usr/local/jdk

JRE_HOME=/usr/local/jdk/jre

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export JAVA_HOME JRE_HOME CLASSPATH PATH

Reload the environment variables so that the changes take effect.

source /etc/profile

Test

java -version

Python 2.x environment

CentOS Linux comes with Python 2.x preinstalled.

Test

python
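As a quick complement to starting the interpreter by hand, the version requirement can be checked with a short script. This is a minimal sketch that encodes only the rule stated in this article (Python 2.x required); the function name is_supported_python is hypothetical, and other DataX releases may have different requirements.

```python
from __future__ import print_function
import sys


def is_supported_python(version_info):
    """Return True if the interpreter is on the 2.x line, as this article requires."""
    return version_info[0] == 2


current = sys.version_info
print("Python %d.%d supported: %s"
      % (current[0], current[1], is_supported_python(current)))
```

Run it with the same python command that will later be used to start datax.py, so that the check applies to the right interpreter.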

Maven deployment

Configuration

vi /etc/profile

#MAVEN

M3_HOME=/usr/local/apache-maven-3.3.9

export PATH=$M3_HOME/bin:$PATH

Reload the environment variables so that the changes take effect.

source /etc/profile

Test

mvn -v

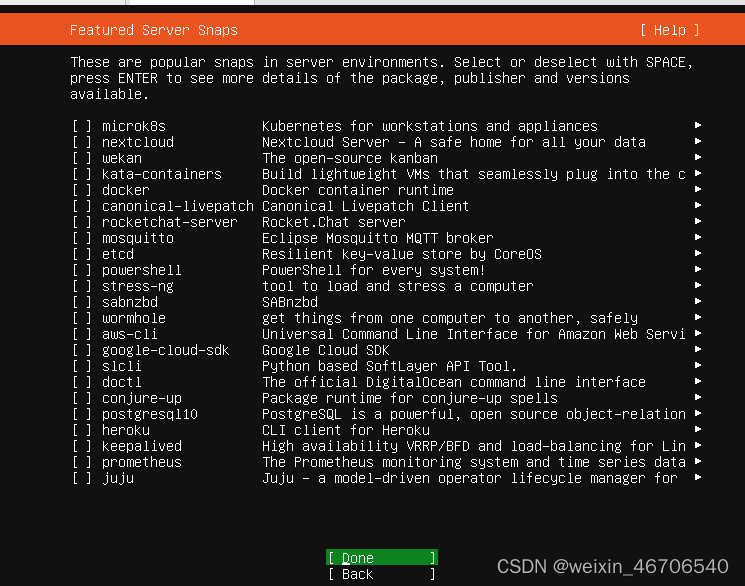

Quick Start

Tool deployment

Method 1: Download the DataX toolkit directly (recommended): DataX download address

After downloading, extract the package to a local directory, change into the bin directory, and run a synchronization job:

$ cd {YOUR_DATAX_HOME}/bin

$ python datax.py {YOUR_JOB.json}

Self-test script: python {YOUR_DATAX_HOME}/bin/datax.py {YOUR_DATAX_HOME}/job/job.json

For example:

MY_DATAX_HOME=/usr/local/datax

cd /usr/local/datax

./bin/datax.py ./job/job.json

If the self-test job completes without errors, the DataX environment has been set up successfully.

Method 2: Download the DataX source code and compile it yourself: DataX source code

(1) Download the DataX source code:

$ git clone git@github.com:alibaba/DataX.git

(2) Package with Maven:

$ cd {DataX_source_code_home}

$ mvn -U clean package assembly:assembly -Dmaven.test.skip=true

When packaging succeeds, the log ends with:

[INFO] BUILD SUCCESS

[INFO] -----------------------------------------------------------------

[INFO] Total time: 08:12 min

[INFO] Finished at: 2015-12-13T16:26:48+08:00

[INFO] Final Memory: 133M/960M

[INFO] -----------------------------------------------------------------

After successful packaging, the DataX package is located in {DataX_source_code_home}/target/datax/datax/ and has the following structure:

$ cd {DataX_source_code_home}

$ ls ./target/datax/datax/

bin conf job lib log log_perf plugin script tmp

Configuration example: read data from a stream and print it to the console
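A job of this kind pairs the streamreader plugin with the streamwriter plugin. The sketch below builds such a job file from Python and prints it as JSON. The column values, the record count, and the function name build_stream_job are illustrative assumptions; the plugin and parameter names follow the DataX quick start, so verify them against the version you have installed.

```python
from __future__ import print_function
import json


def build_stream_job(record_count=10, channel=1):
    """Build a DataX job dict: streamreader generates rows, streamwriter prints them."""
    return {
        "job": {
            "setting": {"speed": {"channel": channel}},
            "content": [
                {
                    "reader": {
                        "name": "streamreader",
                        "parameter": {
                            # Number of records generated per slice.
                            "sliceRecordCount": record_count,
                            # Constant columns; the values are illustrative.
                            "column": [
                                {"type": "long", "value": "10"},
                                {"type": "string", "value": "hello, DataX"},
                            ],
                        },
                    },
                    "writer": {
                        "name": "streamwriter",
                        # print=True asks the writer to echo records to the console.
                        "parameter": {"encoding": "UTF-8", "print": True},
                    },
                }
            ],
        }
    }


job_text = json.dumps(build_stream_job(), indent=4, sort_keys=True)
print(job_text)
```

Saving the printed JSON under {YOUR_DATAX_HOME}/job/ (for example as stream2stream.json, a hypothetical file name) and passing that file to datax.py should print the generated records to the console.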

Support Data Channels

DataX now has a fairly comprehensive plugin system: mainstream RDBMS databases, NoSQL stores, and big data computing systems are all supported. For the currently supported data sources, see the DataX data source reference guide.

Hive and MySQL interaction example

Import a MySQL table into Hive

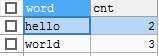

0. Prepare test data in MySQL

Use the test database.

Create the test_table table.

Table structure

Table data

1. Create the table in Hive (stored as a text file)

hive> create table mysql_table(word string, cnt int) row format delimited fields terminated by ',' STORED AS TEXTFILE;

OK

Time taken: 0.194 seconds

hive> select * from mysql_table limit 10;

OK

Time taken: 0.162 seconds

2. Under {YOUR_DATAX_PATH}/job, write the mysql2hive.json configuration file

    {
        "job": {
            "setting": {
                "speed": {
                    "channel": 3
                },
                "errorLimit": {
                    "record": 0,
                    "percentage": 0.02
                }
            },
            "content": [
                {
                    "reader": {
                        "name": "mysqlreader",
                        "parameter": {
                            "username": "root",
                            "password": "123",
                            "column": [
                                "word",
                                "cnt"
                            ],
                            "splitPk": "cnt",
                            "connection": [
                                {
                                    "table": [
                                        "test_table"
                                    ],
                                    "jdbcUrl": [
                                        "jdbc:mysql://192.168.231.1:3306/test"
                                    ]
                                }
                            ]
                        }
                    },
                    "writer": {
                        "name": "hdfswriter",
                        "parameter": {
                            "defaultFS": "hdfs://192.168.10.3:9000",
                            "fileType": "text",
                            "path": "/user/hive/warehouse/mysql_table",
                            "fileName": "mysql_table",
                            "column": [
                                {
                                    "name": "word",
                                    "type": "string"
                                },
                                {
                                    "name": "cnt",
                                    "type": "int"
                                }
                            ],
                            "writeMode": "append",
                            "fieldDelimiter": ",",
                            "compress": "gzip"
                        }
                    }
                }
            ]
        }
    }
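Two details of the configuration above are easy to get wrong: the reader and writer column lists are matched by position, so they need the same length and order, and the writer's fieldDelimiter has to match the delimiter declared in the Hive table definition. The sketch below checks both on a condensed copy of the job; the function name check_job is hypothetical, and the checks are a convenience rather than anything DataX itself provides.

```python
from __future__ import print_function


def check_job(job, hive_delimiter=","):
    """Return a list of problems found in a MySQL-to-HDFS job dict."""
    problems = []
    content = job["job"]["content"][0]
    reader_cols = content["reader"]["parameter"]["column"]
    writer_param = content["writer"]["parameter"]
    writer_cols = [col["name"] for col in writer_param["column"]]

    # Columns are paired by position, so the counts must agree.
    if len(reader_cols) != len(writer_cols):
        problems.append("reader has %d columns but writer has %d"
                        % (len(reader_cols), len(writer_cols)))
    # The HDFS file must use the delimiter the Hive table expects.
    if writer_param.get("fieldDelimiter") != hive_delimiter:
        problems.append("fieldDelimiter does not match the Hive table delimiter")
    return problems


# Condensed copy of the job above, keeping only the fields being checked.
job = {"job": {"content": [{
    "reader": {"name": "mysqlreader",
               "parameter": {"column": ["word", "cnt"]}},
    "writer": {"name": "hdfswriter",
               "parameter": {
                   "column": [{"name": "word", "type": "string"},
                              {"name": "cnt", "type": "int"}],
                   "fieldDelimiter": ","}},
}]}}

problems = check_job(job)
print(problems)
```

To check the real file instead, load it with json.load and pass the resulting dict to check_job.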

3. Run the job

cd /usr/local/datax

./bin/datax.py ./job/mysql2hive.json
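When the job is launched from another script, for example a scheduled task, the same command can be assembled programmatically. This is a minimal sketch; the helper name datax_command is hypothetical, and the paths are the ones used in this article.

```python
from __future__ import print_function
import posixpath


def datax_command(datax_home, job_file, python_bin="python"):
    """Build the argument list for running a DataX job with datax.py."""
    return [
        python_bin,
        posixpath.join(datax_home, "bin", "datax.py"),
        posixpath.join(datax_home, "job", job_file),
    ]


cmd = datax_command("/usr/local/datax", "mysql2hive.json")
print(" ".join(cmd))
# To actually launch the job and inspect its exit code:
#   import subprocess
#   exit_code = subprocess.call(cmd)
```

Passing the interpreter explicitly through python_bin makes it easy to point at a Python 2.x binary on machines where python resolves to another version.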

4. Check whether the Hive table now contains data

select * from mysql_table;

Try and try and try again !!!
