Deep learning: a deep understanding of normalization and BatchNorm (theory)

2022-07-20 19:31:00 【Program meow;】

Normalization

The role of normalization

Normalization produces similarly distributed data, which makes the model converge faster and allows a larger learning rate.

Why do we need normalization

Before training a neural network, we need to normalize the input data. The reason is that the learning process is essentially one of learning the data distribution: once the training data and the test data are distributed differently, the network's generalization ability drops sharply. In addition, if the distribution of each training batch differs, the network has to adapt to a different distribution in every iteration, which greatly slows training. That is why all the data needs a normalization preprocessing step.

Normalization also makes deep neural networks trainable. Without it, the deeper the network, the smaller the gradients tend to become, and different layers may fail to converge at the same pace.

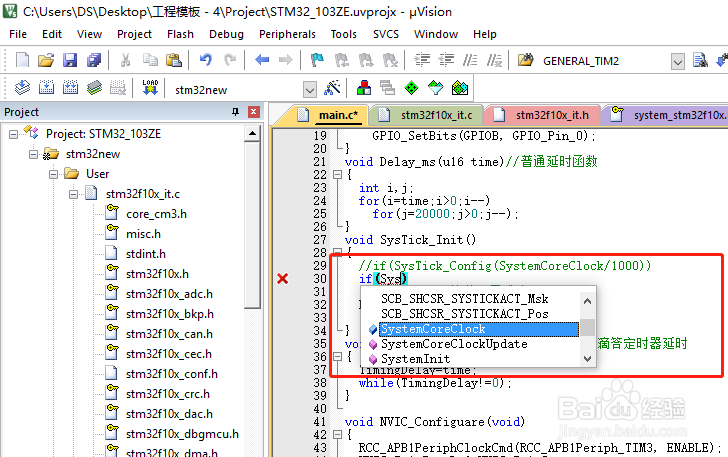

How BatchNorm implements normalization

B is one mini-batch of inputs x_1, ..., x_m.

The parameters to learn are δ (scale) and β (shift). Many references write the scale as γ.

$$
\begin{aligned}
\mu_B &= \frac{1}{m} \sum_{i=1}^{m} x_i \\
\sigma_B^2 &= D(X) \\
\hat{x}_i &= \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \varepsilon}} \\
y_i &= \delta \hat{x}_i + \beta
\end{aligned}
$$
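The four equations can be sketched in plain Python for a single feature. This is only an illustration: the function name `batchnorm_forward`, the default `eps`, and reading D(X) as the population variance (dividing by m) are assumptions, not something the article specifies.

```python
import math

def batchnorm_forward(batch, delta=1.0, beta=0.0, eps=1e-5):
    """Apply the four BatchNorm equations to one feature over a mini-batch."""
    m = len(batch)
    mu_b = sum(batch) / m                              # batch mean
    # Batch variance D(X); assumed here to divide by m (population variance).
    var_b = sum((x - mu_b) ** 2 for x in batch) / m
    # Normalize each input with the batch statistics.
    x_hat = [(x - mu_b) / math.sqrt(var_b + eps) for x in batch]
    # Learnable scale (delta) and shift (beta).
    y = [delta * xh + beta for xh in x_hat]
    return y, mu_b, var_b

batch = [10.0, 12.0, 14.0, 16.0]
y, mu_b, var_b = batchnorm_forward(batch)
```

With δ = 1 and β = 0 the output has zero mean and (up to ε) unit variance. Other values of δ and β let the layer choose a different scale and offset.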

The use of normalization

1. Order of use

Convolution → Normalization → Activation → Pooling

2. Training and testing behave differently

During training, each batch is normalized with its own mean and variance.

If testing were done the same way, a batch might contain only a single sample, which cannot be normalized.

If the test data is not normalized at all, the output will be very different from what the network saw in training (the training set is normalized but the test set is not).

At test time, the mean and variance should therefore be those of the whole data set.

The global mean and variance are updated as follows:

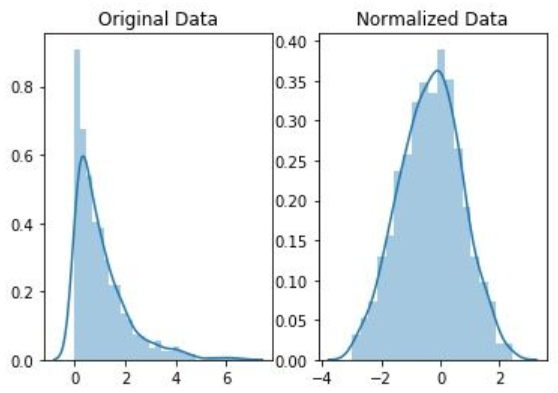
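The figure's exact update rule is not reproduced in the text. A common choice, assumed here, is an exponential moving average of the per-batch statistics. The class name `RunningBatchNorm`, the `momentum` value of 0.1, and the initial statistics are all hypothetical, and the scale and shift parameters are left out for brevity.

```python
import math

class RunningBatchNorm:
    """Toy single-feature BatchNorm that tracks global statistics (hypothetical helper)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum   # assumed weight given to the newest batch
        self.eps = eps
        self.running_mean = 0.0    # assumed initial global mean
        self.running_var = 1.0     # assumed initial global variance

    def forward(self, batch, training):
        if training:
            m = len(batch)
            mean = sum(batch) / m
            var = sum((x - mean) ** 2 for x in batch) / m
            # Exponential moving average of the batch statistics.
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # Test time: use the accumulated statistics, so a batch of one still works.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / math.sqrt(var + self.eps) for x in batch]

bn = RunningBatchNorm()
for _ in range(200):
    bn.forward([10.0, 12.0, 14.0, 16.0], training=True)

# A single test sample is normalized with the global statistics.
single = bn.forward([13.0], training=False)
```

After enough training batches the running statistics approach the data's mean and variance, so a lone test sample is normalized the same way the training data was.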

The difference between normalization and standardization

Plain standardization and BatchNorm-style normalization differ in the final linear transformation (which is why the normalization layer needs that transformation).

Both normalization and standardization rescale data, but toward different targets. Normalization only compresses the original range into a fixed interval such as [0, 1] or [-1, 1]. Standardization re-centers the data to zero mean and unit variance, so the result follows a new, standardized distribution and is not confined to a fixed interval.

Normalization only scales the range of the data; standardization makes the data conform to a new distribution with zero mean and unit variance (as in the standard normal distribution).

PyTorch: resolving the error "RuntimeError: running_mean should contain * elements not *" (Mo men, CSDN blog)

Fundamentals: an empirical study of where to place the BN layer in a neural network (Zhihu, zhihu.com)

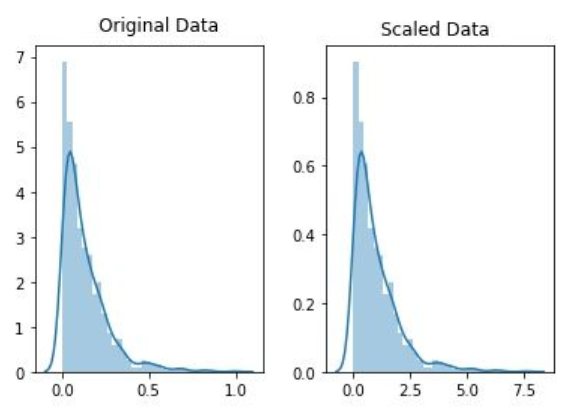

e.g.

1. Normalization

2. Standardization

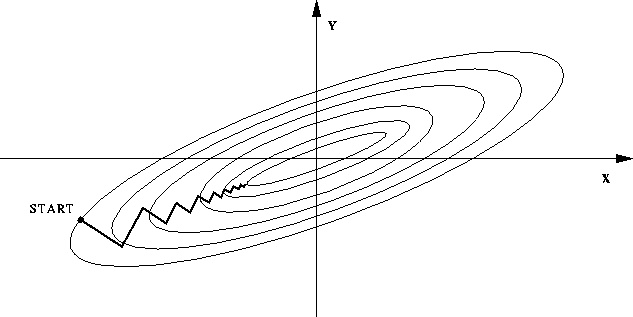
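The two operations can be compared in a small sketch. It assumes the usual definitions, which the figure may or may not match exactly: normalization as min-max scaling into [0, 1], and standardization as the z-score. The helper names and the sample data are illustrative, and the input is assumed not to be constant (otherwise both would divide by zero).

```python
import math

def min_max_normalize(values):
    """Rescale values linearly into [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift and scale values to zero mean and unit variance (z-score)."""
    m = len(values)
    mean = sum(values) / m
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / m)
    return [(v - mean) / std for v in values]

data = [10.0, 12.0, 14.0, 16.0, 48.0]
normalized = min_max_normalize(data)   # every value lies in [0, 1]
standardized = standardize(data)       # mean 0, variance 1, no fixed range
```

Both are linear maps of the data, but only the min-max version guarantees a bounded range. In this sample the outlier 48.0 has a standardized value above 1.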

Why can normalization speed up the convergence of the model

Suppose there are two variables, both uniformly distributed: x1 ranges over [10000, 20000] and x2 over [1, 2]. Many points then lie close to a single line, which we call L. If we now build a classifier, x2 can almost be ignored; it is killed off through no fault of its own, purely because of the difference in scale (the so-called units problem). Even if x2 is not killed off, consider solving the problem by gradient descent. If the descent direction at some step does not lie along the line L, that step will almost certainly fail to reduce the loss. The result is non-convergence or slow convergence.
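The effect can be reproduced with a small experiment. It is only a sketch, and it uses a least-squares regression rather than a classifier to keep the code short. The target function, the learning rates, and the step count are all assumptions chosen for illustration.

```python
import random

def gradient_descent(features, targets, lr, steps):
    """Fit y = w1*f1 + w2*f2 + b by batch gradient descent; return the final MSE."""
    w1 = w2 = b = 0.0
    n = len(targets)
    for _ in range(steps):
        g1 = g2 = gb = 0.0
        for (f1, f2), y in zip(features, targets):
            err = w1 * f1 + w2 * f2 + b - y
            g1 += 2 * err * f1 / n
            g2 += 2 * err * f2 / n
            gb += 2 * err / n
        w1 -= lr * g1
        w2 -= lr * g2
        b -= lr * gb
    return sum((w1 * f1 + w2 * f2 + b - y) ** 2
               for (f1, f2), y in zip(features, targets)) / n

def min_max(column):
    """Rescale one feature column into [0, 1]."""
    lo, hi = min(column), max(column)
    return [(v - lo) / (hi - lo) for v in column]

rng = random.Random(0)
x1 = [rng.uniform(10000, 20000) for _ in range(200)]
x2 = [rng.uniform(1, 2) for _ in range(200)]
# Hypothetical target in which both features contribute on a similar scale.
y = [0.0003 * u + 2.0 * v for u, v in zip(x1, x2)]

raw = list(zip(x1, x2))
scaled = list(zip(min_max(x1), min_max(x2)))

# Raw features: the step size must be tiny, or the x1 direction diverges.
loss_raw = gradient_descent(raw, y, lr=1e-9, steps=1000)
# Normalized features: a much larger step size is stable.
loss_scaled = gradient_descent(scaled, y, lr=0.1, steps=1000)
```

With the same number of steps, the normalized run should end at a far smaller loss. The raw run stalls because the only step size that keeps the x1 weight stable is too small to move the x2 weight and the bias.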

[Figures not preserved: no normalization vs. after normalization]
