
Kubernetes Cloud Native in Practice: High-Availability Deployment Architecture

2022-07-22 02:47:00 【Young】

Kubernetes core components

Kubernetes has many components, and introducing all of them completely and clearly would probably fill a thick book. For this hands-on series it is enough to remember the core ones: two kinds of nodes, three kinds of IP, and four kinds of resources.

Two kinds of nodes

The two kinds of nodes are the control-plane (master) nodes and the worker nodes. The master node runs several core components that deserve attention:

- kube-apiserver: the single entry point for creating, deleting, updating, and querying resources. It also provides authentication, authorization, access control, and API registration and discovery. All worker nodes interact with the master nodes only through the apiserver.

- etcd: a distributed key-value database that stores the state of the entire cluster.

- kube-scheduler: responsible for resource scheduling; it assigns Pods to suitable machines according to the configured scheduling policy.

- kube-controller-manager: maintains the state of the cluster and acts as the automation control center for resource objects, covering fault detection, automatic scaling, rolling updates, and the service account and token controllers.

Worker node components:

- kubelet: creates, starts, and stops the containers that belong to Pods, and works closely with the master nodes to implement the basic cluster management functions.

- kube-proxy: provides in-cluster service discovery and load balancing for Services.

- Container runtime: handles image management and actually runs Pods and containers (through the CRI).

Three kinds of IP

Node IP: the IP address of a node, that is, the address of the physical network interface of each node in the Kubernetes cluster.

Pod IP: the IP address of a Pod, allocated from a virtual layer-2 network.

Cluster IP: the IP address of a Service; it is also a virtual IP.
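To make the distinction concrete, here is a minimal Python sketch that sorts an address into one of the three kinds by checking which range it falls in. The CIDR ranges are assumptions chosen for illustration: the node range is guessed from the 172.30.15.x host addresses used in this article, and the Pod and Service ranges are placeholders that you should replace with the values configured for your own cluster.

```python
import ipaddress

# Assumed example ranges; substitute the CIDRs configured for your own cluster.
NETWORKS = {
    "Node IP": ipaddress.ip_network("172.30.15.0/24"),    # assumed host network
    "Pod IP": ipaddress.ip_network("10.233.64.0/18"),     # assumed Pod CIDR
    "Cluster IP": ipaddress.ip_network("10.233.0.0/18"),  # assumed Service CIDR
}


def classify_ip(address: str) -> str:
    """Return which of the three address kinds an IP belongs to."""
    ip = ipaddress.ip_address(address)
    for kind, network in NETWORKS.items():
        if ip in network:
            return kind
    return "unknown"


if __name__ == "__main__":
    for addr in ("172.30.15.10", "10.233.70.5", "10.233.0.1", "8.8.8.8"):
        print(addr, "->", classify_ip(addr))
```

Only the Node IP range exists on the physical network; the other two ranges are virtual and are meaningful only inside the cluster.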

Four kinds of resources

| Category | Objects |
|---|---|
| Resource objects | Pod, ReplicaSet, ReplicationController, Deployment, StatefulSet, DaemonSet, Job, HorizontalPodAutoscaler |
| Configuration objects | Node, Namespace, Service, Secret, ConfigMap, Ingress, Label, ServiceAccount |
| Storage objects | Volume, PersistentVolume |
| Policy objects | SecurityContext, ResourceQuota, LimitRange |

Even though only the core components are listed here, there are still quite a few of them, and they are not easy to memorize in one go. That is fine: it is enough to be aware of them for now. They come up repeatedly in the hands-on chapters, and you will remember them by using them.

Next, let's look at the Kubernetes high-availability architecture.

High-availability architecture

High availability in Kubernetes mainly means high availability of the control plane (master): several sets of master node components and etcd components are deployed, and the worker nodes connect to the master nodes through a load balancer.

There are usually two HA architectures:

High-availability architecture one: etcd is co-located (stacked) with the master node components.

High-availability architecture two: an independent etcd cluster is used, not co-located with the master nodes.

Both approaches provide control-plane redundancy and make the cluster highly available. They differ as follows:

- Stacked etcd:

  - Needs fewer machines

  - Simple to deploy and easy to manage

  - Easy to scale out

  - Higher risk: when one host goes down, a master and an etcd member are lost together, so cluster redundancy takes a larger hit

- Independent etcd:

  - Needs more machines (because an etcd cluster should have an odd number of members, the control plane of this topology needs at least 6 hosts)

  - Deployment is relatively complex, since the etcd cluster and the master cluster have to be managed separately

  - Decouples the control plane from etcd, so the cluster is more robust and carries less risk: losing a single master or a single etcd member has very little impact on the cluster
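The odd-member rule and the six-host figure follow from simple majority arithmetic. The sketch below is an illustration rather than anything taken from etcd itself; the helper names are made up for this example. It computes the quorum and failure tolerance for a given member count, and the control-plane host count for each topology.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with the given member count."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


def hosts_needed(control_plane_nodes: int, etcd_members: int, stacked: bool) -> int:
    """Control-plane host count for the stacked and the independent topology."""
    if stacked:
        # etcd runs on the control-plane hosts themselves
        return max(control_plane_nodes, etcd_members)
    return control_plane_nodes + etcd_members


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} etcd members: quorum={quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
    print("stacked hosts:", hosts_needed(3, 3, stacked=True))
    print("independent hosts:", hosts_needed(3, 3, stacked=False))
```

Going from 3 to 4 members raises the quorum without raising the number of tolerated failures, which is why even member counts bring no benefit.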

Tip: we use high-availability architecture one (stacked etcd) to make Kubernetes highly available.

One point needs special mention. Although the scheduler and the controller-manager are deployed on every master node, only one instance of each is active at any time. Because both are stateful services, the instances on the different nodes run a leader election to prevent duplicate scheduling. The identity of the instance that is currently working is saved in the leader-election record of the scheduler and the controller-manager, which you can view with `kubectl get leases -n kube-system`.
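The idea behind this kind of leader election can be sketched in a few lines of Python. This is a simplified illustration, not the actual Kubernetes implementation: the `Lease` class, the `try_acquire` function, and the 15-second duration are all hypothetical, and the real components also rely on the API server to reject conflicting updates.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    holder: str        # identity of the current leader
    renew_time: float  # last time the leader renewed, in seconds
    duration: float    # how long a renewal stays valid, in seconds


def try_acquire(lease: Lease, candidate: str, now: float) -> Lease:
    """Return the lease after `candidate` attempts to acquire or renew it."""
    expired = now > lease.renew_time + lease.duration
    if lease.holder == candidate or expired:
        # The holder renews, or a standby takes over an expired lease.
        return Lease(holder=candidate, renew_time=now, duration=lease.duration)
    return lease  # another instance still holds a valid lease


if __name__ == "__main__":
    lease = Lease(holder="master1", renew_time=0.0, duration=15.0)
    lease = try_acquire(lease, "master2", now=5.0)
    print(lease.holder)  # master1 is still the leader
    lease = try_acquire(lease, "master2", now=20.0)
    print(lease.holder)  # master1 stopped renewing, so master2 takes over
```

A standby instance only becomes active once the current holder has stopped renewing for longer than the lease duration, which is what keeps two schedulers from working at the same time.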

Load Balancer

Both architectures need a load balancer. It can be a software load balancer (Nginx, LVS, or HAProxy + Keepalived) or a hardware load balancer such as F5. The load balancer exposes a VIP in front of kube-apiserver, which is what makes the master nodes highly available; all other components connect to kube-apiserver through this VIP.

We chose a load balancer built with HAProxy + Keepalived.

The final deployment architecture is shown below:

Architecture description

- etcd is deployed together with the master nodes and relies on them for high availability

- Keepalived and HAProxy make the apiserver highly available
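The division of labor between the two tools can be sketched as follows: Keepalived decides which load-balancer host holds the VIP, and HAProxy forwards traffic only to apiserver backends that pass their health check. The Python below is a toy model under stated assumptions; the class, the function names, and the priority values are hypothetical and are not Keepalived or HAProxy configuration.

```python
from dataclasses import dataclass


@dataclass
class LoadBalancerNode:
    name: str
    priority: int  # the healthy node with the highest priority holds the VIP
    healthy: bool  # whether the local load-balancer process is up


def vip_holder(nodes):
    """Return the name of the node that should hold the VIP, or None."""
    candidates = [node for node in nodes if node.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda node: node.priority).name


def healthy_backends(apiservers):
    """Keep only the kube-apiserver backends that passed their health check."""
    return [name for name, ok in apiservers.items() if ok]


if __name__ == "__main__":
    slb = [LoadBalancerNode("k8s-slb1", 100, True), LoadBalancerNode("k8s-slb2", 90, True)]
    print(vip_holder(slb))   # the higher-priority node holds the VIP
    slb[0].healthy = False   # simulate a failure of the first load balancer
    print(vip_holder(slb))   # the VIP moves to the standby node
    masters = {"k8s-master1": True, "k8s-master2": False, "k8s-master3": True}
    print(healthy_backends(masters))
```

Clients keep using the same VIP throughout, so neither a load-balancer failure nor a single apiserver failure requires any change on the worker nodes.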

With the deployment architecture settled, we can plan the machines.

Machine planning

| Host name | IP address | Configuration (CPU-memory-disk) | OS version | Role |
|---|---|---|---|---|
| k8s-slb1 | 172.30.15.*** | 2C-4G-50G | CentOS 7.8 | Keepalived & HAProxy |
| k8s-slb2 | 172.30.15.*** | 2C-4G-50G | CentOS 7.8 | Keepalived & HAProxy |
| k8s-master1 | 172.30.15.*** | 8C-32G-150G | CentOS 7.8 | master + etcd |
| k8s-master2 | 172.30.15.*** | 8C-32G-150G | CentOS 7.8 | master + etcd |
| k8s-master3 | 172.30.15.*** | 8C-32G-150G | CentOS 7.8 | master + etcd |
| k8s-worker1 | 172.30.15.*** | 8C-32G-500G | CentOS 7.8 | CI/CD + storage |
| k8s-worker2 | 172.30.15.*** | 8C-32G-500G | CentOS 7.8 | CI/CD + storage |
| k8s-worker3 | 172.30.15.*** | 8C-32G-500G | CentOS 7.8 | CI/CD + storage |

Note: because some applications and middleware need to persist data, storage is included in the table above and is placed on the worker nodes. Storage is covered later.

Don't be alarmed at needing this many machines up front. Once your applications have moved to the cloud, you can save at least this many machines elsewhere.

Technology selection

Next, let's look at the overall technology selection, covering the container platform, storage, and the Kubernetes build tool.

We spent a lot of effort on research during the early selection phase. The research process is not shown here; we go straight to the conclusions.

Container platform

| Container platform | Advantages | Disadvantages | Notes |
|---|---|---|---|
| KubeSphere | Fully open-source code; active community; good UI experience; backed by the team at QingCloud, a listed company | Multi-cluster management is not yet mature; a few minor bugs still show up in use | Learning materials come as both videos and documents, which suits teams that need to learn quickly. There is also a regular biweekly community meeting where you can follow the project's development. |
| Kuboard | Documentation is fairly detailed and can double as learning material | A personal open-source project: the documentation is open, the code is not | The documentation is fairly complete, which makes it suitable for a first look at k8s and for building a k8s learning platform. Not recommended for production use. |
| Rancher | Strong development team; active community; good at integrating cloud platform resources; overseas company | Documentation is mainly in English; the web UI always feels somewhat sluggish | Some companies in China use it. The main feedback is that the product experience is poor; the technical team is strong, but the Chinese documentation lags behind. |

The winner: KubeSphere

Selection reasons: simple to install and easy to use.

- Can build a one-stop enterprise DevOps architecture with visual operations capabilities (no need to assemble the pieces by hand from separate open-source tools)

- Provides logging, monitoring, events, auditing, alerting, and notifications across dimensions from platform to application, giving centralized observability with multi-tenant isolation

- Simplifies continuous integration, testing, review, release, upgrade, and elastic scaling of applications

- Provides microservice-based grayscale release, traffic management, network topology, and tracing for cloud-native applications

- Provides an easy-to-use command terminal and graphical operation panel, suiting operations staff with different working habits

- Can be decoupled easily, avoiding vendor lock-in

Storage options

Only distributed storage components are considered here; local storage such as OpenEBS was ruled out directly.

| Storage option | Advantages | Disadvantages | Notes |
|---|---|---|---|
| Ceph | Plenty of resources available; most container platforms support Ceph | High operations cost; if you cannot handle Ceph cluster failures, it is best left alone | Someone once went through the painful experience of all 3 replicas being damaged and the data lost, so do not choose it lightly until you can handle all kinds of failures (feedback from community members). |
| GlusterFS | Simple to deploy and maintain; highly available through multiple replicas | Relatively little documentation; has not been updated for a long time | Simple to deploy and maintain; if something goes wrong, the data is more likely to be recoverable |
| NFS | Widely used | Single point of failure; network jitter | Using NFS storage in production is not recommended (especially on Kubernetes 1.20 or later). It may cause problems such as failed to obtain lock and input/output error, which put Pods into CrashLoopBackOff. In addition, some applications, such as Prometheus, are not compatible with NFS. |

The winner: Ceph

Selection reasons:

- A highly available Ceph cluster can be built quickly with Rook, and it has many users

- Supports multiple storage types (block storage, file storage, and object storage), which is very convenient

Kubernetes build tools

| Build tool | Advantages | Disadvantages |
|---|---|---|
| Kubeadm | The cluster building tool officially recommended by Kubernetes | The k8s cluster certificates must be renewed manually |
| KubeKey | Installs Kubernetes and KubeSphere in a more convenient, fast, efficient, and flexible way. Supports installing Kubernetes alone or installing KubeSphere as a whole. Renews the k8s cluster certificates automatically | Not an official k8s tool |
| Binary installation | Meets personal learning needs | Complex to deploy |

The winner: KubeKey

Selection reasons:

It is simple and easy to use. It was built on top of kubeadm, and the main attraction is its automatic renewal of the k8s cluster certificates, so operations staff no longer have to renew them by hand before they expire. Although it is not an official k8s tool, it has passed CNCF Kubernetes conformance verification.

Software versions

| Software | Version | Notes |
|---|---|---|
| Operating system | CentOS 7.8 | Pay attention to the kernel version: the 3.10 kernel is not stable enough, so upgrade the kernel to 4.19+. Check the kernel version with `uname -sr` (currently 3.10). |
| KubeSphere | 3.2.1 | The latest version at the time of publication |
| KubeKey | v2.0.0 | The latest version at the time of publication |
| Docker | 20.10.9 | Chosen for stability |
| Kubernetes | 1.21.5 | The highest version supported by KubeKey 2.0.0 |
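The kernel requirement in the table is easy to check automatically before installation. The following is a minimal sketch, assuming the `uname -sr` output has the usual `Linux <major>.<minor>...` shape; the function names and the sample strings are made up for illustration.

```python
import re

MIN_KERNEL = (4, 19)  # minimum kernel version recommended in the table above


def parse_kernel_version(uname_output: str):
    """Extract (major, minor) from output such as 'Linux 3.10.0-1127.el7.x86_64'."""
    match = re.search(r"(\d+)\.(\d+)", uname_output)
    if match is None:
        raise ValueError(f"no kernel version found in {uname_output!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_is_supported(uname_output: str, minimum=MIN_KERNEL) -> bool:
    """True when the kernel version is at least the required minimum."""
    return parse_kernel_version(uname_output) >= minimum


if __name__ == "__main__":
    # Sample strings for illustration; pass the real output of `uname -sr` instead.
    for sample in ("Linux 3.10.0-1127.el7.x86_64", "Linux 4.19.12-1.el7.elrepo.x86_64"):
        status = "ok" if kernel_is_supported(sample) else "upgrade needed"
        print(sample, "->", status)
```

Comparing the version as a tuple of integers avoids the string-comparison trap where "3.10" would sort after "3.9".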

Summary

This article briefly introduced the core components of Kubernetes, the Kubernetes high-availability deployment options, and the related technology selection. Kubernetes is hard, and it is unrealistic to learn it by reading alone; it takes repeated practice. Readers who have the means are encouraged to practice along with this series.

边栏推荐

- MySQL性能优化(一):MySQL架构与核心问题

- 70. 爬楼梯:假设你正在爬楼梯。需要 n 阶你才能到达楼顶。 每次你可以爬 1 或 2 个台阶。你有多少种不同的方法可以爬到楼顶呢?

- Grafana visual configuration chart table

- 阿里二面:什么是mmap ?(不是mmp)

- 【微信小程序】camera系统相机(79/100)

- Static routing principle and configuration

- thymeleaf应用笔记

- MobileViT:挑战MobileNet端侧霸主

- 一个非常简单的函数为什么会崩溃

- [214] PHP reads the writing method of all files in the directory

猜你喜欢

Implementing DDD based on ABP -- domain service, application service and dto practice

ASUS Adu 14 Pro is equipped with the new generation 12 core standard voltage processor

小米12S Ultra产品力这么强老外却买不到 雷军:先专心做好中国市场

荐号 | 真正的卓越者,都在践行“人生最优策略”,推荐这几个优质号

这价格够香!灵耀14 2022影青釉秒杀:12代酷睿+2.8K OLED屏

07.01 Huffman tree

网络层协议介绍

交换机DHCP服务器配置方法(命令行版)

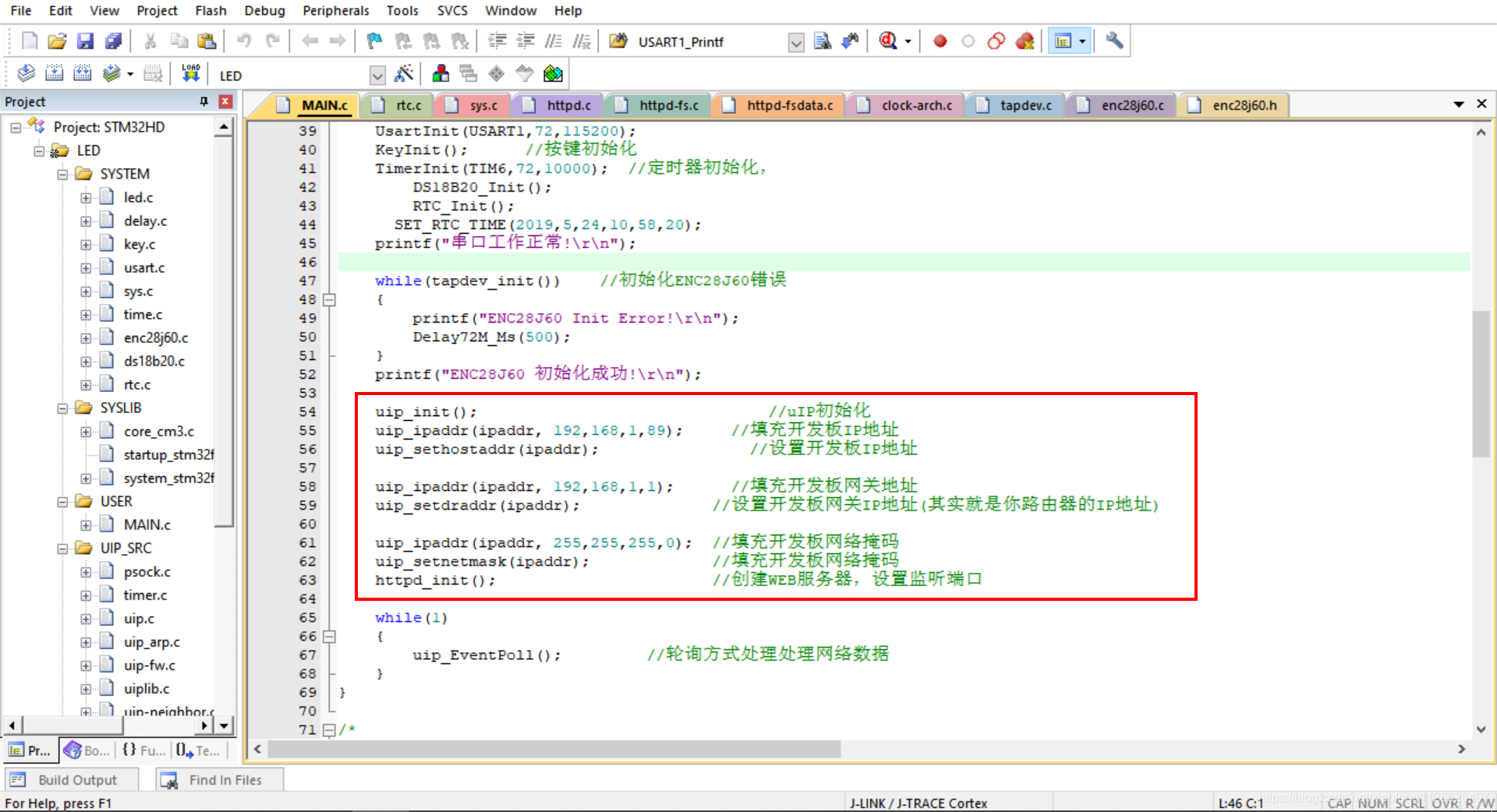

Example of implementing web server with stm32+enc28j60+uip protocol stack

In the cloud native era, developers should have these five capabilities

随机推荐

Utilisation simple de quartz et de ses emplois

【sklearn】数据集拆分 sklearn.moduel_selection.train_test_split

three hundred and thirteen billion one hundred and thirty-one million three hundred and thirteen thousand one hundred and twenty-three

Leetcode 111. Minimum depth of binary tree

mysql.h: No such file or directory

ASUS Adu 14 Pro is equipped with the new generation 12 core standard voltage processor

maya咖啡机建模

Did someone cut someone with a knife on Shanghai Metro Line 9? Rail transit public security: safety drill

6. < tag dynamic planning and housebreaking collection (tree DP) > lt.198 Home raiding + lt.213 Looting II + lt.337 Looting III DBC

In the cloud native era, developers should have these five capabilities

网络安全(4)

Redis基础知识、应用场景、集群安装

leetcode:169. Most elements

Transport layer protocol

利用二分寻找峰值

hi和hello两个请求引发的@RequestBody思考

别乱用UUID了,自增ID和UUID性能差距你测试过吗?

VMware Workstation Pro virtual machine network three types of network cards and their usage

Basic principle and configuration of switch

这价格够香!灵耀14 2022影青釉秒杀:12代酷睿+2.8K OLED屏