Li Hongyi Machine Learning 3: Gradient Descent

2022-07-20 17:05:00 [InfoQ]

1. Sources of error

1.1 Underfitting and overfitting

- If the model's error on the training set is large (large bias), the model is underfitting. Remedy: redesign the model, for example by considering higher powers of the input or a more complex model.

- If the model achieves a small error on the training set but a large error on the test set, the model probably has large variance, i.e. it is overfitting. Remedies: collect more data; apply regularization.
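To make the two cases concrete, here is a minimal sketch (an illustrative assumption, not the lecture's own data) that fits polynomials of increasing degree to noisy samples of sin(2πx) with NumPy and reports the training and test mean squared error. The data-generating function, noise level, sample sizes, and degrees are all hypothetical choices.

```python
import numpy as np

# Hypothetical toy data (an assumption for illustration): y = sin(2*pi*x) + Gaussian noise.
rng = np.random.default_rng(0)


def make_data(n):
    """Draw n noisy samples of sin(2*pi*x) with x uniform in [0, 1]."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y


def mse(coeffs, x, y):
    """Mean squared error of the polynomial with coefficients `coeffs` on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)  # least-squares polynomial fit
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree={degree}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

With settings like these, the degree-1 fit usually has a large error on both sets (underfitting), while the degree-9 fit has the smallest training error but often a larger test error than degree 3 (overfitting); the exact numbers depend on the random seed.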

1.2 Model selection

- Cross validation: split the training set into two parts, one used as the training set and the other as the validation set. Train each candidate model on the training part, compare the candidates on the validation set, and pick the best one; then retrain that model on the full training set.

- N-fold cross validation: split the training set into N folds. For each candidate model, train N times, each time holding out a different fold as the validation set and training on the remaining N-1 folds, then compute the average validation error. Choose the model with the smallest average error and retrain it on the entire training set.
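The N-fold procedure can be sketched in NumPy as follows, assuming (hypothetically) that the candidate models are polynomials of different degrees and that the error measure is mean squared error. The function names `n_fold_cv_error` and `select_degree` are illustrative, not from any library.

```python
import numpy as np


def n_fold_cv_error(x, y, degree, n_folds=5, seed=0):
    """Average validation MSE of a degree-`degree` polynomial over n_folds folds."""
    idx = np.random.default_rng(seed).permutation(len(x))
    folds = np.array_split(idx, n_folds)
    errors = []
    for k in range(n_folds):
        val = folds[k]  # fold k is held out for validation
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        coeffs = np.polyfit(x[train], y[train], degree)
        errors.append(np.mean((np.polyval(coeffs, x[val]) - y[val]) ** 2))
    return float(np.mean(errors))


def select_degree(x, y, candidates, n_folds=5):
    """Pick the candidate with the lowest average CV error, then refit it on all data."""
    cv = {d: n_fold_cv_error(x, y, d, n_folds) for d in candidates}
    best = min(cv, key=cv.get)
    return best, np.polyfit(x, y, best), cv


# Hypothetical data from a quadratic plus noise.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 60)
y = 1.0 - x + 2.0 * x**2 + rng.normal(0.0, 0.1, 60)
best, coeffs, cv = select_degree(x, y, candidates=(1, 2, 3, 4))
print("best degree:", best, "CV errors:", cv)
```

Because every data point is used for validation exactly once, the average error is less sensitive to one lucky or unlucky split than a single train/validation split.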

2. Gradient descent

- L: the loss function

- θ: the parameters (θ stands for a whole set of parameters, possibly more than one)
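Gradient descent looks for the parameters that minimize the loss by repeatedly stepping against the gradient, θ ← θ − η∇L(θ), where η is the learning rate. The sketch below assumes, purely for illustration, a one-feature linear model with mean squared error as the loss; `gradient_descent` and `mse_grad` are hypothetical helper names.

```python
import numpy as np


def gradient_descent(grad_fn, theta0, lr=0.1, n_steps=1000):
    """Plain (batch) gradient descent: theta <- theta - lr * grad L(theta)."""
    theta = np.asarray(theta0, dtype=float)
    for _ in range(n_steps):
        theta = theta - lr * grad_fn(theta)
    return theta


# Illustrative loss: MSE of a linear model y_hat = w * x + b, with theta = [w, b].
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 3.0 * x + 2.0  # noise-free data generated with w = 3, b = 2


def mse_grad(theta):
    """Gradient of L(w, b) = mean((w * x + b - y) ** 2) with respect to [w, b]."""
    w, b = theta
    residual = w * x + b - y
    return np.array([2 * np.mean(residual * x), 2 * np.mean(residual)])


theta = gradient_descent(mse_grad, theta0=[0.0, 0.0], lr=0.05, n_steps=5000)
print(theta)  # approaches [3, 2]
```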

2.1 Adjust the learning rate
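As a minimal illustration of why the learning rate matters (an assumed toy setting, not taken from the lecture), take L(w) = w², whose gradient is 2w. Each update is w ← w − η·2w = (1 − 2η)w, so a very small η shrinks w slowly, a moderate η shrinks it quickly, and any η > 1 gives |1 − 2η| > 1, so the iterates diverge. The helper `run` is hypothetical.

```python
def run(lr, n_steps=20, w0=1.0):
    """Minimize L(w) = w**2 (gradient 2*w) with a fixed learning rate lr.

    Returns |w| after each step, starting with |w0|.
    """
    w = w0
    history = [abs(w)]
    for _ in range(n_steps):
        w = w - lr * 2 * w  # gradient descent step: w <- w - lr * dL/dw
        history.append(abs(w))
    return history


for lr in (0.01, 0.4, 1.1):  # too small, reasonable, too large
    print(f"lr={lr}: |w| after 20 steps = {run(lr)[-1]:.3g}")
```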

2.2 Gradient descent optimization

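- SGD (Stochastic Gradient Descent): instead of computing the loss over the whole training set, it updates the parameters using the gradient computed on one randomly chosen example (or a small mini-batch) at a time, which makes each update much cheaper.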

- Adagrad (Adaptive Gradient): learning principle: for each parameter dimension, accumulate the sum of the squares of its historical gradients, and when updating, divide the learning rate by the square root of this sum. Each parameter therefore gets its own learning rate that depends on its gradient history. Drawback: the update is strongly affected by past gradients; because the accumulated sum only grows, the learning rate decays quickly, the model's ability to keep learning weakens, and it may stop learning prematurely.

- RMSProp (Root Mean Square Propagation): learning principle: it adds a decay factor to Adagrad. Instead of summing all past squared gradients, it keeps an exponentially decaying average of them, balancing the "past" against the "present", with the decay rate set by a hyperparameter. It is suited to non-stationary objectives (i.e., ones that change over time) and works very well for RNNs.

- Adam (Adaptive Moment Estimation): one of the most popular optimizers in deep learning. It keeps exponential moving averages of both the gradient (the first moment, similar to momentum) and the squared gradient (the second moment, as in RMSProp), combining Adagrad's strength in handling sparse gradients with RMSProp's strength in handling non-stationary objectives. It is well suited to large datasets and high-dimensional parameter spaces.
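The four update rules can be sketched side by side in NumPy. This is a simplified illustration under stated assumptions: the loss is a hypothetical ill-conditioned quadratic, the gradient is computed exactly (so the `"sgd"` branch reduces to a plain gradient step; real SGD would use the gradient of a random mini-batch), and the hyperparameter values (`lr`, `rho`, `beta1`, `beta2`, `eps`) are common defaults or hand-picked, not prescribed by the lecture.

```python
import numpy as np


def grad(theta):
    """Gradient of the illustrative loss L = 0.5 * (theta0**2 + 25 * theta1**2)."""
    return np.array([1.0, 25.0]) * theta


def loss(theta):
    return 0.5 * float(theta[0] ** 2 + 25.0 * theta[1] ** 2)


def optimize(method, lr, n_steps=500, rho=0.9, beta1=0.9, beta2=0.999, eps=1e-8):
    theta = np.array([1.0, 1.0])
    acc = np.zeros(2)  # Adagrad: running sum of g**2; RMSProp/Adam: moving average of g**2
    m = np.zeros(2)    # Adam: moving average of g (first moment)
    for t in range(1, n_steps + 1):
        g = grad(theta)
        if method == "sgd":
            step = g
        elif method == "adagrad":
            acc += g ** 2  # the sum only grows, so steps keep shrinking
            step = g / (np.sqrt(acc) + eps)
        elif method == "rmsprop":
            acc = rho * acc + (1 - rho) * g ** 2  # decay factor balances past and present
            step = g / (np.sqrt(acc) + eps)
        elif method == "adam":
            m = beta1 * m + (1 - beta1) * g
            acc = beta2 * acc + (1 - beta2) * g ** 2
            m_hat = m / (1 - beta1 ** t)    # bias correction for the zero initialization
            v_hat = acc / (1 - beta2 ** t)
            step = m_hat / (np.sqrt(v_hat) + eps)
        else:
            raise ValueError(f"unknown method: {method}")
        theta = theta - lr * step
    return theta


for method, lr in [("sgd", 0.05), ("adagrad", 0.5), ("rmsprop", 0.01), ("adam", 0.01)]:
    print(f"{method:8s} lr={lr:<5} final loss = {loss(optimize(method, lr)):.2e}")
```

Adagrad's `acc` only grows, which is why its effective step size keeps shrinking, whereas RMSProp and Adam replace the running sum with an exponential moving average controlled by `rho` or `beta2`.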

2.3 Feature scaling

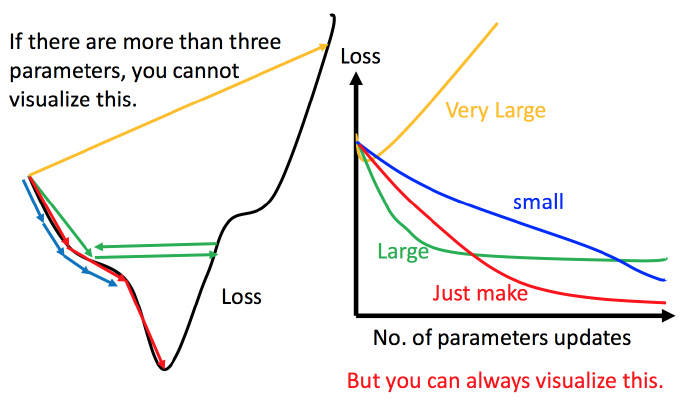
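One common way to scale features is standardization: for each feature dimension i, subtract its mean m_i and divide by its standard deviation σ_i, so every dimension has mean 0 and variance 1. Putting features on a similar scale makes the loss contours closer to circles, so gradient descent can move more directly toward the minimum. A minimal NumPy sketch with hypothetical data:

```python
import numpy as np


def standardize(X):
    """Scale each column (feature) to mean 0 and standard deviation 1."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled to avoid division by zero
    return (X - mean) / std, mean, std


# Hypothetical data: feature 1 lies in [1, 5], feature 2 is roughly 100x larger.
X = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 200.0], [4.0, 500.0], [5.0, 400.0]])
X_scaled, mean, std = standardize(X)
print(X_scaled.mean(axis=0), X_scaled.std(axis=0))  # approximately [0, 0] and [1, 1]
```

At prediction time, new inputs should be scaled with the `mean` and `std` computed on the training data, not with their own statistics.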

3. Limitations of gradient descent

- It easily gets stuck in a local minimum;

- It can get stuck at a point that is not an extremum but where the derivative is 0 (a stationary point, such as a saddle point);

- In practice it stops once the derivative is close to 0, but the surface may merely be very flat there (a plateau) rather than at an extremum.
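The first two failure modes are easy to reproduce on small hypothetical functions (chosen for illustration, not from the lecture): f(x) = x⁴ − 3x² + x has a global minimum near x ≈ −1.30 and a shallower local minimum near x ≈ 1.13, and g(x, y) = x² − y² has zero gradient at the saddle point (0, 0). The sketch stops once the gradient norm drops below a tolerance, which is the kind of stopping rule the third limitation warns about. `descend`, `grad_f`, and `grad_g` are hypothetical names.

```python
import numpy as np


def descend(grad_fn, start, lr=0.01, n_steps=5000, tol=1e-8):
    """Run gradient descent; stop early once the gradient norm falls below tol."""
    p = np.asarray(start, dtype=float)
    for _ in range(n_steps):
        g = grad_fn(p)
        if np.linalg.norm(g) < tol:
            break
        p = p - lr * g
    return p


def grad_f(p):
    """Derivative of f(x) = x**4 - 3*x**2 + x."""
    return 4 * p**3 - 6 * p + 1


def grad_g(p):
    """Gradient of g(x, y) = x**2 - y**2."""
    return np.array([2 * p[0], -2 * p[1]])


# 1) Local minimum: the result depends on where we start.
print(descend(grad_f, [2.0]))   # ends near the local minimum (~1.13)
print(descend(grad_f, [-2.0]))  # ends near the global minimum (~-1.30)

# 2) Saddle point: starting with y = 0, y never changes, so descent stops at (0, 0).
print(descend(grad_g, [1.0, 0.0]))
```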

4. Summary
