
SMPL model

2022-07-20 09:33:00 【Early lunar month in Pingqiu】

The official website provides two versions of the SMPL Python package: SMPL_python_v.1.0.0 and SMPL_python_v.1.1.0. The difference is that SMPL_python_v.1.0.0 is incomplete and only provides models with 10 shape PCA coefficients, whereas SMPL_python_v.1.1.0 provides models for three genders with 300 shape PCA coefficients.

Taking SMPL_python_v.1.1.0 as an example, the package contains three models and basic scripts for working with them.

The three models are the male, female, and neutral models, stored in pkl format. Taking the neutral model as an example, let's look at the data structure.

import pickle

# model_path points to the neutral model .pkl file
with open(model_path, 'rb') as f:
    smpl = pickle.load(f, encoding='latin1')

'J_regressor_prior': [24, 6890], scipy.sparse.csc.csc_matrix

# faces (triangle vertex indices)
'f': [13776, 3], numpy.ndarray

# regressor array that is used to calculate the 3D joints from the position of the vertices
'J_regressor': [24, 6890], scipy.sparse.csc.csc_matrix

# indices of the parent of each joint
'kintree_table': [2, 24], numpy.ndarray

'J': [24, 3], numpy.ndarray

'weights_prior': [6890, 24], numpy.ndarray

# linear blend skinning weights that represent how much the rotation matrix of each part affects each vertex
'weights': [6890, 24], numpy.ndarray

# pose blend shape basis (207 = 23 joints x 9 rotation matrix elements)
'posedirs': [6890, 3, 207], numpy.ndarray

'bs_style': 'lbs'

# the vertices of the template model
'v_template': [6890, 3], numpy.ndarray

# tensor of PCA shape displacements
'shapedirs': [6890, 3, 300], chumpy.ch.Ch

'bs_type': 'lrotmin'
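A listing like the one above can be produced by walking the loaded dictionary. The sketch below is a minimal helper and is not part of the SMPL package: the name `describe_model` and the toy dictionary are assumptions made for illustration, and the real pickle would be passed in instead of `toy_model`.

```python
import numpy as np


def describe_model(model):
    """Return {key: description} for a loaded SMPL-style dictionary."""
    summary = {}
    for key, value in model.items():
        type_name = type(value).__module__ + '.' + type(value).__name__
        # Array-like entries (numpy, scipy.sparse, chumpy) expose a .shape attribute.
        shape = getattr(value, 'shape', None)
        if shape is not None:
            summary[key] = '{} {}'.format(list(shape), type_name)
        else:
            summary[key] = repr(value)
    return summary


# Toy stand-in for the real pickle contents (hypothetical values).
toy_model = {
    'v_template': np.zeros((6890, 3)),
    'kintree_table': np.zeros((2, 24), dtype=np.int64),
    'bs_style': 'lbs',
}
for key, text in describe_model(toy_model).items():
    print(key, ':', text)
```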

def forward_shape(self, betas):
    v_shaped = self.v_template + blend_shapes(betas, self.shapedirs)
    return SMPLOutput(vertices=v_shaped, betas=betas, v_shaped=v_shaped)

def forward(self, betas, body_pose, global_orient, transl,
            return_verts=True, return_full_pose=False, pose2rot=True, **kwargs):
    # root orientation followed by the body joint rotations
    full_pose = torch.cat([global_orient, body_pose], dim=1)
    vertices, joints = lbs(betas, full_pose, self.v_template, self.shapedirs,
                           self.posedirs, self.J_regressor, self.parents, self.lbs_weights,
                           pose2rot=pose2rot)
    return SMPLOutput(vertices=vertices, global_orient=global_orient, body_pose=body_pose,
                      joints=joints, betas=betas, full_pose=full_pose)
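The `blend_shapes` call in `forward_shape` is a linear combination of the shape displacement basis, weighted by `betas`. The NumPy sketch below restates that step with the array layout listed earlier. It uses a tiny random basis instead of the real model, and `blend_shapes_np` is an illustrative name rather than the library function.

```python
import numpy as np


def blend_shapes_np(betas, shapedirs):
    """betas: [N, B], shapedirs: [V, 3, B] -> per-vertex offsets [N, V, 3]."""
    return np.einsum('bl,mkl->bmk', betas, shapedirs)


rng = np.random.default_rng(0)
num_verts, num_betas = 5, 10            # toy sizes; SMPL uses V = 6890
v_template = rng.normal(size=(num_verts, 3))
shapedirs = rng.normal(size=(num_verts, 3, num_betas))

betas = np.zeros((2, num_betas))
betas[1, 0] = 2.0                        # only the first coefficient is active

v_shaped = v_template[None] + blend_shapes_np(betas, shapedirs)

# Zero betas reproduce the template; a single beta adds a scaled basis vector.
assert np.allclose(v_shaped[0], v_template)
assert np.allclose(v_shaped[1], v_template + 2.0 * shapedirs[:, :, 0])
```

Because the operation is linear, each coefficient moves every vertex along a fixed direction, which is why 10 or 300 coefficients are enough to describe a body shape.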

The core logic is in lbs.py.

batch_size = max(betas.shape[0], pose.shape[0])
device, dtype = betas.device, betas.dtype

# add shape contribution
v_shaped = v_template + blend_shapes(betas, shapedirs)

# Get the joints
J = vertices2joints(J_regressor, v_shaped)

# add pose blend shapes
# (this excerpt assumes pose already holds rotation matrices, i.e. pose2rot=False)
ident = torch.eye(3, dtype=dtype, device=device)
pose_feature = pose[:, 1:].view(batch_size, -1, 3, 3) - ident
# [N, P] * [P, V*3] -> [N, V, 3]
pose_offsets = torch.matmul(pose_feature.view(batch_size, -1),
                            posedirs).view(batch_size, -1, 3)
v_posed = pose_offsets + v_shaped

# Get the global joint location
rot_mats = pose.view(batch_size, -1, 3, 3)
J_transformed, A = batch_rigid_transform(rot_mats, J, parents, dtype=dtype)

# Do skinning
W = lbs_weights.unsqueeze(dim=0).expand([batch_size, -1, -1])
num_joints = J_regressor.shape[0]
T = torch.matmul(W, A.view(batch_size, num_joints, 16)) \
    .view(batch_size, -1, 4, 4)
homogen_coord = torch.ones([batch_size, v_posed.shape[1], 1],
                           dtype=dtype, device=device)
v_posed_homo = torch.cat([v_posed, homogen_coord], dim=2)
v_homo = torch.matmul(T, torch.unsqueeze(v_posed_homo, dim=-1))
verts = v_homo[:, :, :3, 0]

The code first applies the shape blend shapes and the pose blend shapes, then performs skinning. It returns the 6890×3 model vertices and the 3D coordinates of n keypoints.
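To make the skinning step concrete, the sketch below repeats it in NumPy on a toy mesh with two joints. It assumes the per-joint 4×4 transforms `A` are already available (in lbs.py they come from `batch_rigid_transform`). The function name `skin_vertices` and all sizes and values are made up for illustration.

```python
import numpy as np


def skin_vertices(v_posed, weights, A):
    """Linear blend skinning for a single mesh.

    v_posed: [V, 3] vertices after shape and pose blend shapes
    weights: [V, J] skinning weights, each row sums to 1
    A:       [J, 4, 4] rigid transform of every joint
    """
    num_joints = A.shape[0]
    # Blend the joint transforms per vertex: [V, J] @ [J, 16] -> [V, 4, 4]
    T = (weights @ A.reshape(num_joints, 16)).reshape(-1, 4, 4)
    # Homogeneous coordinates: [V, 4, 1]
    ones = np.ones((v_posed.shape[0], 1))
    v_homo = np.concatenate([v_posed, ones], axis=1)[:, :, None]
    return (T @ v_homo)[:, :3, 0]


v_posed = np.array([[0.0, 0.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [2.0, 0.0, 0.0]])
# Vertex 0 follows joint 0, vertex 2 follows joint 1, vertex 1 is split evenly.
weights = np.array([[1.0, 0.0],
                    [0.5, 0.5],
                    [0.0, 1.0]])

A = np.tile(np.eye(4), (2, 1, 1))
A[1, :3, 3] = [0.0, 1.0, 0.0]           # joint 1 translates by +1 along y

verts = skin_vertices(v_posed, weights, A)
print(verts)
```

The middle vertex moves by half of joint 1's translation, which is the blending behavior the `weights` matrix encodes.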

Entries of type scipy.sparse.csc.csc_matrix can be converted to numpy.ndarray during processing.

import numpy as np

def to_np(array, dtype=np.float32):
    # sparse matrices are densified first so that np.array gets a regular 2D array
    if 'scipy.sparse' in str(type(array)):
        array = array.todense()
    return np.array(array, dtype=dtype)

SMPL and SMPL-H share the same topology.

Python 3 cannot recognize the chumpy.ch.Ch format directly. For Python 3 compatibility, data in this format needs to be converted to numpy.ndarray.

output_dict = {}

# .items() works in both Python 2 and Python 3 (iteritems() exists only in Python 2)
for key, data in body_data.items():
    if 'chumpy' in str(type(data)):
        output_dict[key] = np.array(data)
    else:
        output_dict[key] = data

with open(out_path, 'wb') as f:
    pickle.dump(output_dict, f)
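After the conversion it can be worth confirming that the new file no longer depends on chumpy. The check below is a rough sketch: `chumpy_keys` is a hypothetical helper, the file name is arbitrary, and the toy dictionary stands in for the converted model.

```python
import os
import pickle
import tempfile

import numpy as np


def chumpy_keys(model):
    """Return the keys whose values are still chumpy objects."""
    return [key for key, value in model.items()
            if 'chumpy' in type(value).__module__]


# Toy stand-in for the converted model (hypothetical values).
toy_model = {'shapedirs': np.zeros((4, 3, 2)), 'bs_style': 'lbs'}

with tempfile.TemporaryDirectory() as tmp_dir:
    out_path = os.path.join(tmp_dir, 'model_np.pkl')
    with open(out_path, 'wb') as f:
        pickle.dump(toy_model, f)
    with open(out_path, 'rb') as f:
        reloaded = pickle.load(f, encoding='latin1')

# An empty list means every entry loads without chumpy.
print(chumpy_keys(reloaded))
```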

Some projects use J_regressor_extra.npy to add extra keypoints.

J_regressor_extra: [9, 6890], numpy.ndarray

extra_joints = vertices2joints(J_regressor_extra, smpl_output.vertices)
joints = torch.cat([smpl_output.joints, extra_joints], dim=1)
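The extra regressor is applied in the same way as `J_regressor`: every joint is a weighted sum of mesh vertices. The NumPy sketch below shows the idea on toy data. The name `vertices2joints_np`, the array sizes, and the regressor values are assumptions for illustration only.

```python
import numpy as np


def vertices2joints_np(J_regressor, vertices):
    """J_regressor: [J, V], vertices: [N, V, 3] -> joints [N, J, 3]."""
    return np.einsum('bik,ji->bjk', vertices, J_regressor)


rng = np.random.default_rng(0)
batch_size, num_verts = 2, 6             # toy sizes; SMPL uses V = 6890
vertices = rng.normal(size=(batch_size, num_verts, 3))

# Toy regressor: each row holds weights over the vertices, normalized to sum to 1.
J_regressor = rng.random(size=(4, num_verts))
J_regressor /= J_regressor.sum(axis=1, keepdims=True)

# Toy extra regressor: each extra joint simply picks one vertex.
J_regressor_extra = np.zeros((3, num_verts))
J_regressor_extra[[0, 1, 2], [0, 2, 5]] = 1.0

joints = vertices2joints_np(J_regressor, vertices)
extra_joints = vertices2joints_np(J_regressor_extra, vertices)
all_joints = np.concatenate([joints, extra_joints], axis=1)
print(all_joints.shape)
```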

边栏推荐

猜你喜欢

【Mindspore】【安装】无可用的Ascend 910 AI处理器软件配套包

Mindspore依赖库找不到

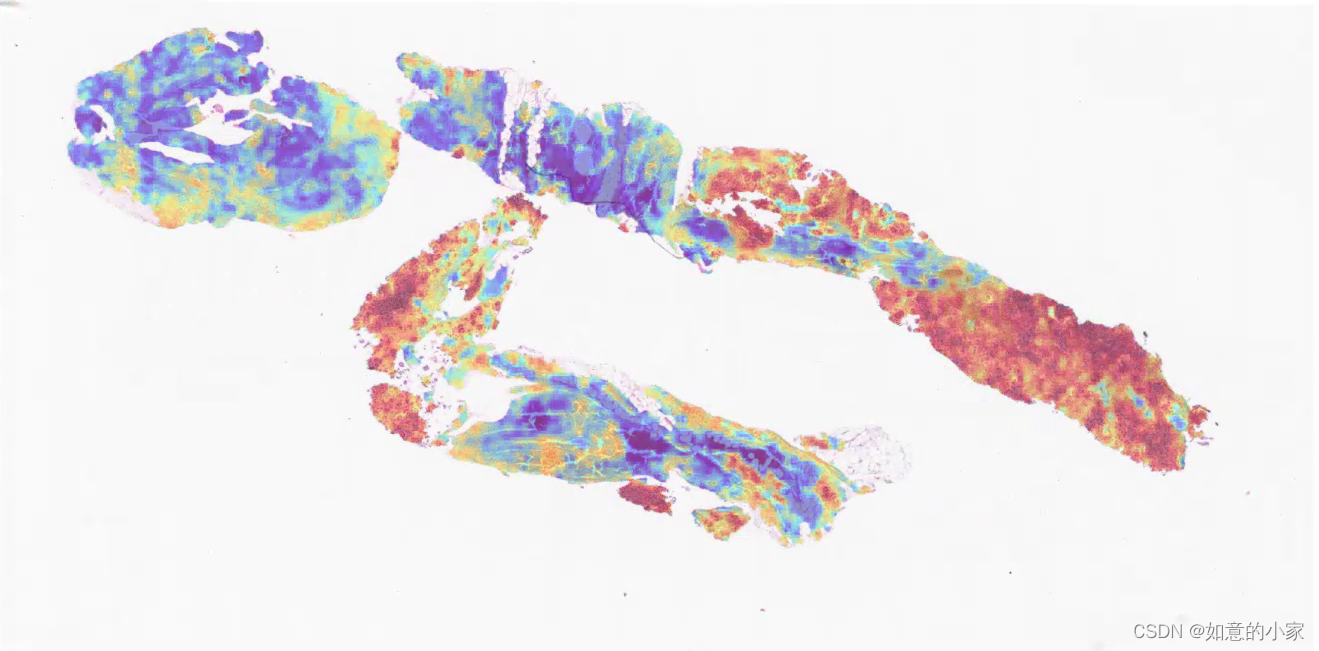

Thermogram display of pathological tissue section (floating on the surface of tissue section)

Golang: some operations that are easy to misunderstand

2D&3D Pose数据集

yolov3

VSFTP服务器搭建

上采样和上卷积的区别

FPGA刷题P4:使用8-3优先编码器实现16-4优先编码器、 使用3-8译码器实现全减器、 实现3-8译码器、使用3-8译码器实现逻辑函数、数据选择器实现逻辑电路

r-cnn

随机推荐

Vector exception thrown by opencv

算子Concat 拼接包含多个 tensor 的元组出错

Rstudio mapping

DGF网络

【CVPR2020】文章、代码和数据链接

smplify-x笔记

FPGA刷题P3: 4位数值比较器电路、4bit超前进位加法器电路、优先编码器电路、 优先编码器

使用pynative模式如何进行迁移学习?

Opencv learning (4) color conversion processing image rendering random number

Fast RCNN and fast RCNN

论文解读《基于伪标签自训练的局部对比损失半监督医学图像分割》

[mindspore learning] [multi label classification] how to build a multi label mindrecord format data set of images

Jwt+rsa stateless SSO principle

Difference between up sampling and up convolution

Samba的搭建

torch的使用须知

基于深度学习的病理图片研究进展【含论文及其概述】

有趣的torch.einsum

Enterprise wechat self built application

论文解读《Protein subcellular localization based on deep image features and criterion learning strategy》