
Migrating MySQL to Dameng (DM) with DTS

2022-07-21 03:26:00 【qq_38113004】

One. Analyze the system to be migrated and identify the migration objects

1. Use the DTS data migration tool to migrate the general database objects and the data.

2. Manually port the remaining SQL objects, such as stored procedures, functions, and other non-table objects.

3. After the migration, verify the results to make sure the migrated objects and data are complete and correct.

4. Port the application system.

5. Test and tune the application system.

6. Put the application system into production.

Two. Preparation before migration

Confirm which application users or databases are to be migrated, because the corresponding users must be created in the DM database in advance. Note that one MySQL database corresponds to one user in DM, and each user needs its own tablespace.

For example, to migrate the MySQL database TEST to DM, first run the following commands in DM:

1. Create a tablespace

```sql
create tablespace test datafile 'test.dbf' size 128 autoextend on;
```

2. Create a user

```sql
-- replace <password> with the password for the new user
create user test identified by "<password>" default tablespace test;
```

3. Grant privileges

```sql
grant resource,public,vti to test;
```
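When several MySQL databases are migrated, the three statements above are repeated once per database. A minimal sketch that generates them, assuming (as in the TEST example) that the DM user and its tablespace both reuse the lower-cased MySQL database name. The function name `dm_prep_statements` and the 128 MB default are illustrative only.

```python
def dm_prep_statements(db_name: str, password: str, size_mb: int = 128) -> list[str]:
    """Build the DM tablespace/user/grant statements for one MySQL database.

    Assumption: the DM user and its tablespace reuse the lower-cased MySQL
    database name, mirroring the TEST example above.
    """
    name = db_name.lower()
    return [
        f"create tablespace {name} datafile '{name}.dbf' size {size_mb} autoextend on;",
        f'create user {name} identified by "{password}" default tablespace {name};',
        f"grant resource,public,vti to {name};",
    ]


# Print the script for the TEST database; "<password>" is a placeholder.
for stmt in dm_prep_statements("TEST", "<password>"):
    print(stmt)
```

The output can be saved to a file and run in DM before the DTS migration starts.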

Three. Data migration steps

1. New project

2. New migration

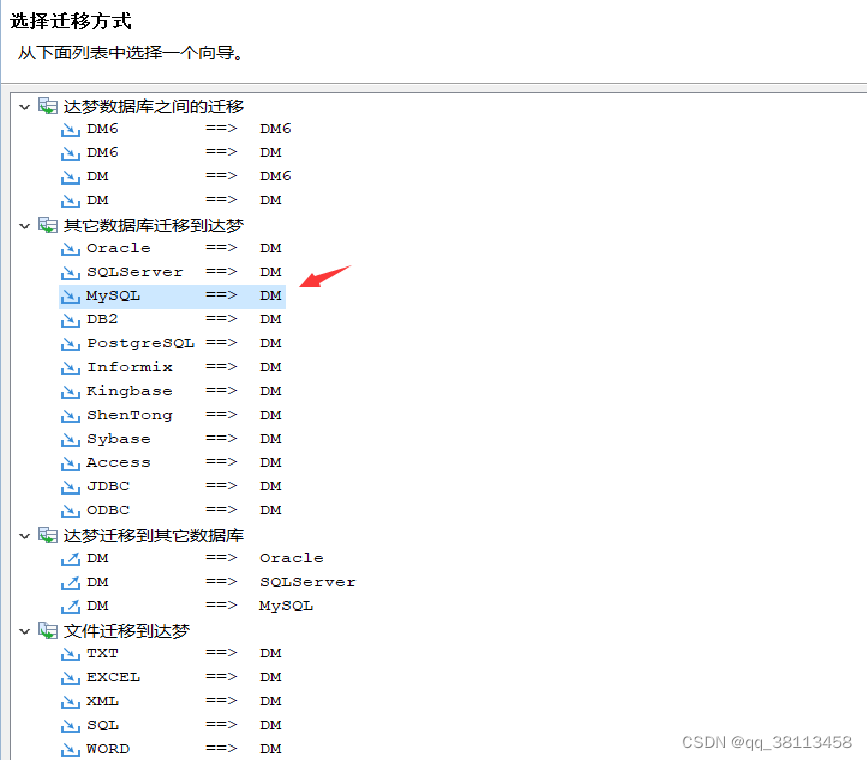

3. Select the MySQL to DM migration type

4. Enter the connection information for the MySQL source

5. Enter the connection information for the DM destination

6. Select the objects to migrate

7. Review the migration task

8. Run the migration task

9. Handle non-table objects such as stored procedures, triggers, user-defined types, and functions separately. Export them from the source MySQL database into a SQL file, manually modify that file so it conforms to DM SQL syntax, and then import it into the DM database.

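For example, replace DB_USER and DB_NAME with the database user name and the database name. Here `-n` omits CREATE DATABASE, `-t` omits CREATE TABLE, `-d` omits row data, and `-R` includes stored procedures and functions:

```shell
mysqldump -u DB_USER -p -n -t -d -R DB_NAME > xx.sql
```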
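Part of the manual editing is mechanical and can be scripted. The sketch below is a first pass only. It assumes the dump uses mysqldump's usual `DELIMITER ;;` wrapping and `DEFINER=` clauses, and it removes them. These are MySQL-client and MySQL-specific syntax that the DM statements would not need. Backticks, data types, and MySQL-only functions are left for the hand edits described above. The helper name `first_pass_cleanup` is hypothetical.

```python
import re

# DEFINER=`user`@`host` clauses that mysqldump writes into CREATE statements.
DEFINER_RE = re.compile(r"\s*DEFINER\s*=\s*`[^`]*`@`[^`]*`", re.IGNORECASE)
# mysql client "DELIMITER ;;" / "DELIMITER ;" commands (not SQL statements).
DELIMITER_RE = re.compile(r"^\s*DELIMITER\s+\S+\s*$", re.IGNORECASE)


def first_pass_cleanup(dump_sql: str) -> str:
    """Drop DELIMITER lines and DEFINER clauses; turn ';;' terminators into ';'."""
    kept = []
    for line in dump_sql.splitlines():
        if DELIMITER_RE.match(line):
            continue
        line = DEFINER_RE.sub("", line)
        # mysqldump ends routine bodies with the temporary ';;' delimiter.
        if line.rstrip().endswith(";;"):
            line = line.rstrip()[:-1]
        kept.append(line)
    return "\n".join(kept)


sample = "DELIMITER ;;\nCREATE DEFINER=`root`@`localhost` PROCEDURE `p1`()\nBEGIN\n  SELECT 1;\nEND ;;\nDELIMITER ;"
print(first_pass_cleanup(sample))
```

The cleaned file still has to be reviewed statement by statement before it is imported into DM.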

Four. Check the database migration results

1. Count the objects in the source MySQL database that are to be migrated.

2. Count the objects and the table row counts in the target DM database.

3. Once the data has been checked and no problems remain, update the statistics for the whole migrated schema. After a large volume of data has been migrated, stale statistics can lead the optimizer to choose poor query plans, which can seriously hurt query performance. Collect statistics with:

```sql
DBMS_STATS.GATHER_SCHEMA_STATS('<user name>', 100, TRUE, 'FOR ALL COLUMNS SIZE AUTO');
```
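Comparing the counts from steps 1 and 2 is easy to automate. A minimal sketch, assuming the per-table counts have already been collected into two dicts, for example with `SELECT COUNT(*)` on each table. MySQL's `information_schema.TABLES.TABLE_ROWS` is only an estimate for InnoDB tables, so it is not suitable here. Names are compared case-insensitively because the two databases may report the same table in different case. `diff_counts` is a hypothetical helper.

```python
def diff_counts(source: dict[str, int], target: dict[str, int]) -> dict:
    """Return {NAME: (source_count, target_count)} for every mismatch.

    Names are upper-cased before comparing. A name that exists on only one
    side is reported with None for the missing side.
    """
    src = {k.upper(): v for k, v in source.items()}
    dst = {k.upper(): v for k, v in target.items()}
    return {
        name: (src.get(name), dst.get(name))
        for name in sorted(src.keys() | dst.keys())
        if src.get(name) != dst.get(name)
    }


mysql_rows = {"orders": 10, "users": 5}
dm_rows = {"ORDERS": 10, "USERS": 4, "LOGS": 1}
print(diff_counts(mysql_rows, dm_rows))  # {'LOGS': (None, 1), 'USERS': (5, 4)}
```

An empty result means every table has the same row count on both sides. The same function works for object counts grouped by type.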

Five. Common migration errors and how to handle them

1. Invalid data type

For example, a MySQL column declared as int(10) fails with an invalid data type error during migration, because DM's int type does not take a precision. Simply change int(10) to int.
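When many table definitions are affected, the change can be applied to the DDL text in bulk. A sketch using a regular expression follows. It assumes that the other integer types (tinyint, smallint, integer, bigint) need the same fix as int. The source only mentions int, so verify the others against your DM version. `strip_int_display_width` is a hypothetical helper name.

```python
import re

# An integer type followed by a display width, e.g. int(10) or BIGINT(20).
INT_WIDTH_RE = re.compile(
    r"\b(tinyint|smallint|integer|int|bigint)\s*\(\s*\d+\s*\)", re.IGNORECASE
)


def strip_int_display_width(ddl: str) -> str:
    """Drop the MySQL display width (int(10) -> int), keeping the original case."""
    return INT_WIDTH_RE.sub(lambda m: m.group(1), ddl)


print(strip_int_display_width("id int(10) NOT NULL, n BIGINT(20), name varchar(50)"))
# id int NOT NULL, n BIGINT, name varchar(50)
```

The `\b` anchor keeps the pattern from matching inside longer words such as `point`, and character types like `varchar(50)` are left untouched.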

2. Invalid datetime data

For example, a MySQL TIMESTAMP column may have its default set to '0000-00-00 00:00:00'. This value is illegal for TIMESTAMP in DM, where values must lie between '0001-01-01 00:00:00.000000' and '9999-12-31 23:59:59.999999'. One way to handle this is to change the column to varchar2 in DM and migrate the data. Then clean up the illegal values in DM, and finally change the column type back to TIMESTAMP.
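The cleanup rule applied to the varchar2 staging column can be expressed as a small function. In this sketch, whether an illegal value becomes NULL or the earliest legal timestamp is an application decision. That choice is an assumption, because the steps above do not prescribe it. The helper `clean_timestamp` is hypothetical. It relies on Python's `datetime` range, which matches the DM range quoted above.

```python
from datetime import datetime
from typing import Optional

# Python's datetime range (0001-01-01 .. 9999-12-31 23:59:59.999999) matches the
# DM TIMESTAMP range quoted above, so any string that parses is in range.
_FORMATS = ("%Y-%m-%d %H:%M:%S.%f", "%Y-%m-%d %H:%M:%S")


def clean_timestamp(value: str, replacement: Optional[str] = None) -> Optional[str]:
    """Return value if it is a valid timestamp string, otherwise replacement.

    replacement=None means "store NULL"; passing '0001-01-01 00:00:00' maps
    zero dates to the earliest legal value instead.
    """
    for fmt in _FORMATS:
        try:
            datetime.strptime(value, fmt)
            return value
        except ValueError:
            continue  # try the next format
    return replacement  # e.g. '0000-00-00 00:00:00' never parses


print(clean_timestamp("0000-00-00 00:00:00"))  # None
print(clean_timestamp("2022-07-21 03:26:00"))  # 2022-07-21 03:26:00
```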

3. Record too long

The page size chosen when the DM database is initialized limits the length of each table row. The total length of a row (for ordinary data types) cannot exceed one page, and a row larger than the page size produces a record-too-long error. Solutions: initialize the database with a suitable page size; change long varchar columns (such as varchar2(8000)) to the text type; or enable long rows for the table with `alter table <table name> enable using long row;`.
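Before choosing a page size, it helps to estimate the widest row. This sketch sums the declared column widths in bytes and returns the smallest DM page size (4, 8, 16, or 32 KB) that holds that sum. It ignores per-row and per-page overhead, so the real limit is lower than a full page and the result is only a lower bound. `limit_ratio` is an illustrative knob for leaving headroom. It is not a DM setting; check the DM documentation for the exact row-length limit.

```python
from typing import Optional

DM_PAGE_SIZES_KB = (4, 8, 16, 32)  # page sizes selectable when initializing DM


def smallest_fitting_page_kb(column_bytes: list[int], limit_ratio: float = 1.0) -> Optional[int]:
    """Return the smallest page size (KB) whose limit holds the estimated row.

    The row estimate is the plain sum of declared column widths in bytes.
    limit_ratio is the fraction of a page a row may use (1.0 = the one-page
    rule described above).
    """
    row_bytes = sum(column_bytes)
    for kb in DM_PAGE_SIZES_KB:
        if row_bytes <= kb * 1024 * limit_ratio:
            return kb
    # No page size is large enough: convert long varchar columns to text
    # or enable long rows on the table instead.
    return None


print(smallest_fitting_page_kb([4, 8, 8000]))  # 8
print(smallest_fitting_page_kb([8000] * 5))    # None
```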

4. String truncation

This is generally caused by the character set or by table columns that are too short. Choose the character set according to the source database: use the same character set on the destination as on the source. If a destination column is too short, increase its length manually. Alternatively, when choosing the migration options, set the character length mapping to 2 so that string columns on the destination are automatically widened to twice their length.
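If the DDL is edited by hand instead of relying on the migration option, the same doubling can be applied to the script. One common reason for truncation is that MySQL declares VARCHAR lengths in characters, while a DM database with byte-length semantics counts bytes. Multi-byte text such as Chinese in UTF-8 therefore needs more room. That byte-semantics setting is an assumption about your DM instance. `widen_char_columns` is a hypothetical helper.

```python
import re

# Character types with an explicit length, e.g. varchar(50), VARCHAR2(100), char(10).
CHAR_LEN_RE = re.compile(r"\b(varchar2|varchar|char)\s*\(\s*(\d+)\s*\)", re.IGNORECASE)


def widen_char_columns(ddl: str, ratio: int = 2) -> str:
    """Multiply every declared character length by ratio (default 2)."""
    return CHAR_LEN_RE.sub(lambda m: f"{m.group(1)}({int(m.group(2)) * ratio})", ddl)


print(widen_char_columns("name varchar(50), code CHAR(10)"))
# name varchar(100), code CHAR(20)
```

Widening columns makes rows longer, so re-check the record-length limit from the previous item afterwards.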

5. Unique constraint violation

This happens when a table has a unique or primary key constraint but the data contains duplicate records. Possible causes are that the constraint was disabled in the source database or that the data was migrated more than once.
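Finding the offending rows is a GROUP BY on the key columns, for example `SELECT id, COUNT(*) FROM t GROUP BY id HAVING COUNT(*) > 1`. The same check is sketched in Python for rows that have already been exported, for example to decide what to delete before re-running the migration. The row format (a list of dicts) and the name `duplicate_keys` are assumptions.

```python
from collections import Counter


def duplicate_keys(rows: list[dict], key_columns: list[str]) -> dict:
    """Return {key_tuple: count} for key values that appear more than once."""
    counts = Counter(tuple(row[c] for c in key_columns) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 1, "name": "c"}]
print(duplicate_keys(rows, ["id"]))  # {(1,): 2}
```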

6. Referential constraint violation

This is mainly caused by foreign key constraints: the data of a child table is migrated before the data of its parent table. To avoid it, migrate in three steps: first the table structures, then the data, and finally the indexes, constraints, and similar objects.
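If the data must be loaded with foreign keys already in place, the tables have to be loaded parents first, which is a topological sort of the foreign key graph. A sketch using Python's standard `graphlib` follows. It assumes the parent tables of each table have already been read from the source schema. Self-references are dropped because they do not constrain the table order. A cycle between different tables raises `graphlib.CycleError`, and in that case the three-step approach above is the way out. `table_load_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def table_load_order(parents_of: dict[str, set[str]]) -> list[str]:
    """Order tables so every parent table comes before the tables referencing it.

    parents_of maps a table to the tables its foreign keys point at.
    """
    graph = {table: set(parents) - {table} for table, parents in parents_of.items()}
    return list(TopologicalSorter(graph).static_order())


order = table_load_order({
    "order_items": {"orders", "products"},
    "orders": {"customers"},
    "employees": {"employees"},  # self-referencing manager column
})
print(order)
```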

7. View migration order: invalid user object

This usually happens because the tables a view depends on were not migrated before the view. Migrate the tables first and the views afterwards.
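Views that select from other views need an order among themselves as well. A minimal sketch: tables come first, then views in dependency order. It assumes the tables and views referenced by each view's query are already known, for example from MySQL's `information_schema.VIEW_TABLE_USAGE` in 8.0 or by parsing the view definitions. `creation_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def creation_order(tables: list[str], view_sources: dict[str, set[str]]) -> list[str]:
    """Tables first, then views ordered so a view follows any view it selects from.

    view_sources maps each view to the tables/views named in its query.
    """
    view_graph = {
        # keep only view -> view edges; tables are already created first
        view: {src for src in sources if src in view_sources}
        for view, sources in view_sources.items()
    }
    return list(tables) + list(TopologicalSorter(view_graph).static_order())


print(creation_order(["t1", "t2"], {"v_sum": {"v_base"}, "v_base": {"t1"}}))
# ['t1', 't2', 'v_base', 'v_sum']
```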
