Is ES the only good tool for log search? You haven't used ClickHouse like this

2022-07-22 15:53:00 【java_ beautiful】

I. Background

All of Graphite Docs' applications are deployed on Kubernetes and produce a large volume of logs at all times. We mainly used SLS and ES for log storage, but ran into several problems while using them.

1) Cost

- Personally, I think SLS is an excellent product: it is fast and easy to use. However, SLS indexing is expensive

- When we tried to reduce SLS indexing costs, we found that the cloud vendor does not report the cost of individual indexes, so we could not tell which indexes were poorly designed

- ES uses a lot of storage and consumes a lot of memory

2) Generality

- If the business runs on a hybrid-cloud architecture, or is offered both as SaaS and as private deployments, SLS cannot be used everywhere

- Logs and traces require two separate cloud products, which is inconvenient

3) Precision

- SLS stores timestamps only to second precision, while our logs are timestamped to the millisecond. Even when a traceid is present, SLS cannot sort the logs of that traceid by millisecond, which makes troubleshooting harder
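To make the precision problem concrete, here is a small Python sketch with made-up log lines from a single trace. Sorting on millisecond timestamps recovers the real order; once the timestamps are truncated to whole seconds, every sort key is equal and the order can no longer be reconstructed.

```python
from datetime import datetime

# Hypothetical lines of one trace, listed in the (wrong) order a log store might return them.
lines = [
    ("2022-01-15 10:00:00.730", "step 3: write response"),
    ("2022-01-15 10:00:00.120", "step 1: receive request"),
    ("2022-01-15 10:00:00.455", "step 2: query database"),
]

def parse(ts: str) -> datetime:
    return datetime.strptime(ts, "%Y-%m-%d %H:%M:%S.%f")

# Sorting on the millisecond timestamp restores the real order of the steps.
by_ms = [msg for _, msg in sorted(lines, key=lambda line: parse(line[0]))]

# Truncated to whole seconds, all three keys are equal; Python's stable sort
# simply keeps the input order, so the real sequence is lost.
by_s = [msg for _, msg in sorted(lines, key=lambda line: parse(line[0]).replace(microsecond=0))]

print(by_ms)  # ['step 1: receive request', 'step 2: query database', 'step 3: write response']
print(by_s)   # ['step 3: write response', 'step 1: receive request', 'step 2: query database']
```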

After some research, we found that ClickHouse solves these problems well and also saves storage space, which makes it very economical, so we chose ClickHouse to store logs. As we dug deeper, however, we found that using ClickHouse for log storage involves many implementation details, and the industry had neither a good end-to-end explanation of collecting logs into ClickHouse nor a good ClickHouse log query tool for analyzing them. So we wrote a ClickHouse logging system, contributed it to the open-source community, and summarized our experience with the ClickHouse log collection architecture. First, here is the ClickHouse log query interface, so you can see what Graphite's most frontend-savvy backend programmers built.

II. Architecture diagram

We divide the log system into four parts: log collection, log transfer, log storage and log management.

- Log collection: LogCollector is deployed as a DaemonSet, with the host's log directories mounted into the LogCollector container, so LogCollector can collect application logs, system logs, Kubernetes audit logs and so on through the mounted directories

- Log transfer: different Logstores are mapped to different Kafka topics, keeping logs with different data structures separate

- Log storage: uses two kinds of ClickHouse engine tables plus a materialized view

- Log management: the open-source Mogo system, which can query logs, set log indexes, configure LogCollector, manage ClickHouse tables, set alerts and so on

Below we explain the architecture following these four parts.

III. Log collection

1. Collection methods

There are usually three ways to collect container logs in Kubernetes.

- DaemonSet collection: deploy a LogCollector on every node and mount the host's directories into its container as log directories; LogCollector reads the log content and ships it to the log center.

- Network collection: the application's logging SDK sends log content directly to the log center.

- Sidecar collection: deploy a LogCollector inside every pod; it reads only that pod's logs and ships them to the log center.

The advantages and disadvantages of the three collection methods are as follows:

We mainly use DaemonSet and network collection. DaemonSet collection is used for ingress and application logs, and network collection for big-data logs. Below we mainly introduce DaemonSet collection.

2. Log output

As described above, our DaemonSet collection can handle two kinds of logs: standard output and files.

To quote yuanb: although printing logs to Stdout is Docker's official recommendation, note that this recommendation assumes the container runs a simple application. In real business scenarios we still recommend using files wherever possible, mainly for the following reasons:

- Stdout performance: from the application's stdout to the server, a log line passes through several intermediate steps (for example with the commonly used JSON log driver): application stdout -> Docker Engine -> log driver -> serialized to JSON -> saved to a file -> agent collects the file -> parses the JSON -> uploads to the server. The whole process costs far more than writing files directly; in stress tests, 100,000 log lines per second took up an extra CPU core in the Docker Engine;

- Stdout does not support classification: all output is mixed into one stream and cannot be split by category the way files can. An application usually has AccessLog, ErrorLog, InterfaceLog (logs of calls to external interfaces), TraceLog and so on, and these logs differ in format and purpose; mixed into one stream they are hard to collect and analyze;

- Stdout only captures the output of the container's main process; programs running as daemons or forked processes cannot use stdout;

- Dumping to files supports various strategies, such as synchronous/asynchronous writes, buffer size, file rotation, compression and cleanup, which is comparatively more flexible.
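As an illustration of the classification and dump-strategy points, here is a minimal Python sketch using the standard logging module: access and error logs go to separate files, each with its own size-based rotation policy. The logger names, file names and rotation sizes are assumptions chosen for the example, not values from the quoted text.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

def make_file_logger(name: str, path: str, max_bytes: int, backups: int) -> logging.Logger:
    """Create a logger that writes only to its own size-rotated file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # do not also send these records to the root logger
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger

# A temporary directory stands in for the application's log directory.
log_dir = tempfile.mkdtemp()
access_log = make_file_logger("app.access", os.path.join(log_dir, "access.log"), 10 * 1024 * 1024, 5)
error_log = make_file_logger("app.error", os.path.join(log_dir, "error.log"), 50 * 1024 * 1024, 10)

# Each category lands in its own file and can be collected and parsed separately.
access_log.info("GET /api/doc/1 200 12ms")
error_log.error("failed to load doc 1: timeout")
```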

From this description we can see that having containers write their logs to files, and collecting those files into the log center, is the better practice. All log collection tools support collecting file logs. However, when configuring collection rules, we found that some open-source collectors, such as fluent-bit and filebeat, cannot append label information such as pod, namespace, container_name and container_id when collecting file logs in a DaemonSet deployment, and so cannot customize log collection based on these labels.

Because this label information could not be appended, we set aside DaemonSet-based file log collection for now and adopted DaemonSet-based collection of standard output.

3. Log directories

The basic information about the log directories is listed below.

Because we collect logs from standard output, according to the table above our LogCollector only needs to mount two directories: /var/log and /var/lib/docker/containers.

1) Standard output log directory

Applications' standard output logs are stored under /var/log/containers, with file names generated according to the Kubernetes log naming convention. Take nginx-ingress as an example: running ls /var/log/containers/ | grep nginx-ingress shows the name of the nginx-ingress log file.

nginx-ingress-controller-mt2wx_kube-system_nginx-ingress-controller-be3741043eca1621ec4415fd87546b1beb29480ac74ab1cdd9f52003cf4abf0a.log

Following the Kubernetes log naming convention, /var/log/containers/%{DATA:pod_name}_%{DATA:namespace}_%{GREEDYDATA:container_name}-%{DATA:container_id}.log, the nginx-ingress log file name can be parsed into:

- pod_name:nginx-ingress-controller-mt2wx

- namespace:kube-system

- container_name:nginx-ingress-controller

- container_id:be3741043eca1621ec4415fd87546b1beb29480ac74ab1cdd9f52003cf4abf0a
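The same naming rule can be expressed as a Python regular expression. This is a sketch rather than fluent-bit's own parser; it assumes pod names and namespaces contain no underscores (Kubernetes naming rules do not allow them) and that the container ID is a 64-character hex string, as in the example file name.

```python
import re

# Python equivalent of
# /var/log/containers/%{DATA:pod_name}_%{DATA:namespace}_%{GREEDYDATA:container_name}-%{DATA:container_id}.log
CONTAINER_LOG = re.compile(
    r"^(?:.*/)?"                            # optional directory prefix
    r"(?P<pod_name>[^_/]+)_"
    r"(?P<namespace>[^_/]+)_"
    r"(?P<container_name>.+)-"              # greedy: backtracks to the '-' just before the ID
    r"(?P<container_id>[0-9a-f]{64})\.log$"
)

def parse_container_log_name(path: str) -> dict:
    match = CONTAINER_LOG.match(path)
    if match is None:
        raise ValueError(f"not a Kubernetes container log file name: {path}")
    return match.groupdict()

name = ("/var/log/containers/nginx-ingress-controller-mt2wx_kube-system_"
        "nginx-ingress-controller-be3741043eca1621ec4415fd87546b1beb29480ac74ab1cdd9f52003cf4abf0a.log")
print(parse_container_log_name(name))
```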

With this information parsed from the file name, our LogCollector can easily attach pod, namespace, container_name and container_id information to each log.

2) Container information directory

Container information for applications is stored under /var/lib/docker/containers. Each folder in this directory is named after a container ID, and running cat config.v2.json in it shows the container's basic Docker information.
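A sketch of reading that file in Python follows. The key names it relies on (top-level ID, Name and Config.Labels, and Kubernetes labels such as io.kubernetes.pod.name) are assumptions about the config.v2.json layout that should be checked against your Docker version; the demo writes a trimmed, made-up file to a temporary directory so it runs anywhere.

```python
import json
import os
import tempfile

def read_container_info(containers_dir: str, container_id: str) -> dict:
    """Return a few basic fields from <containers_dir>/<container_id>/config.v2.json."""
    path = os.path.join(containers_dir, container_id, "config.v2.json")
    with open(path, encoding="utf-8") as f:
        config = json.load(f)
    # Assumed layout: labels live under Config.Labels; missing keys yield None.
    labels = (config.get("Config") or {}).get("Labels") or {}
    return {
        "id": config.get("ID"),
        "name": config.get("Name"),
        "pod_name": labels.get("io.kubernetes.pod.name"),
        "namespace": labels.get("io.kubernetes.pod.namespace"),
        "container_name": labels.get("io.kubernetes.container.name"),
    }

# Demo: a temporary directory stands in for /var/lib/docker/containers,
# holding a trimmed, made-up config.v2.json for a hypothetical container "abc123".
root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "abc123"))
sample = {
    "ID": "abc123",
    "Name": "/k8s_nginx-ingress-controller_demo",
    "Config": {"Labels": {
        "io.kubernetes.pod.name": "nginx-ingress-controller-mt2wx",
        "io.kubernetes.pod.namespace": "kube-system",
        "io.kubernetes.container.name": "nginx-ingress-controller",
    }},
}
with open(os.path.join(root, "abc123", "config.v2.json"), "w", encoding="utf-8") as f:
    json.dump(sample, f)

info = read_container_info(root, "abc123")
print(info)
```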

4. Collecting logs with LogCollector

1) Configuration

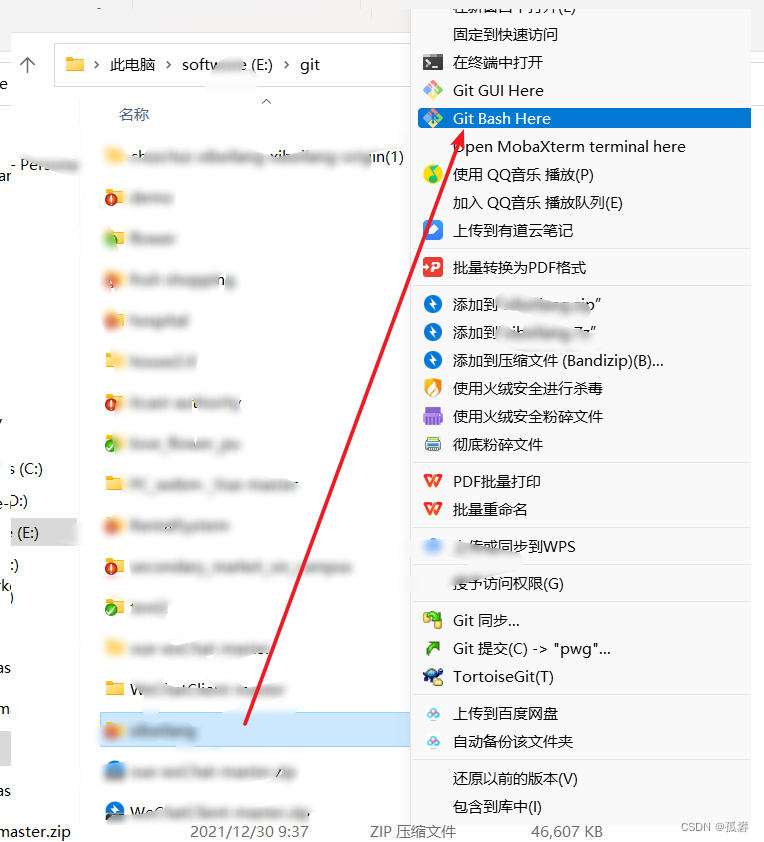

Our LogCollector is fluent-bit, a CNCF project that integrates well with cloud-native environments. In Mogo you can select a Kubernetes cluster and easily set the rules in its fluent-bit ConfigMap.

2) Data structure

fluent-bit's default collected data structure:

- @timestamp field: string or float, the time the log was collected

- log field: string, the complete content of the log

In ClickHouse, using @timestamp causes problems because of the special character @. So we map fluent-bit's collected data structure to new field names, wrapping the names of Mogo's system log indexes in underscores to avoid conflicts with business log indexes:

- _time_ field: string or float, the time the log was collected

- _log_ field: string, the complete content of the log

For example, if your log line is {"id":1}, the log actually collected by fluent-bit is {"_time_":"2022-01-15...","_log_":"{\"id\":1}"}. This structure is written directly to Kafka, and Mogo creates the ClickHouse tables based on the two fields _time_ and _log_.
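The field mapping for that example can be sketched in Python as follows. The function name is hypothetical, and _time_ is assumed here to be a float Unix timestamp; the point to notice is that _log_ stays a string holding the raw JSON log line.

```python
import json

def to_mogo_record(record: dict) -> dict:
    """Map fluent-bit's default keys (@timestamp, log) to Mogo's (_time_, _log_)."""
    return {"_time_": record["@timestamp"], "_log_": record["log"]}

# The application logged {"id":1}; fluent-bit keeps the whole line as a string.
fluent_bit_record = {"@timestamp": 1642240800.123, "log": '{"id":1}'}
kafka_value = json.dumps(to_mogo_record(fluent_bit_record), separators=(",", ":"))
print(kafka_value)  # {"_time_":1642240800.123,"_log_":"{\"id\":1}"}

# _log_ is still a JSON document in its own right, which is what the ClickHouse side parses.
print(json.loads(json.loads(kafka_value)["_log_"]))  # {'id': 1}
```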

3) Collection

To collect ingress logs, set the ingress log directory in the input section of the configuration; fluent-bit then reads the ingress logs into memory.

Next, in the filter section, rename log to _log_.

Then, in the output section, set the key for the appended collection time to _time_, and set the Kafka brokers and topics to write to; fluent-bit then writes the logs held in memory to Kafka.
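The three configuration steps can be mimicked in Python to show what happens to one ingress line on its way to Kafka. This is not fluent-bit code: the stage functions are hypothetical stand-ins for the input, filter and output sections, the input assumes Docker's json-file log format, the filter drops the other json-file keys for brevity, and the Kafka producer is left out (the output stage only yields topic/payload pairs).

```python
import json
import time
from typing import Callable, Iterable, Iterator, Tuple

def input_stage(lines: Iterable[str], now: Callable[[], float] = time.time) -> Iterator[Tuple[float, dict]]:
    """Input: read Docker json-file lines and stamp each event with its collection time."""
    for line in lines:
        yield now(), json.loads(line)

def filter_stage(events):
    """Filter: keep only the log text, renamed from `log` to `_log_` (trailing newline dropped)."""
    for ts, record in events:
        yield ts, {"_log_": record["log"].rstrip("\n")}

def output_stage(events, topic: str):
    """Output: write the event time under `_time_` and serialize each record for one Kafka topic."""
    for ts, record in events:
        yield topic, json.dumps({"_time_": ts, **record}).encode("utf-8")

# One line as Docker's json-file log driver stores it; the application itself logged JSON.
docker_line = json.dumps({
    "log": json.dumps({"status": 200, "url": "/"}) + "\n",
    "stream": "stdout",
    "time": "2022-01-15T10:00:00.123456789Z",
})

events = input_stage([docker_line], now=lambda: 1642240800.123)
messages = list(output_stage(filter_stage(events), "ingress-stdout"))
topic, payload = messages[0]
print(topic, payload)
```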

The _log_ field written to Kafka must be JSON. If your application does not write JSON logs, use a fluent-bit parser to adjust the structure of your logs: https://docs.fluentbit.io/manual/pipeline/filters/parser
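If an application writes plain-text lines, they must become JSON before they land in _log_. In fluent-bit that is the parser's job; the Python sketch below only illustrates the idea with a regular expression. The line format and the ts/level/msg field names are invented for the example.

```python
import json
import re

# Hypothetical plain-text format: "<date> <time> <LEVEL> <message>"
LINE = re.compile(r"^(?P<ts>\S+ \S+) (?P<level>[A-Z]+) (?P<msg>.*)$")

def to_json_log(line: str) -> str:
    """Convert one plain-text line into a JSON string suitable for _log_."""
    match = LINE.match(line)
    if match is None:
        # Keep unparseable lines as a message instead of dropping them.
        return json.dumps({"msg": line})
    return json.dumps(match.groupdict())

print(to_json_log("2022-01-15 10:00:00.123 INFO user 42 opened doc 7"))
```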

IV. Log transfer

Kafka is mainly used to transport logs. As mentioned above, we collect logs with fluent-bit's default data structure, and the collected log content can be inspected in a Kafka client tool.

During collection, different business logs have different fields and must be parsed differently. So in the transfer stage, each log data structure gets its own ClickHouse table, mapped to its own Kafka topic. Taking ingress as an example, we create a Kafka engine table named ingress_stdout_stream in ClickHouse and map it to the Kafka topic ingress-stdout.
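The naming used in the ingress example can be captured in a small helper. This is only a sketch of the convention as it appears in this article (topic ingress-stdout, tables ingress_stdout_stream, ingress_stdout_view and ingress_stdout in the logger database); the helper itself, and the assumption that every log type follows the same pattern, are ours rather than Mogo's.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LogstoreNames:
    kafka_topic: str
    stream_table: str
    view: str
    result_table: str

def names_for(app: str, source: str = "stdout", database: str = "logger") -> LogstoreNames:
    """Derive topic and table names for one log type, following the ingress example (hypothetical helper)."""
    base = f"{app}_{source}".replace("-", "_")  # hyphens would need quoting in ClickHouse identifiers
    return LogstoreNames(
        kafka_topic=f"{app}-{source}",
        stream_table=f"{database}.{base}_stream",
        view=f"{database}.{base}_view",
        result_table=f"{database}.{base}",
    )

print(names_for("ingress"))
```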

V. Log storage

We use three kinds of tables to store the logs of one business type.

1) Kafka engine table

Collects data from Kafka into the ClickHouse table ingress_stdout_stream.
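For reference, here is a Python helper that renders a Kafka engine table of this kind. It is a sketch under assumptions: the two columns follow the _time_/_log_ structure described earlier, _time_ is assumed to arrive as a float Unix timestamp, and the broker address, consumer group and single consumer are placeholder values; the SETTINGS names are the ClickHouse Kafka engine's own.

```python
def kafka_stream_table_ddl(table: str, brokers: str, topic: str, group: str) -> str:
    """Render a ClickHouse Kafka engine table that reads {"_time_": ..., "_log_": ...} messages."""
    return f"""CREATE TABLE {table}
(
    _time_ Float64,
    _log_ String
)
ENGINE = Kafka
SETTINGS kafka_broker_list = '{brokers}',
         kafka_topic_list = '{topic}',
         kafka_group_name = '{group}',
         kafka_format = 'JSONEachRow',
         kafka_num_consumers = 1"""

# Placeholder broker and consumer group for the ingress example.
stream_ddl = kafka_stream_table_ddl("logger.ingress_stdout_stream", "kafka:9092", "ingress-stdout", "logger_ingress_stdout")
print(stream_ddl)
```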

create table logger.ingress_stdout_stream

2) Materialized view

Reads data from the ingress_stdout_stream table, extracts fields from _log_ according to the indexes configured in Mogo, and writes them to the ingress_stdout result table.
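What the materialized view does to each incoming row can be simulated in Python: parse _log_ as JSON and copy the configured index fields into their own columns. The index configuration here (status as an integer, url as a string) is hypothetical. In ClickHouse the view would typically use functions such as JSONExtractInt and JSONExtractString, which, like this sketch, fall back to 0 or an empty string when a field is missing.

```python
import json

# Hypothetical index configuration from Mogo: field name -> (Python type, default when missing)
INDEXES = {"status": (int, 0), "url": (str, "")}

def materialize(stream_rows, indexes=INDEXES):
    """Mimic the materialized view: copy _time_/_log_ and extract indexed fields from _log_."""
    result = []
    for row in stream_rows:
        try:
            doc = json.loads(row["_log_"])
        except json.JSONDecodeError:
            doc = None
        if not isinstance(doc, dict):
            doc = {}  # non-JSON or non-object logs keep only the raw text
        out = {"_time_": row["_time_"], "_log_": row["_log_"]}
        for field, (cast, default) in indexes.items():
            try:
                out[field] = cast(doc.get(field, default))
            except (TypeError, ValueError):
                out[field] = default
        result.append(out)
    return result

rows = [
    {"_time_": 1642240800.123, "_log_": '{"status":200,"url":"/doc/1"}'},
    {"_time_": 1642240800.456, "_log_": "plain text, not JSON"},
]
for row in materialize(rows):
    print(row)
```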

CREATE MATERIALIZED VIEW logger.ingress_stdout_view TO logger.ingress_stdout AS

3) Result table

Stores the final data.
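A matching result table could be rendered like this. It is a sketch: the MergeTree engine, the daily partition, the DateTime64(3) time column (chosen to keep the millisecond precision that SLS lacked) and the index columns are our assumptions, not necessarily Mogo's actual schema.

```python
def result_table_ddl(table: str, index_columns: dict) -> str:
    """Render a MergeTree result table with one column per configured index."""
    columns = ["_time_ DateTime64(3)", "_log_ String"]
    columns += [f"{name} {ch_type}" for name, ch_type in index_columns.items()]
    column_sql = ",\n    ".join(columns)
    return (
        f"CREATE TABLE {table}\n(\n    {column_sql}\n)\n"
        "ENGINE = MergeTree\n"
        "PARTITION BY toYYYYMMDD(_time_)\n"
        "ORDER BY _time_"
    )

# Hypothetical index columns for the ingress example.
result_ddl = result_table_ddl("logger.ingress_stdout", {"status": "Int64", "url": "String"})
print(result_ddl)
```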

create table logger.ingress_stdout

VI. Process summary

1) fluent-bit collects logs into Kafka according to its rules; each log is collected into two fields:

- _time_: stores the time fluent-bit collected the log

- _log_: stores the original log

2) Through Mogo, three tables are created in ClickHouse:

- app_stdout_stream: the Kafka engine table that collects data from Kafka into ClickHouse

- app_stdout_view: the view that stores the index rules set in Mogo

- app_stdout: stores the data consumed from app_stdout_stream, parsed according to the index rules in app_stdout_view

3) Finally, the Mogo UI queries log information from the data in app_stdout.

VII. Mogo interface

1) Log query interface

2) Log collection configuration interface

The above describes how Graphite collects Kubernetes logs.
