[Deep Learning] Model comparison for sentiment analysis on the IMDB data set (4): CNN-LSTM integrated model

2022-07-20 09:17:00 【Your city has my shadow】

Preface

[Deep Learning] Model comparison for sentiment analysis on the IMDB data set (3): CNN

[Deep Learning] Model comparison for sentiment analysis on the IMDB data set (2): LSTM

[Deep Learning] Model comparison for sentiment analysis on the IMDB data set (1): RNN

Readers interested in the earlier material can refer to the articles above. This post covers the next model in the series. To combine the strength of a CNN at extracting text structure with the strength of an LSTM at extracting context, we integrate the two and use a CNN-LSTM model for the experiment. A CNN layer first extracts local features, an LSTM layer then extracts the long-distance dependencies among those local features, and the result is passed to a fully connected layer that performs the sentiment analysis and quantifies the score. Finally, the scores of the two models are averaged to obtain the final score.
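The averaging step is not shown in the code later in this post, so the following is only a minimal sketch of what it could look like, assuming each model outputs one sigmoid score per review. The function names, the sample scores, and the 0.5 threshold are all hypothetical.

```python
def average_scores(scores_a, scores_b):
    """Average two models' positive-sentiment scores element-wise."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both models must score the same reviews")
    return [(a + b) / 2 for a, b in zip(scores_a, scores_b)]


def to_labels(scores, threshold=0.5):
    """Map averaged scores to 1 (positive) or 0 (negative)."""
    return [1 if s >= threshold else 0 for s in scores]


# Hypothetical sigmoid outputs of two models for the same three reviews.
cnn_scores = [0.875, 0.375, 0.25]
lstm_scores = [0.625, 0.75, 0.125]

final_scores = average_scores(cnn_scores, lstm_scores)
final_labels = to_labels(final_scores)
print(final_scores)  # [0.75, 0.5625, 0.1875]
print(final_labels)  # [1, 1, 0]
```

The second review shows why averaging can matter: one model scores it below 0.5 and the other above, and the averaged score decides the label.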

One. What is a CNN?

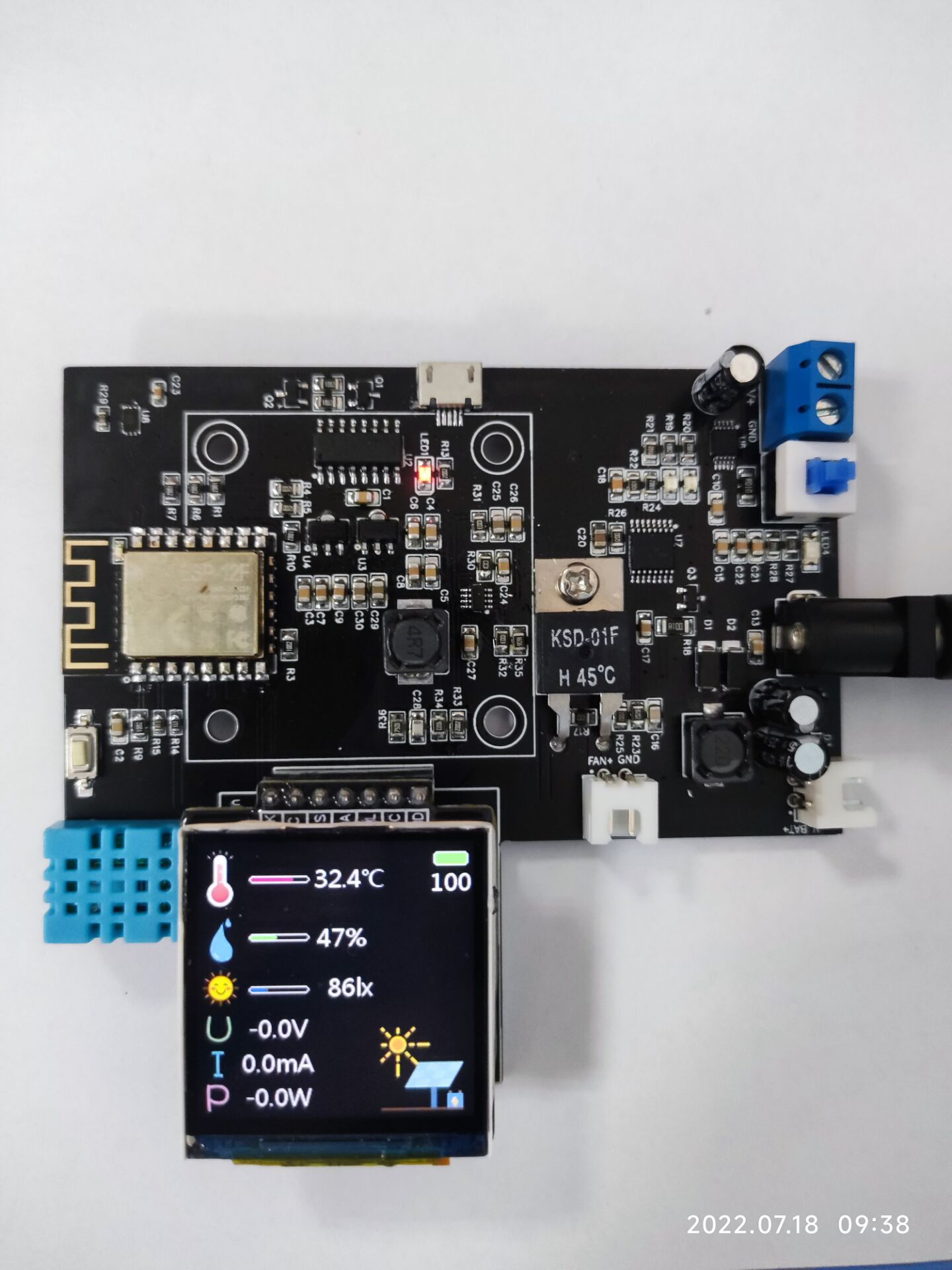

A CNN is well suited to extracting structural information from data and is therefore widely used in feature engineering. An LSTM is better suited to capturing temporal correlations and the dependencies within a text fragment; because it unrolls along the time axis, it is widely used for time-series data. This suggests a new approach to sentiment analysis: combine the two into a new integrated model, CNN-LSTM, and use it to classify text. The model structure is shown in the figure.
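To make "local features" concrete, here is a toy sketch in plain Python of what a single 1D convolution filter followed by max pooling does to a sequence. The signal and the kernel are made-up values, and the helper functions are illustrative stand-ins, not Keras code; in the real model the kernel weights are learned and the input is a sequence of word vectors.

```python
def conv1d_valid_relu(sequence, kernel, bias=0.0):
    """Slide `kernel` over `sequence` without padding, then apply ReLU."""
    width = len(kernel)
    outputs = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        activation = sum(x * w for x, w in zip(window, kernel)) + bias
        outputs.append(max(0.0, activation))
    return outputs


def max_pool1d(sequence, pool_size):
    """Keep the largest value in each non-overlapping window."""
    return [max(sequence[i:i + pool_size])
            for i in range(0, len(sequence) - pool_size + 1, pool_size)]


# A toy signal with two local peaks, and a kernel that responds to peaks.
signal = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 5.0, 2.0, 0.0]
kernel = [-1.0, 2.0, -1.0]

local_features = conv1d_valid_relu(signal, kernel)
pooled = max_pool1d(local_features, pool_size=4)
print(local_features)  # [0.0, 4.0, 0.0, 0.0, 0.0, 0.0, 6.0, 0.0]
print(pooled)          # [4.0, 6.0]
```

The pooled output is a shorter sequence in which each step summarizes one neighbourhood of the input. An LSTM placed after it reads those summaries in order, which is how it can relate features that are far apart in the original text.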

Two. Training the CNN-LSTM model

1. Data preprocessing

This step is the same as in the earlier posts. For details, see the data preprocessing part of [Deep Learning] Model comparison for sentiment analysis on the IMDB data set (1): RNN.
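The preprocessing code itself lives in article (1) and is not repeated here. As a rough sketch of the part that matters for this model, assuming each review has already been converted to a list of integer word ids, every review has to be brought to the same length before it reaches the embedding layer. The helper `pad_or_truncate` is a hypothetical name used for illustration. It pads and truncates at the front, which is also the default behaviour of Keras's `pad_sequences`, and the example uses a small length so the result is easy to read (the model in this post uses 400).

```python
def pad_or_truncate(token_ids, maxlen, pad_value=0):
    """Return `token_ids` as a list of exactly `maxlen` ids.

    Longer reviews keep only their last `maxlen` ids; shorter reviews are
    padded on the left with `pad_value`.
    """
    if len(token_ids) >= maxlen:
        return list(token_ids[len(token_ids) - maxlen:])
    return [pad_value] * (maxlen - len(token_ids)) + list(token_ids)


# Two hypothetical reviews, already encoded as word ids.
reviews = [
    [11, 12, 13],
    [21, 22, 23, 24, 25, 26],
]

padded = [pad_or_truncate(review, maxlen=5) for review in reviews]
print(padded)  # [[0, 0, 11, 12, 13], [22, 23, 24, 25, 26]]
```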

2. Building and training the CNN-LSTM model

Model structure

Set the model parameters

    pool_length = 4        # Pooling window length
    max_features = 4000    # Vocabulary size
    maxlen = 400           # Maximum sequence length
    embedding_size = 32    # Word vector dimension
    nb_filter = 32         # Number of 1D convolution kernels
    filter_length = 3      # Convolution kernel (filter) length
    hidden_dims = 256      # Hidden layer dimension (not used by the network below)
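With these settings, the sequence length at each stage of the network can be worked out by hand. The sketch below assumes the usual formulas for an unpadded ('valid') 1D convolution and for non-overlapping max pooling whose stride equals the pool length, and it uses a filter length of 3, the value in effect when the network is built. The helper names are illustrative, not Keras functions.

```python
def conv1d_valid_length(steps, kernel_size, stride=1):
    """Output length of a 1D convolution without padding."""
    return (steps - kernel_size) // stride + 1


def max_pool1d_length(steps, pool_size):
    """Output length of non-overlapping max pooling without padding."""
    return (steps - pool_size) // pool_size + 1


maxlen = 400        # input sequence length
filter_length = 3   # convolution kernel length
pool_length = 4     # pooling window length
nb_filter = 32      # number of convolution kernels (output channels)

after_conv = conv1d_valid_length(maxlen, filter_length)
after_pool = max_pool1d_length(after_conv, pool_length)

print("after convolution:", (after_conv, nb_filter))  # (398, 32)
print("after pooling:", (after_pool, nb_filter))      # (99, 32)
```

So the LSTM layer reads 99 time steps of 32 features each instead of 400 word vectors, which shortens the recurrent part of the computation considerably.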

Build the network model

    # Assumes the Keras 2 API; adjust the import path (for example
    # tensorflow.keras) to match your installation.
    from keras.models import Sequential
    from keras.layers import (Embedding, Dropout, Conv1D, MaxPooling1D,
                              LSTM, Dense, Activation)

    lstm_output_size = 32  # LSTM layer output size

    model = Sequential()
    # Word embedding layer
    model.add(Embedding(max_features, embedding_size, input_length=maxlen))
    model.add(Dropout(0.2))  # Dropout layer
    # 1D convolution layer, applied to the output of the word embedding layer
    model.add(Conv1D(filters=nb_filter,
                     kernel_size=filter_length,
                     padding='valid',
                     activation='relu',
                     strides=1))
    # Pooling layer
    model.add(MaxPooling1D(pool_size=pool_length))
    # LSTM recurrent layer
    model.add(LSTM(lstm_output_size))
    # Fully connected layer with a single neuron that outputs the sentiment score
    model.add(Dense(1))
    model.add(Activation('sigmoid'))  # Sigmoid maps the score into (0, 1)
    model.summary()  # Model overview
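`model.summary()` lists the trainable parameters of each layer, but its output is not reproduced in this post. As a cross-check, the sketch below recomputes the counts by hand from the standard parameter formulas for embedding, 1D convolution, LSTM, and dense layers, assuming every layer uses bias terms (the Keras default). The helper functions are hypothetical and written only for this check.

```python
def embedding_params(vocab_size, dim):
    """One vector of length `dim` per vocabulary entry."""
    return vocab_size * dim


def conv1d_params(kernel_size, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    """Weight matrix plus one bias per output unit."""
    return input_dim * units + units


counts = {
    "embedding": embedding_params(4000, 32),
    "conv1d": conv1d_params(3, 32, 32),
    "lstm": lstm_params(32, 32),
    "dense": dense_params(32, 1),
}
total = sum(counts.values())

for name, value in counts.items():
    print(f"{name:>10}: {value}")
print(f"{'total':>10}: {total}")
```

Dropout, pooling, and the sigmoid activation have no trainable parameters, so they do not appear in the total. Most of the parameters sit in the embedding table, which is why the vocabulary size and word vector dimension dominate the model size.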

Train the model

Define the loss function, optimizer, and evaluation metric, then start training the model.

    # Compile and train the model
    model.compile(loss='binary_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])
    train_history = model.fit(x_train, y_train,
                              batch_size=32,
                              epochs=10,
                              verbose=1,
                              validation_split=0.2)
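For readers who want to see what the loss and the metric measure, here is a plain-Python sketch of binary cross-entropy and of accuracy at a 0.5 threshold. It is an illustration of the definitions, not the Keras implementation; the function names, the clipping constant `eps`, and the sample predictions are assumptions made for the example.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose thresholded score matches the label."""
    hits = sum(1 for y, p in zip(y_true, y_pred)
               if (p > threshold) == (y == 1))
    return hits / len(y_true)


# Hypothetical labels and sigmoid outputs for four reviews.
y_true = [1, 0, 1, 0]
y_pred = [0.9, 0.2, 0.4, 0.6]

loss = binary_crossentropy(y_true, y_pred)
accuracy = binary_accuracy(y_true, y_pred)
print(f"loss = {loss:.4f}, accuracy = {accuracy:.2f}")
```

The first two predictions are confident and correct, so they add little to the loss; the last two fall on the wrong side of 0.5, which lowers the accuracy and contributes most of the loss.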

Three. Visualizing the results

    import matplotlib.pyplot as plt

    def show_train_history(train_history, train, validation):
        """Plot a training metric and its validation counterpart per epoch."""
        plt.plot(train_history.history[train])
        plt.plot(train_history.history[validation])
        plt.title('Train History')
        plt.ylabel(train)
        plt.xlabel('Epoch')
        plt.legend(['train', 'validation'], loc='upper left')
        plt.show()

    show_train_history(train_history, 'accuracy', 'val_accuracy')
    show_train_history(train_history, 'loss', 'val_loss')

    # score and acc come from model.evaluate(...) in the evaluation section;
    # run that step first.
    print('Test score:', score)
    print('Test accuracy:', acc)

Because I have since deleted my copy of the data set, no plots are included here. I will add them another time.

Four. Model prediction

For the prediction code, see the model prediction section of my earlier post:

[Deep Learning] Model comparison for sentiment analysis on the IMDB data set (3): CNN

Five. Evaluating and saving the model

Evaluate the model

Use the test set to evaluate the accuracy of the model.

    score, acc = model.evaluate(x_test, y_test,
                                batch_size=128)

Save the model

    model_json = model.to_json()
    with open("D:/final_all/1/1.json", "w") as json_file:
        json_file.write(model_json)
    model.save_weights("D:/final_all/1/2.h5")
    print("Saved model to disk")

Six. Summary

Training the CNN-LSTM model took 670 s in total. Its accuracy is 98.96% on the training set and 90.02% on the test set, with a loss of 7.5%, which makes it the best of the models compared in this series.

For a summary of the whole experiment, see my next post, which contains a comparative analysis of all the models.

边栏推荐

猜你喜欢

Static library A documents and Framework file

float position

MPPT电源控制器设计

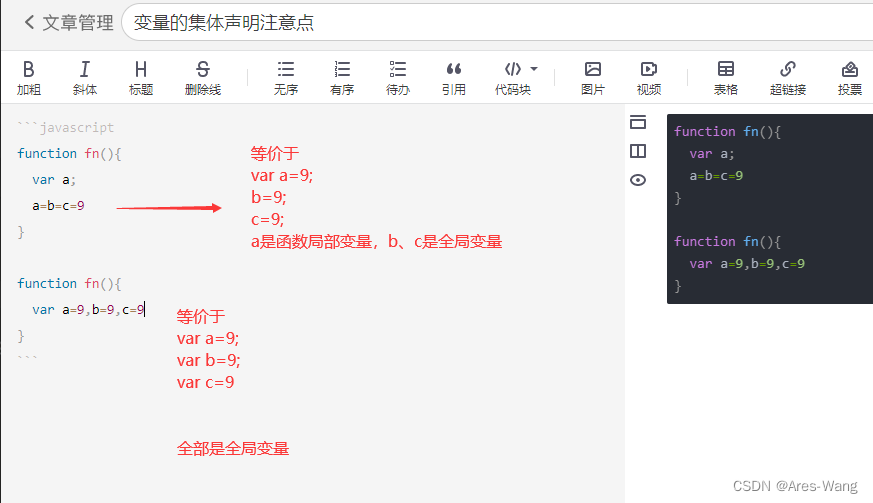

Notes on collective declaration of variables

【论文导读】Learning Bayesian Networks: The Combination of Knowledge and Statistical Data

MPPT power controller design

uniapp小程序底部弹出窗口

电源学习(1)——电源系统测试

Excel 错误含义

【深度学习】-Imdb数据集情感分析之模型对比(4)- CNN-LSTM 集成模型

随机推荐

OpenCV图像位运算

yolov3

Android Studio 执行 Kotlin 抛出 com.android.builder.errors.EvalIssueException 问题的解决方法

获取屏幕分辨率

CPU architecture compatible

Without programming, generate crud based on Microsoft MSSQL database zero code, add, delete, modify and check restful API interface

Google Chrome browser shortcut key description

The problem of data set CSV coding format in machine learning

富文本设置图片大小和字体大小

OpenCV:07图像轮廓

Asp.NET <%=%> <%#%> <% %> <%@%>

Redis data types and application scenarios

Google Chrome 浏览器快捷键说明大全

Summary of Alibaba cloud technology points

MySQL binary solves the case sensitive problem of MySQL data

项目中遇到的一些问题或异常以及处理方法

Write an Aidl

Responsive layout [responsive] and adaptive layout [adaptive], single page [spa] and multi page [MPa]

JS buffer movement

js缓冲运动