Deep learning (3): evaluation indicators of different classification models (F1, recall, P)

2022-07-20 16:11:00 【Muzichuan】

One. Introduction

When training a model, we need to feed it data it has not seen (data that was not used for training) to verify whether the trained model is suitable for the project. How do we judge this? That requires evaluation metrics. There are many of them, such as precision, F1-score, recall, accuracy, the P-R curve and the ROC curve. This article mainly covers precision, F1-score, recall and accuracy.

Two. Introduction to the evaluation metrics

Different classification metrics emphasize different things. In a product recommendation system, for example, we want to understand the customer's needs as accurately as possible and avoid pushing content that users are not interested in, so precision matters more. In disease detection, we do not want to miss any case, so recall matters more. When both need to be considered, F1-score serves as a combined reference metric.

Note: when evaluating a model, several metrics are needed to assess it from different angles. Most metrics reflect only one aspect of the model's performance, so if we use them carelessly we may not only fail to find problems in the model but also draw the wrong conclusion.

Precision, F1-score, recall and accuracy are the four most commonly used evaluation metrics for classification models. Each has its own formula.
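To make this warning concrete, here is a minimal sketch on made-up data. The 95/5 class split and the always-negative "model" are assumptions chosen purely for illustration. It shows a predictor that scores high accuracy while finding none of the positive samples.

```python
# Hypothetical imbalanced data: 95 negative samples (0) and 5 positive samples (1).
y_true = [0] * 95 + [1] * 5
# A degenerate "model" that always predicts the negative class.
y_pred = [0] * 100

# Accuracy: share of predictions that match the true label.
correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
accuracy = correct / len(y_true)

# Recall: share of the actual positives that the model found.
actual_positives = sum(1 for t in y_true if t == 1)
found_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
recall = found_positives / actual_positives

print("accuracy:%.2f" % accuracy)  # accuracy:0.95
print("recall:%.2f" % recall)      # recall:0.00
```

Judged by accuracy alone this predictor looks good, yet it misses every positive sample, which is why accuracy should be read together with the other metrics.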

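accuracy = number of correct predictions / total number of predictions = (TP + TN) / (TP + TN + FP + FN)

precision = TP / (TP + FP)

recall = TP / (TP + FN)

F1 = (2 * precision * recall) / (precision + recall)

What do TP, TN, FP and FN in these formulas mean? See below: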

True positive (TP): the number of samples that are actually positive and are predicted to be positive

True negative (TN): the number of samples that are actually negative and are predicted to be negative

False positive (FP): the number of samples that are actually negative but are predicted to be positive

False negative (FN): the number of samples that are actually positive but are predicted to be negative
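The four counts and the metrics built on them can be sketched in a few lines of plain Python. The names `confusion_counts`, `safe_div` and `binary_metrics` are hypothetical helpers introduced here for illustration, the sample labels are made up, and returning 0.0 for a zero denominator is just one common convention.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN), treating `positive` as the positive label."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1  # actually positive, predicted positive
        elif t != positive and p != positive:
            tn += 1  # actually negative, predicted negative
        elif t != positive and p == positive:
            fp += 1  # actually negative, predicted positive
        else:
            fn += 1  # actually positive, predicted negative
    return tp, tn, fp, fn


def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero (an assumed convention)."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, tn, fp, fn):
    """Return (accuracy, precision, recall, F1) from the four counts."""
    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return accuracy, precision, recall, f1


# Made-up labels: 1 = positive, 0 = negative.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
metrics = binary_metrics(*counts)
print("TP=%d TN=%d FP=%d FN=%d" % counts)  # TP=2 TN=2 FP=1 FN=1
print("accuracy:%.2f precision:%.2f recall:%.2f f1:%.2f" % metrics)
```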

Three. Binary classification

What is binary classification? It is a task in which the prediction has only two possible classes, and the two classes can be represented numerically (for example, as 0 and 1).

Suppose the requirement is to tell cats and dogs apart, and we have trained a model for it. To judge whether the model works in practice, we feed it an unseen data set whose true labels are [cat, cat, dog, dog, cat], and the model predicts [cat, dog, dog, cat, cat].

y_true = [cat, cat, dog, dog, cat]

y_pred = [cat, dog, dog, cat, cat]

Taking dog as the positive class (it is encoded as label 1 in the code below):

TP = 1

TN = 2

FP = 1

FN = 1

accuracy = (1+2)/5 = 3/5 = 0.6

precision = 1/(1+1) = 0.5

recall = 1/(1+1) = 0.5

F1 = (2*0.5*0.5)/(0.5+0.5) = 0.5

from sklearn.metrics import recall_score, f1_score, precision_score, accuracy_score

# True labels [cat, cat, dog, dog, cat], encoded as cat = 0, dog = 1

y_true = [0, 0, 1, 1, 0]

# Predicted labels [cat, dog, dog, cat, cat]

y_pred = [0, 1, 1, 0, 0]

print("accuracy:%.2f" % accuracy_score(y_true, y_pred))

print("precision:%.2f" % precision_score(y_true, y_pred))

print("recall:%.2f" % recall_score(y_true, y_pred))

print("f1-score:%.2f" % f1_score(y_true, y_pred))
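As a cross-check on the hand counts, scikit-learn's `confusion_matrix` returns the four cells directly. For binary 0/1 labels, `ravel()` yields them in the order TN, FP, FN, TP, with label 1 (dog here) as the positive class. This is a small sketch that reuses the same encoded lists as the example above.

```python
from sklearn.metrics import confusion_matrix

# Same data as above: cat = 0, dog = 1 (dog is the positive class).
y_true = [0, 0, 1, 1, 0]
y_pred = [0, 1, 1, 0, 0]

# Rows are true labels and columns are predicted labels: [[TN, FP], [FN, TP]].
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
print("TP=%d TN=%d FP=%d FN=%d" % (tp, tn, fp, fn))  # TP=1 TN=2 FP=1 FN=1

# Recompute precision and recall from the counts.
precision = tp / (tp + fp)
recall = tp / (tp + fn)
print("precision:%.2f recall:%.2f" % (precision, recall))  # precision:0.50 recall:0.50
```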

Four. Multi-class classification

Multi-class classification means the model's prediction can take more than two classes, for instance three or four. How do we compute the metrics in that case?

Suppose there are three classes. The true values are [cat, dog, dog, rat, cat, rat] and the predicted values are [rat, cat, dog, cat, cat, rat].

We can treat this as three binary classification problems, one per class, each comparing that class against all the others.

First: [cat, other]

y_true = [cat, other, other, other, cat, other]

y_pred = [other, cat, other, cat, cat, other]

TP = 1, TN = 2, FP = 2, FN = 1

precision = 1/3 = 0.33

recall = 1/2 = 0.5

F1 = (2 * precision * recall )/(precision + recall ) = 0.40

Second: [dog, other]

y_true = [other, dog, dog, other, other, other]

y_pred = [other, other, dog, other, other, other]

TP = 1, TN = 4, FP = 0, FN = 1

precision = 1/1 = 1

recall = 1/2 = 0.5

F1 = (2 * precision * recall )/(precision + recall ) = 0.67

Third: [rat, other]

y_true = [other, other, other, rat, other, rat]

y_pred = [rat, other, other, other, other, rat]

TP = 1, TN = 3, FP = 1, FN = 1

precision = 1/2 = 0.5

recall = 1/2 = 0.5

F1 = (2 * precision * recall )/(precision + recall ) = 0.5

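Averaging the three per-class results gives the macro-averaged precision, recall and F1. Accuracy is not averaged; it is computed directly over all six samples, three of which are predicted correctly:

accuracy = 3/6 = 0.5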

precision = (0.33+1+0.5)/3 = 0.61

recall = (0.5+0.5+0.5)/3 = 0.5

F1 = (0.4+0.67+0.5)/3 = 0.52
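The one-vs-rest procedure above can be sketched in plain Python. The helper name `per_class_scores` is hypothetical, and the label encoding (cat = 0, dog = 1, rat = 2) is chosen to match the scikit-learn code that follows.

```python
def per_class_scores(y_true, y_pred, label):
    """Precision, recall and F1 for `label` versus all other classes."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    # Zero denominators are mapped to 0.0 (an assumed convention).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# [cat, dog, dog, rat, cat, rat] and [rat, cat, dog, cat, cat, rat]
y_true = [0, 1, 1, 2, 0, 2]
y_pred = [2, 0, 1, 0, 0, 2]
labels = [0, 1, 2]

# One binary (class vs. other) evaluation per label.
scores = [per_class_scores(y_true, y_pred, c) for c in labels]

# Macro average: the unweighted mean of the per-class values.
macro_precision = sum(s[0] for s in scores) / len(labels)
macro_recall = sum(s[1] for s in scores) / len(labels)
macro_f1 = sum(s[2] for s in scores) / len(labels)

print("precision:%.2f" % macro_precision)  # precision:0.61
print("recall:%.2f" % macro_recall)        # recall:0.50
print("f1-score:%.2f" % macro_f1)          # f1-score:0.52
```

Because every class contributes equally to a macro average, a rare class affects the result as much as a frequent one.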

from sklearn.metrics import recall_score, f1_score, precision_score, accuracy_score

from sklearn.metrics import classification_report

# True labels [cat, dog, dog, rat, cat, rat], encoded as cat = 0, dog = 1, rat = 2

y_true = [0, 1, 1, 2, 0, 2]

# Predicted labels [rat, cat, dog, cat, cat, rat]

y_pred = [2, 0, 1, 0, 0, 2]

measure_result = classification_report(y_true, y_pred)

print('measure_result = \n', measure_result)

print("accuracy:%.2f" % accuracy_score(y_true, y_pred))

print("precision:%.2f" % precision_score(y_true, y_pred, labels=[0, 1, 2], average='macro'))

print("recall:%.2f" % recall_score(y_true, y_pred, labels=[0, 1, 2], average='macro'))

print("f1-score:%.2f" % f1_score(y_true, y_pred, labels=[0, 1, 2], average='macro'))

边栏推荐

- 京津冀综合科技服务平台的建设与思考

- Mysql database deletion failed

- Era journey of operators: plant 5.5G magic beans and climb the Digital Sky Garden

- [常用工具] 基于psutil和GPUtil获取系统状态信息

- 备赛笔记:Opencv学习:直线检测

- php 踩坑 数组访问

- C language simulation to realize character function and memory function

- How to operate international crude oil futures to open an account safely?

- 获取疫情资讯数据

- Immediate assertion and concurrent assertion in SystemVerilog

猜你喜欢

柱塞泵ParKer PVP23363R26A121

2022最新算法分析及手写代码面试解析

![[matlab project practice] Research on UAV image compression technology based on region of interest](/img/ff/b707feb293522019719a1e6b56ab81.png)

[matlab project practice] Research on UAV image compression technology based on region of interest

Obtain epidemic information and data

原来何恺明提出的MAE还是一种数据增强!上交&华为基于MAE提出掩蔽重建数据增强,优于CutMix、Cutout和Mixup!...

Bi skills - same month on month calculation

What is the difference between shallow copy and deep copy?

面试官:3 种缓存更新策略是怎样的?

In depth understanding of JUC concurrent (VIII) thread pool

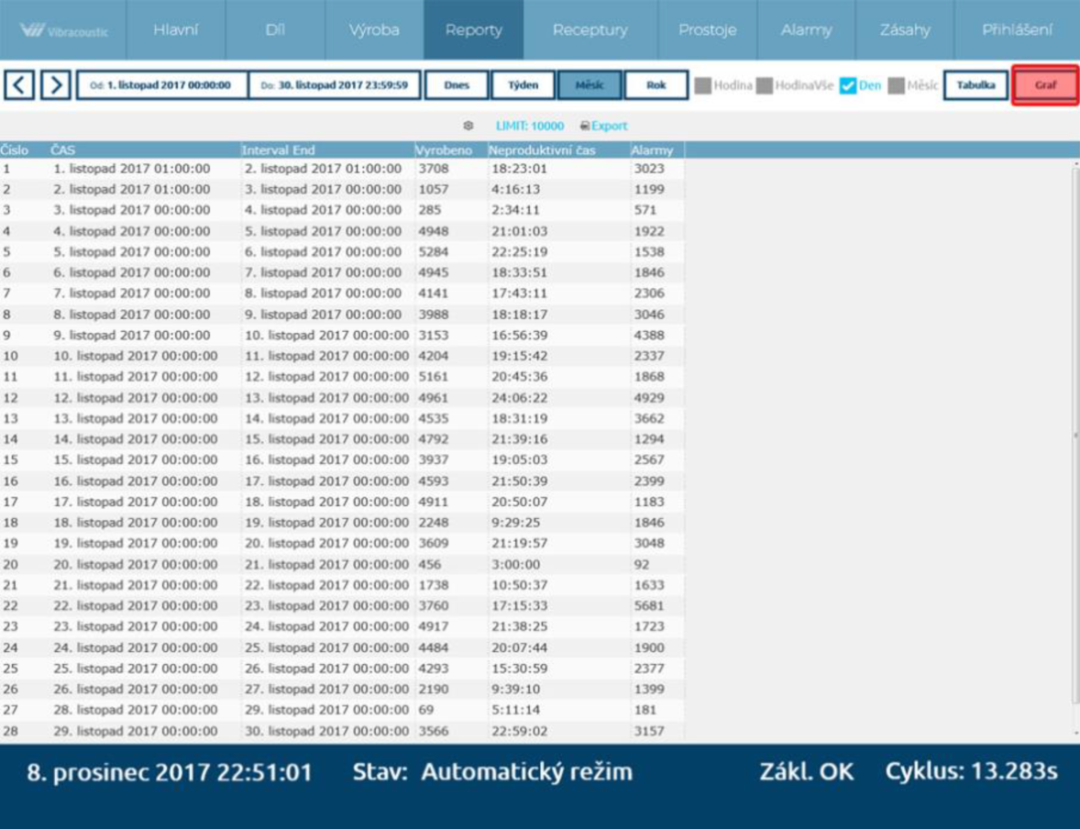

【SCADA案例】mySCADA助力Vib公司实现产线现代化升级

随机推荐

PG数据库安装timescale数据库以及备份配置

Summary of xrun parameter usage in cadence (continuously updated)

数据科学与计算智能:内涵、范式与机遇

Xcelium XRUN用户手册

【大型电商项目开发】缓存-分布式锁-缓存一致性解决-45

Test -- basic knowledge

[ERROR] COLLATION ‘utf8_ unicode_ ci‘ is not valid for CHARACTER SET ‘latin1‘

Study notes - C string delete character

C language simulation to realize character function and memory function

详解redis 中Pipeline流水线机制

Enter the first general codeless development platform - IVX

[matlab project practice] invoice recognition based on MATLAB (including GUI interface)

谷歌请印度标注员给Reddit评论数据集打标签,错误率高达30%?

Understand chisel language thoroughly 18. Detailed explanation of chisel module (V) -- Verilog module used in chisel

错误索引的解决方案

Imx8m rtl8201f Ethernet debugging

程序员不会SQL?骨灰级工程师:全等着被淘汰吧!这是必会技能!

【SCADA案例】mySCADA助力Vib公司实现产线现代化升级

dns劫持是什麼意思?常見的劫持有哪些?

What are the products of Rongyan?