
Deep Learning (6): Freezing the Parameters of Certain Layers in PyTorch

2022-07-21 03:54:00 【柚子味的羊】



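When loading a pretrained model, you sometimes want to freeze the first few layers so that their parameters do not change during training (no backpropagation is performed for them).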
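As a minimal sketch of what freezing means in practice, the toy example below marks the first layer of a small network as frozen and checks which parameters receive gradients after a backward pass. The two-layer `nn.Sequential` model is an assumption for illustration only; it is not the VGG model used in this post.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy network: model[0] plays the role of the "early" layers.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

# Freeze the first Linear layer: autograd will not compute gradients for it.
for p in model[0].parameters():
    p.requires_grad = False

loss = model(torch.randn(3, 4)).sum()
loss.backward()

# Frozen parameters keep .grad == None; trainable ones get a gradient tensor.
frozen_has_grad = [p.grad is not None for p in model[0].parameters()]
trainable_has_grad = [p.grad is not None for p in model[2].parameters()]
print("frozen layer has grads:   ", frozen_has_grad)
print("trainable layer has grads:", trainable_has_grad)
```

Because the frozen parameters never receive a gradient, an optimizer step has nothing to apply to them and their values stay at the loaded weights.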

The problem

In an earlier post, VGG16 image classification, I used the following code:

net = vgg(model_name=model_name, num_classes=1000, init_weights=True)  # no trained weights yet, so the ImageNet weights need to be loaded

net.load_state_dict(torch.load(pre_path, map_location=device))

for parameter in net.parameters():

    parameter.required_grad = False

Note: as I understand it, the statements above freeze all layers.

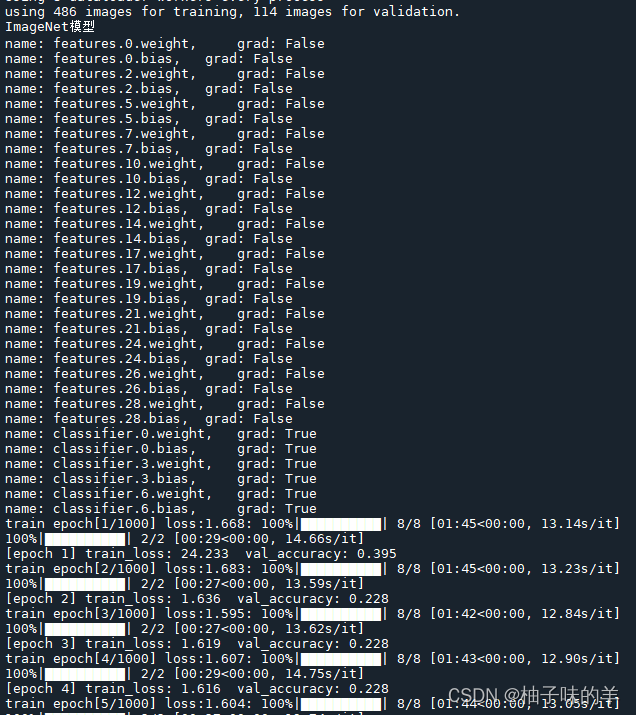

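However, something strange happened: when I printed whether each layer had been frozen, requires_grad showed True for every layer.

Doesn't that mean the whole model is still being updated by backpropagation? Then what was the point of loading the weights? To get to the bottom of it, I ran the experiments below to see what is really going on with required_grad.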

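Getting to the bottom of it

Try 1. Without required_grad

Without required_grad (the code above minus its last two lines), the default is requires_grad=True, so the loss during training should be large. Let's see how it turns out.

It is indeed large!

Try 2. Using the original form (the one shown above)

With the form shown earlier, all parameters should be frozen and the loss should not be as large as above, yet printing the grad flag of each layer still shows True. Strange indeed!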

for parameter in net.parameters():  # only with required_grad == False is there no backpropagation; just the later part is trained (fine-tuning)

    parameter.required_grad = False

for name, value in net.named_parameters():

    print('name: {0},\t grad: {1}'.format(name, value.requires_grad))

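Try 3. Freezing some layers with a no_grad list

This was my original intent. A senior labmate also advised me to freeze the earlier layers first and train the classifier at the end as a whole, then unfreeze everything and fine-tune globally. So my plan is to freeze the features layers first and train.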

no_grad = ['features.0.weight','features.0.bias',

'features.2.weight','features.2.bias',

'features.5.weight','features.5.bias',

'features.7.weight','features.7.bias',

'features.10.weight','features.10.bias',

'features.12.weight','features.12.bias',

'features.14.weight','features.14.bias',

'features.17.weight','features.17.bias',

'features.19.weight','features.19.bias',

'features.21.weight','features.21.bias',

'features.24.weight','features.24.bias',

'features.26.weight','features.26.bias',

'features.28.weight','features.28.bias'

]

for name, value in net.named_parameters():

    if name in no_grad:

        value.requires_grad = False

    else:

        value.requires_grad = True

    print('name: {0},\t grad: {1}'.format(name, value.requires_grad))
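Instead of typing every parameter name by hand, the same freeze could be sketched with a name prefix, followed by the two-stage schedule described above (train the classifier first, then unfreeze everything). `TinyNet` below is a hypothetical stand-in that only mimics the `features` / `classifier` naming of VGG; the learning rates and the dummy loss are likewise assumptions.

```python
import torch
from torch import nn

class TinyNet(nn.Module):
    """Hypothetical stand-in with VGG-style `features` and `classifier` parts."""
    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 4, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
        )
        self.classifier = nn.Linear(4, 2)

    def forward(self, x):
        return self.classifier(torch.flatten(self.features(x), 1))

torch.manual_seed(0)
net = TinyNet()

# Stage 1: freeze every parameter whose name starts with "features."
for name, param in net.named_parameters():
    param.requires_grad = not name.startswith("features.")

# Hand only the trainable parameters to the optimizer.
optimizer = torch.optim.SGD((p for p in net.parameters() if p.requires_grad), lr=0.1)

feat_before = net.features[0].weight.detach().clone()
clf_before = [p.detach().clone() for p in net.classifier.parameters()]

loss = net(torch.randn(2, 3, 8, 8)).pow(2).sum()  # dummy loss for illustration
optimizer.zero_grad()
loss.backward()
optimizer.step()

features_unchanged = torch.equal(feat_before, net.features[0].weight)
classifier_changed = any(
    not torch.equal(old, new) for old, new in zip(clf_before, net.classifier.parameters())
)
print("features unchanged:", features_unchanged)
print("classifier changed:", classifier_changed)

# Stage 2: unfreeze everything and rebuild the optimizer for global fine-tuning.
for param in net.parameters():
    param.requires_grad = True
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)
all_trainable = all(p.requires_grad for p in net.parameters())
```

Matching on the prefix keeps the freeze in step with the model definition, whereas a hand-written list has to be edited whenever the layer indices change.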

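I am still discussing the exact reason with my labmate and will update this post once it is really clear. If you know the reason, please help clear up my confusion; you are welcome to write to [email protected]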
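One likely explanation, offered as a sketch and not as a confirmed diagnosis of the original training script: the attribute PyTorch reads is `requires_grad`, while the loops in the first two attempts assign `required_grad`. Python accepts that assignment silently and just attaches a new, unused attribute to each parameter, so `requires_grad` keeps its default of True. The toy `nn.Linear` layer below is an assumption standing in for the VGG model.

```python
import torch
from torch import nn

layer = nn.Linear(3, 2)  # hypothetical stand-in for the real network

# Misspelled name: no error is raised, a new attribute is simply created.
for p in layer.parameters():
    p.required_grad = False

after_typo = [p.requires_grad for p in layer.parameters()]
has_stray_attr = all(hasattr(p, "required_grad") for p in layer.parameters())
print("after typo:   ", after_typo)      # the real flag is untouched
print("stray attr set:", has_stray_attr)

# Correct name: this is the flag autograd actually checks.
for p in layer.parameters():
    p.requires_grad = False

after_fix = [p.requires_grad for p in layer.parameters()]
print("after fix:    ", after_fix)
```

If this is indeed the cause, it would also explain why only the third attempt, which spells `requires_grad` correctly, changes the printed flags. Calling `requires_grad_(False)` on a module sets the flag on all of its parameters in one call and avoids the hand-typed attribute name.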

边栏推荐

- QT (37) -mosquitto mqtt client

- Detailed explanation of at mode principle of Seata (3)

- 手把手教你安装MySQL数据库

- Find a single dog -- repeat the number twice to find a single number

- Loop structure: while and do while structures

- 出现“TypeError: ‘str‘ object does not support item assignment”的原因

- Distributed Common architectures and service splitting

- Distributed High availability and high scalability index

- MySQL advanced (b)

- Distributed What index is high concurrency

猜你喜欢

Loop structure -- while loop and do while loop

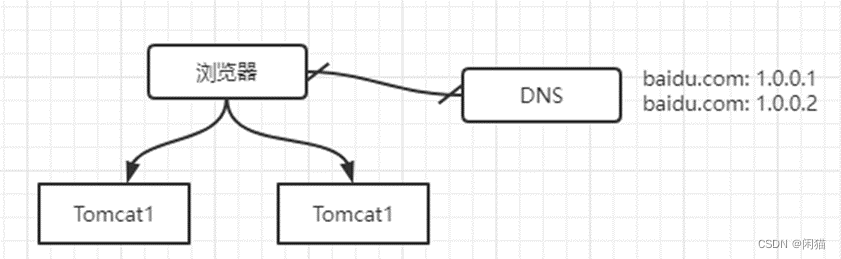

Distributed load balancing

Peripheral driver library development notes 44:ddc114 ADC driver

Distributed High availability and high scalability index

C what are the output points of DSP core resample of digital signal processing toolkit

H5 customized sharing in wechat

分布式.达到什么指标才算高并发

Distributed High concurrency concepts and design goals

分布式.高并发&高可用

05 regular expression syntax

随机推荐

建议收藏 | 可实操,数据中台选型示例

Loop structure -- while loop and do while loop

Part I - Fundamentals of C language_ 7. Pointer

Software test interview question: briefly describe what you have done in your previous work and what you are familiar with. The reference answers are as follows.

5. Customize global AuthenticationManager

函数、方法和接口的区别

The reason why "typeerror: 'STR' object does not support item assignment" appears

hcip第二天实验

Distribué. ID Builder

动态内存管理2之柔性数组

Strcspn, strchr special character verification

Sql优化(九):分页语句优化

How to realize the data structure of nested tables in SQL

DOM 事件流(事件捕获和事件冒泡)

Distributed High availability and high scalability index

C语言文件操作

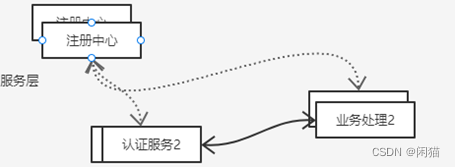

Distributed Common architectures and service splitting

Eight solutions to cross domain problems (the latest and most comprehensive)

JSP custom tag (the custom way of foreach tag and select tag)

Distributed High concurrency concepts and design goals