
Spark RDD and Spark SQL: application examples

2022-07-21 19:12:00 【lantianhonghu1】

Step 1: Prepare the environment

1. The Scala SDK installed in IDEA is version 2.13.8.

2. Create a new Maven project with the following dependencies:

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.13</artifactId>
    <version>3.3.0</version>
</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-sql -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.13</artifactId>
    <version>3.3.0</version>
    <!-- <scope>provided</scope>-->
</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-streaming -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming_2.13</artifactId>
    <version>3.3.0</version>
    <scope>provided</scope>
</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-mllib -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-mllib_2.13</artifactId>
    <version>3.3.0</version>
    <scope>provided</scope>
</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-hive -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.13</artifactId>
    <version>3.3.0</version>
    <scope>provided</scope>
</dependency>

Case 1: Read JSON data with Spark SQL

Prepare the JSON data, one object per line:

{"name":"Michael","age":23}
{"name":"Andy","age":30}
{"name":"Justin","age":19}

The code is as follows
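A minimal sketch of how these records could be read and queried, offered as an illustration rather than the author's own listing. The object name `ReadJsonSketch`, the helper `loadPeople`, the view name `people` and the `age > 20` filter are all assumptions made for this example. To keep it self-contained, the records are parsed from an in-memory `Dataset[String]`; with a file on disk, `spark.read.json("<path>")` would be used instead.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadJsonSketch {
  // Hypothetical helper: parses the three sample records from memory.
  // Each string is one JSON object, mirroring the one-object-per-line file layout.
  def loadPeople(spark: SparkSession): DataFrame = {
    import spark.implicits._
    val records = Seq(
      """{"name":"Michael","age":23}""",
      """{"name":"Andy","age":30}""",
      """{"name":"Justin","age":19}"""
    ).toDS()
    spark.read.json(records)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("ReadJsonSketch").master("local").getOrCreate()

    val people = loadPeople(spark)
    // Print the schema that Spark inferred from the JSON records
    people.printSchema()

    // Register a temporary view (name assumed) and query it with SQL
    people.createOrReplaceTempView("people")
    spark.sql("select name, age from people where age > 20 order by age").show()

    spark.stop()
  }
}
```

Spark's JSON reader expects one complete object per line by default, which is why the sample data is written that way.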

Case 2: Join multiple tables with Spark SQL

Prepare the emp (employee) table data, one JSON object per line (read below from data/sql5.txt):

{"name": "zhangsan", "age": 26, "depId": 1, "gender": "female", "salary": 20000}
{"name": "lisi", "age": 36, "depId": 2, "gender": "male", "salary": 8500}
{"name": "wangwu", "age": 23, "depId": 1, "gender": "female", "salary": 5000}
{"name": "zhaoliu", "age": 25, "depId": 3, "gender": "male", "salary": 7000}
{"name": "marry", "age": 19, "depId": 2, "gender": "male", "salary": 6600}
{"name": "Tom", "age": 36, "depId": 4, "gender": "female", "salary": 5000}
{"name": "kitty", "age": 43, "depId": 2, "gender": "female", "salary": 6000}

Prepare the dept (department) table data (read below from data/sql6.txt):

{"id": 1, "name": "Tech Department"}
{"id": 2, "name": "Fina Department"}
{"id": 3, "name": "HR Department"}

The code is as follows:

package T6

import org.apache.spark.sql.SparkSession

object demo5 {
  def main(args: Array[String]): Unit = {
    val sparkSession = SparkSession.builder().appName("demo5").master("local").getOrCreate()

    // Read the data; each line of the files is one JSON object
    val empDf = sparkSession.read.json("data/sql5.txt")
    val deptDf = sparkSession.read.json("data/sql6.txt")

    // Register the DataFrames as temporary views so they can be queried with SQL
    empDf.createOrReplaceTempView("emp")
    deptDf.createOrReplaceTempView("dept")

    // Name, department name and salary of each employee.
    // This is an inner join, so Tom (depId 4, no matching department) is not returned.
    sparkSession.sql(
      """select e.name, d.name as dept_name, e.salary
        |from emp e join dept d on e.depId = d.id""".stripMargin).show()

    // Name, department name, salary and age of the highest-paid female employee.
    // The outer query also filters on gender; otherwise a male employee
    // with the same salary would match as well.
    sparkSession.sql(
      """select e.name, d.name as dept_name, e.salary, e.age
        |from emp e join dept d on e.depId = d.id
        |where e.gender = 'female'
        |  and e.salary = (select max(salary) from emp where gender = 'female')""".stripMargin).show()

    // Average salary of female employees
    sparkSession.sql("select avg(salary) as avg_salary from emp where gender = 'female'").show()

    sparkSession.stop()
  }
}
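The same kind of join can also be written with the DataFrame API instead of a SQL string. The following is a rough sketch under stated assumptions: the object name `JoinSketch`, the helper `employeesWithDept`, its `joinType` parameter and the column alias `dept_name` are invented for illustration, and the data is a shortened in-memory copy of the sample records rather than the files used in the article.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object JoinSketch {
  // Hypothetical helper: joins employees to departments on depId = id.
  // joinType is passed straight to Dataset.join, e.g. "inner" or "left".
  def employeesWithDept(emp: DataFrame, dept: DataFrame, joinType: String = "inner"): DataFrame =
    emp.join(dept, emp("depId") === dept("id"), joinType)
      .select(emp("name"), dept("name").as("dept_name"), emp("salary"))

  // Shortened in-memory copies of the sample data (assumption: three employees, two departments)
  def sampleData(spark: SparkSession): (DataFrame, DataFrame) = {
    import spark.implicits._
    val emp = spark.read.json(Seq(
      """{"name":"zhangsan","depId":1,"salary":20000}""",
      """{"name":"lisi","depId":2,"salary":8500}""",
      """{"name":"Tom","depId":4,"salary":5000}"""
    ).toDS())
    val dept = spark.read.json(Seq(
      """{"id":1,"name":"Tech Department"}""",
      """{"id":2,"name":"Fina Department"}"""
    ).toDS())
    (emp, dept)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("JoinSketch").master("local").getOrCreate()
    val (emp, dept) = sampleData(spark)

    // Inner join: Tom has no matching department and is dropped
    employeesWithDept(emp, dept).show()
    // Left join: Tom is kept, with a null department name
    employeesWithDept(emp, dept, "left").show()

    spark.stop()
  }
}
```

Which join type is appropriate depends on whether employees without a department should appear in the result; the SQL in the article behaves like the inner variant.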

Case 3: Score totals with Spark RDD

Prepare the data (the first line is a header; the fields in the file are separated by tabs):

name            subject 1   subject 2   subject 3   subject 4
Little kara     70          70          75          60
Little black    80          80          70          40
Xiaoxian        60          70          80          45
Xiaohao         70          80          90          100

1. Compute the total score of each student across all subjects.

2. Compute the total score of each subject across all students.

The code is as follows:

package com.T5

import org.apache.spark.{SparkConf, SparkContext}

object demo5 {
  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf().setAppName("demo5").setMaster("local")
    val sc = new SparkContext(sparkConf)

    // Path of the tab-separated input file
    val filepath = "data/aa.txt"
    val lines = sc.textFile(filepath)

    // The first line is the header; drop it, then split each remaining line on tabs
    val header = lines.first()
    val rows = lines.filter(_ != header).map(_.split("\t")).cache()

    // 1. Total score of each student: (name, sum of the four subjects)
    rows.map(arr => (arr(0), arr.slice(1, 5).map(_.toInt).sum))
      .collect()
      .foreach(println)

    // 2. Total score of each subject.
    // Variables captured by a foreach closure are copied to the executors,
    // so accumulating into driver-side vars is not reliable, and a hard-coded
    // row count breaks as soon as the file changes. reduce returns the
    // element-wise sum to the driver instead.
    val (km1, km2, km3, km4) = rows
      .map(arr => (arr(1).toInt, arr(2).toInt, arr(3).toInt, arr(4).toInt))
      .reduce((a, b) => (a._1 + b._1, a._2 + b._2, a._3 + b._3, a._4 + b._4))

    println(Seq("subject 1" -> km1, "subject 2" -> km2, "subject 3" -> km3, "subject 4" -> km4).mkString(", "))

    sc.stop()
  }
}
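To sanity-check the Spark output, the same two totals can be computed on plain Scala collections without a cluster. This is only an illustrative sketch: the object name `ScoreTotalsSketch` and both helper functions are assumptions, and the data is an in-memory copy of the score table above.

```scala
object ScoreTotalsSketch {
  // In-memory copy of the score table: (name, scores for subjects 1 to 4)
  val scores: Seq[(String, Seq[Int])] = Seq(
    ("Little kara", Seq(70, 70, 75, 60)),
    ("Little black", Seq(80, 80, 70, 40)),
    ("Xiaoxian", Seq(60, 70, 80, 45)),
    ("Xiaohao", Seq(70, 80, 90, 100))
  )

  // Total per student: sum across each row
  def totalsPerStudent(rows: Seq[(String, Seq[Int])]): Seq[(String, Int)] =
    rows.map { case (name, s) => (name, s.sum) }

  // Total per subject: transpose rows into columns, then sum each column
  def totalsPerSubject(rows: Seq[(String, Seq[Int])]): Seq[Int] =
    rows.map(_._2).transpose.map(_.sum)

  def main(args: Array[String]): Unit = {
    totalsPerStudent(scores).foreach(println)
    println(totalsPerSubject(scores))
  }
}
```

For the sample table, the per-student totals are 275, 270, 255 and 340, and the per-subject totals are 280, 300, 315 and 245; both sets add up to 1140, which is a quick consistency check.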

边栏推荐

- [OBS] release signed construction based on cmake

- Ardunio development - use of soil sensors

- swift和OC对比,Swift的核心思想:面向协议编程

- Emuelec Development Notes

- Banks are not Tangseng meat banks are solid barriers to financial security.

- Niu Ke net brushes questions

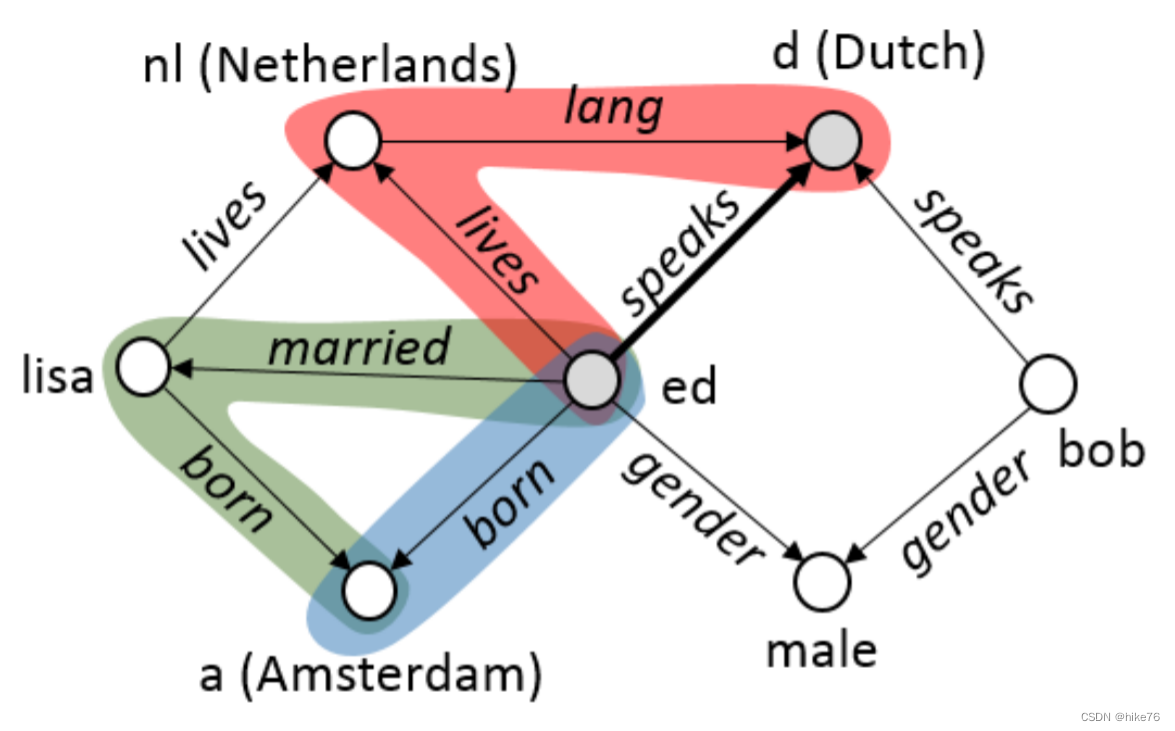

- 论文翻译解读:Logmap:Logic-based and scalable ontology matching

- 软件测试面试题:如何测试一个纸杯?

- Brush notes - find

- C language constants and variables

猜你喜欢

TCP协议

论文翻译解读:Anytime Bottom-Up Rule Learning for Knowledge Graph Completion【AnyBURL】

Obtain the screenshot of the front panel through programming in LabVIEW

Don't be silly to distinguish these kinds of storage volumes of kubernetes

Ardunio开发——I2C协议通讯——控制2x16LCD

High quality WordPress download station template 5play theme source code

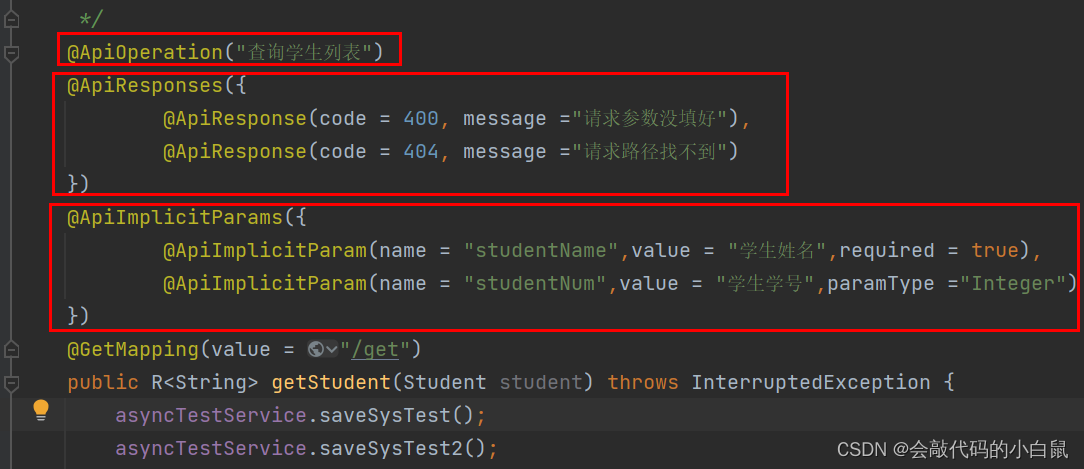

According to the framework's swagger interface document

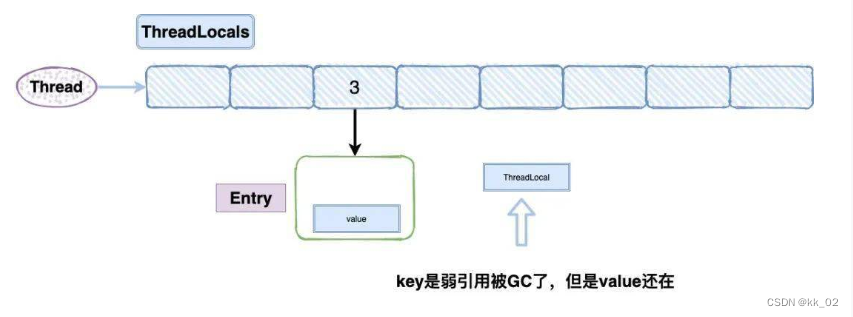

ThreadLocal

Common business interview questions for data analysis

NFS service configuration

随机推荐

Leetcode - prefix sum and difference

软件测试面试题:软件测试的策略是什么?

软件测试面试题:如何测试一个纸杯?

V853 development board hardware data - risc-v core e907 user manual

Essential tools for streamlit Data Science

Rk3128 speaker SPK and headset HP sound size debugging

Streamlit 数据科学必备工具

用VIM正则表达式进行批量替换的小练习

FTP服务配置

4、security之自定义数据源

Ardunio开发——I2C协议通讯——控制2x16LCD

uniapp动态设置占位区高度(配合createSelectorQuery方法)

软件测试面试题:你自认为测试的优势在哪里?

软件测试面试题:你对测试最大的兴趣在哪里?为什么?

2022软件测试技能 Jmeter+Ant+Jenkins持续集成并生成测试报告教程

数据分析常见的业务面试题

教程篇(7.0) 03. FortiClient EMS配置和管理 * FortiClient EMS * Fortinet 网络安全专家 NSE 5

【信息检索】信息检索系统实现

[QNX hypervisor 2.2 user manual]8.6 interrupt

C语言进阶(十四) - 文件管理