
Dense passage retrieval for open domain question answering notes

2022-07-22 20:29:00 【cyz0202】

DPR notes

A- Background

- DPR retrieves relevant documents for QA. It addresses some weaknesses of traditional retrieval methods such as BM25: BM25 relies heavily on token or phrase matching, whereas DPR improves semantic matching. For example, for the QA pair below, BM25 has difficulty retrieving the answer (the part highlighted in yellow):

- question:“Who is the bad guy in lord of the rings?”

- answer: “Sala Baker is best known for portraying the villain Sauron in the Lord of the Rings trilogy.”

- ORQA (Lee et al., 2019) was the first to show that, for open-domain QA, dense retrieval can outperform TF-IDF/BM25. However, ORQA has drawbacks, including:

- Its pre-training is very computationally expensive;

- It uses ordinary sentences as surrogates for questions during pre-training, and it is not clear that this is a good substitute; (?)

- The context encoder (the encoder for retrieved passages) is not fine-tuned on (question, answer) pairs, so the retrieval results may be suboptimal;

B- Method

- B1- Architecture design:

- Dual encoders: the question $q$ and the context (passage) $p$ are encoded separately by $E_Q$ and $E_P$. Both encoders are BERT (base, uncased), and the output at the [CLS] token is used as the dense representation (dr);

- The dot product of the two dense representations (below) measures question-passage similarity. Other metrics are possible, but this choice lets the representations of a large number of passages be precomputed, avoiding expensive online computation. Experiments show that the dot product is simple and performs well;

$$\mathrm{sim}(q,p) = E_Q(q)^\top E_P(p)$$

- Inference: first use $E_P$ to compute the dense representations of all passages and index them offline with FAISS (Johnson et al., 2017), an open-source library for efficient similarity search and clustering of dense vectors that easily handles billions of vectors. Given a question $q$, compute $v_q = E_Q(q)$ and use the index to retrieve the top $k$ passages in the corpus closest to $v_q$;
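The inference path can be sketched as follows. This is a minimal sketch, assuming the passage and question vectors have already been produced by $E_P$ and $E_Q$ (the toy 3-dimensional vectors stand in for BERT [CLS] outputs). It swaps FAISS for an exact brute-force inner-product search in NumPy, and the function names `build_index` and `search` are hypothetical.

```python
import numpy as np


def build_index(passage_embs) -> np.ndarray:
    """Offline step: stack the precomputed E_P(p) vectors into a (num_passages, dim) matrix.

    Stand-in for a FAISS flat inner-product index (exact search, no compression).
    """
    return np.asarray(passage_embs, dtype=np.float32)


def search(index: np.ndarray, v_q: np.ndarray, k: int):
    """Online step: return (ids, scores) of the top-k passages by sim(q, p) = v_q . E_P(p)."""
    scores = index @ v_q                          # one dot product per passage
    k = min(k, scores.shape[0])
    top = np.argpartition(-scores, k - 1)[:k]     # the k best ids, in arbitrary order
    top = top[np.argsort(-scores[top])]           # order them by descending score
    return top, scores[top]


# Toy usage: 4 passages with 3-dimensional "embeddings" and one question vector.
index = build_index([[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.9, 0.1, 0.0],
                     [0.0, 0.0, 1.0]])
v_q = np.array([1.0, 0.0, 0.0])   # stands in for E_Q(q)
ids, scores = search(index, v_q, k=2)
print(ids, scores)
```

A real deployment would replace the matrix product with a FAISS index; the exact search here only makes the scoring rule (one dot product per precomputed passage vector) explicit.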

- B2- Experimental design:

- Training: the problem is essentially metric learning. Relevant $(q, p)$ pairs should have a small distance (high similarity), and irrelevant pairs should have as low a similarity as possible. The loss is the negative log-likelihood of the positive passage, given one positive $p_i^+$ and $n$ negatives $p_{i,j}^-$:

$$L(q_i, p_i^+, p_{i,1}^-, \ldots, p_{i,n}^-) = -\log \frac{e^{\mathrm{sim}(q_i, p_i^+)}}{e^{\mathrm{sim}(q_i, p_i^+)} + \sum_{j=1}^{n} e^{\mathrm{sim}(q_i, p_{i,j}^-)}}$$

- Positive passage: any one relevant passage. There are three schemes for obtaining the $n$ negative passages; experiments show the best choice is $n-1$ Gold negatives plus 1 BM25 negative. The Gold scheme also effectively increases the number of training examples;

- Random: $n$ passages sampled at random from the corpus;

- BM25: the top $n$ passages returned by BM25 that do not contain the answer;

- Gold: $n$ passages chosen at random from the positive passages paired with other questions in the training set;
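For a single question, the training loss is a softmax over one positive and $n$ negative similarity scores. A minimal NumPy sketch, assuming the similarities are already computed; `dpr_nll` and the helper `bm25_negatives` (with a case-insensitive substring test standing in for "contains the answer") are hypothetical names and simplifications, not the paper's code.

```python
import numpy as np


def dpr_nll(sim_pos, sim_negs) -> float:
    """Loss for one question: -log(e^{s+} / (e^{s+} + sum_j e^{s-_j})).

    sim_pos is sim(q, p+); sim_negs holds sim(q, p-_j) for the n negatives.
    Uses a numerically stable log-sum-exp.
    """
    logits = np.concatenate(([float(sim_pos)], np.asarray(sim_negs, dtype=np.float64)))
    m = logits.max()
    log_z = m + np.log(np.exp(logits - m).sum())
    return float(log_z - sim_pos)


def bm25_negatives(bm25_ranked, answers, n):
    """Hypothetical helper for the "BM25" scheme: the top-n BM25 passages that
    contain none of the answer strings (case-insensitive substring test)."""
    no_answer = [p for p in bm25_ranked
                 if not any(a.lower() in p.lower() for a in answers)]
    return no_answer[:n]


# A well-separated positive gives a small loss; a tie with the negatives gives log(n + 1).
print(dpr_nll(5.0, [0.0, 0.0, 0.0]))
print(dpr_nll(0.0, [0.0, 0.0, 0.0]))   # equals log(4)
```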

- Data preprocessing

- For Wikipedia, remove semi-structured data such as tables, info-boxes, and lists, as well as disambiguation pages;

- Split each article into multiple 100-word blocks, which serve as the passages;

- Prefix each passage with the title of its Wikipedia article, followed by a [SEP] token;

- For datasets that do not provide passages, such as TREC, WebQuestions, and TriviaQA, use BM25 to retrieve the top 100 passages and take the highest-scoring passage that contains the answer as the positive passage; if none of them contains the answer, discard the question;
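The passage-building and positive-selection steps can be sketched as below. This assumes plain whitespace word splitting, a literal `" [SEP] "` string between title and text (a real BERT pipeline would insert the separator through the tokenizer), and a case-insensitive substring test as the "contains the answer" check. Both functions are hypothetical illustrations.

```python
from typing import List, Optional


def split_into_passages(title: str, text: str, words_per_passage: int = 100) -> List[str]:
    """Cut an article into disjoint blocks of up to `words_per_passage` words,
    each prefixed with the article title and a [SEP] marker."""
    words = text.split()
    passages = []
    for start in range(0, len(words), words_per_passage):
        block = " ".join(words[start:start + words_per_passage])
        passages.append(f"{title} [SEP] {block}")
    return passages


def pick_positive(bm25_top: List[str], answers: List[str], depth: int = 100) -> Optional[str]:
    """Return the highest-ranked BM25 passage that contains an answer string,
    or None, in which case the question is discarded."""
    for passage in bm25_top[:depth]:
        text = passage.lower()
        if any(answer.lower() in text for answer in answers):
            return passage
    return None


article = " ".join(f"w{i}" for i in range(250))
passages = split_into_passages("Some Title", article)
print(len(passages))          # 3 blocks: 100 + 100 + 50 words
```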

- The processed experimental datasets are as follows:


- B3- Experimental results

- Models are trained on each dataset individually (Single) and on a combination of the datasets (Multi, excluding SQuAD), and evaluated by retrieval accuracy. BM25+DPR scores passages with $\mathrm{BM25}(q,p) + 1.1 \cdot \mathrm{sim}(q,p)$. The results are shown in the figure below: DPR improves retrieval, and multi-dataset training beats single-dataset training. On SQuAD, BM25 does better because questions and passages share many tokens;
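The hybrid BM25+DPR score is a simple linear combination. A sketch, assuming both scores have already been computed for every candidate passage (how the candidate set is formed is left out here); `hybrid_rerank` is a hypothetical name, and λ = 1.1 is the weight from the note.

```python
from typing import Dict, List, Tuple


def hybrid_rerank(bm25: Dict[str, float], dense: Dict[str, float],
                  lam: float = 1.1) -> List[Tuple[str, float]]:
    """Rank candidate passage ids by BM25(q, p) + lam * sim(q, p).

    Both dicts must score the same candidate ids (an assumption of this sketch).
    """
    mismatch = set(bm25) ^ set(dense)
    if mismatch:
        raise ValueError(f"missing one of the two scores for: {sorted(mismatch)}")
    combined = {pid: bm25[pid] + lam * dense[pid] for pid in bm25}
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# p1 wins on BM25 alone, but p2's higher dense similarity lifts it to the top.
ranking = hybrid_rerank({"p1": 10.0, "p2": 8.0}, {"p1": 0.0, "p2": 2.0})
print(ranking)
```

Note that BM25 scores and dot products live on different scales, which is why a tuned weight is needed at all.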

- In-batch negative training: experiments on how training examples are constructed. "#N 31+32" means that with batch size 32, each training question gets, besides its 31 in-batch negatives, 32 additional hard negatives. These hard negatives come from BM25: for each training question, the passage most relevant to it that does not contain the answer. The results show that in-batch negatives beat random negatives, and adding a small number of hard negatives improves results further;
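In-batch negatives fall out of a single matrix product: with a batch of $B$ questions and their $B$ positives, every other question's positive serves as a negative, and stacking one BM25 hard negative per question adds $B$ more columns, giving the "31+32" setting when $B = 32$. A minimal NumPy sketch of this loss, assuming precomputed embeddings; `in_batch_loss` is a hypothetical name.

```python
from typing import Optional

import numpy as np


def in_batch_loss(q: np.ndarray, p_pos: np.ndarray, p_hard: Optional[np.ndarray] = None) -> float:
    """Mean DPR loss over a batch using in-batch negatives.

    q:      (B, d) question embeddings E_Q(q_i)
    p_pos:  (B, d) positive passage embeddings; row i is the positive for q_i
    p_hard: optional (B, d) BM25 hard negatives, one per question, shared by the whole batch
    Each question scores all B positives (1 positive + B-1 in-batch negatives)
    plus all B hard negatives if given, e.g. "31+32" for B = 32.
    """
    passages = p_pos if p_hard is None else np.concatenate([p_pos, p_hard], axis=0)
    scores = q @ passages.T                                   # (B, B) or (B, 2B)
    m = scores.max(axis=1, keepdims=True)
    log_z = m[:, 0] + np.log(np.exp(scores - m).sum(axis=1))  # row-wise log-sum-exp
    batch = np.arange(q.shape[0])
    pos = scores[batch, batch]                                # sim(q_i, p_i+) on the diagonal
    return float(np.mean(log_z - pos))


rng = np.random.default_rng(0)
q, p_pos, p_hard = (rng.normal(size=(32, 8)) for _ in range(3))
print(in_batch_loss(q, p_pos), in_batch_loss(q, p_pos, p_hard))
```

The design point is reuse: the $B$ passage embeddings are computed once per batch, yet each question sees $B-1$ negatives for free.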

- Cross-dataset generalization: experiments show that DPR generalizes better than BM25;

- Run-time efficiency:

- DPR must precompute dense embeddings for all passages and index them with FAISS. This is time-consuming, but it is a one-time cost that can be parallelized offline; BM25/Lucene indexes passages much faster;

- Once the embeddings and index are built, FAISS makes DPR's retrieval very efficient: roughly 40 times faster than BM25/Lucene;

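- Applying DPR to end-to-end QA

- Besides DPR, add a model $P_{\text{selected}}$ that scores the candidate passages, and a model $P_{\text{span}}$ (concretely, $P_{\text{start}}$/$P_{\text{end}}$) that extracts the answer span from each passage;

- The author says $P_{\text{selected}}$ captures cross attention between $(q, p)$, but I don't see how it is computed. $P_{\text{selected}}$, $P_{\text{start}}$, and $P_{\text{end}}$ are computed as follows, where $\mathbf{P}_i \in \mathbb{R}^{L \times h}$ is the BERT representation of the $i$-th of $k$ passages (length $L$, hidden size $h$) and $\hat{\mathbf{P}} = [\mathbf{P}_1^{[CLS]}, \ldots, \mathbf{P}_k^{[CLS]}] \in \mathbb{R}^{h \times k}$:

$$P_{\text{start},i}(s) = \mathrm{softmax}(\mathbf{P}_i \mathbf{w}_{\text{start}})_s,\qquad P_{\text{end},i}(t) = \mathrm{softmax}(\mathbf{P}_i \mathbf{w}_{\text{end}})_t,\qquad P_{\text{selected}}(i) = \mathrm{softmax}(\hat{\mathbf{P}}^\top \mathbf{w}_{\text{selected}})_i$$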

- The training setup is as follows. Note that the optimization objective combines the log-likelihood of the answer span with the log-likelihood of the positive passage being selected. A positive passage may also contain the answer more than once; the paper handles this by maximizing the marginal log-likelihood of all correct answer spans in that passage;
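The reader's scoring and training objective can be sketched as below, following the DPR paper's softmax definitions of $P_{\text{start}}$, $P_{\text{end}}$, and $P_{\text{selected}}$. Assumptions, all mine: token 0 of each encoded (question, passage) input is [CLS]; a span's probability is $P_{\text{start}}(s) \cdot P_{\text{end}}(t)$; and the "marginal log-likelihood" sums those products over the gold spans, which is one reading of the paper's wording. The function names are hypothetical and the weights are random, not a trained model.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def reader_probs(P: np.ndarray, w_start: np.ndarray, w_end: np.ndarray, w_selected: np.ndarray):
    """P: (k, L, h) BERT outputs for k (question, passage) inputs; token 0 is [CLS].

    Returns P_selected (k,), P_start (k, L), P_end (k, L).
    """
    p_start = softmax(P @ w_start, axis=1)                 # over token positions in each passage
    p_end = softmax(P @ w_end, axis=1)
    p_selected = softmax(P[:, 0, :] @ w_selected, axis=0)  # over the k passages, from [CLS] vectors
    return p_selected, p_start, p_end


def reader_loss(p_selected, p_start, p_end, pos_idx: int, gold_spans) -> float:
    """-log P_selected(pos) - log sum over gold (s, t) spans in the positive passage
    of P_start(s) * P_end(t)."""
    span_mass = sum(p_start[pos_idx, s] * p_end[pos_idx, t] for s, t in gold_spans)
    return float(-np.log(p_selected[pos_idx]) - np.log(span_mass))


# Toy shapes: k = 2 passages, L = 3 tokens, h = 4 hidden units, random (untrained) weights.
rng = np.random.default_rng(0)
P = rng.normal(size=(2, 3, 4))
w_start, w_end, w_selected = rng.normal(size=(3, 4))
probs = reader_probs(P, w_start, w_end, w_selected)
print(reader_loss(*probs, pos_idx=0, gold_spans=[(1, 2)]))
```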

- The experimental results are as follows:


C- Summary

- The paper proposes DPR. Experiments show that it captures more semantics than traditional retrieval methods such as BM25 and clearly improves retrieval in many settings (on SQuAD it is worse than BM25); it also brings significant improvements to end-to-end QA;

- DPR's design ideas and techniques:

- DPR uses a dual encoder fine-tuned from a pre-trained model, making full use of the knowledge stored in the pre-trained model;

- It uses the dot product and FAISS to preprocess massive numbers of passages, greatly speeding up online computation and balancing effectiveness against speed (precomputing embeddings for massive numbers of passages is a difficult point);

- Training uses in-batch negatives and adds harder negatives from BM25, which significantly improves the model;

- In the E2E-QA experiment above, everything except DPR is relatively simple. The paper Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (which shares authors with DPR) improves this part with a trainable seq2seq model and obtains a better open-QA system;

边栏推荐

- 机器学习入门:线性回归-1

- How to solve the blue screen problem in win8.1 system and how to solve the malicious hijacking of IE home page?

- 深入理解mmap函数

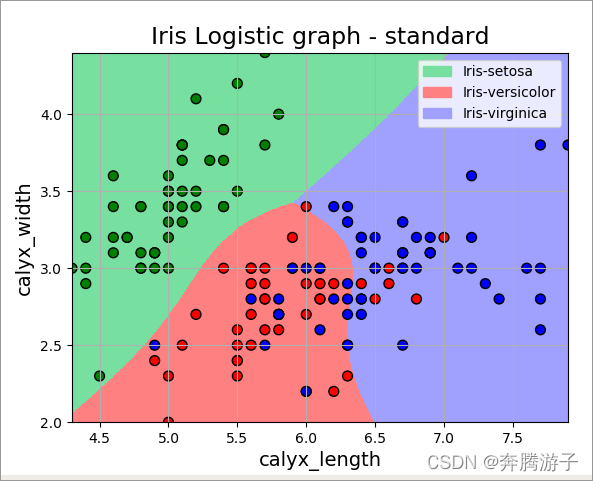

- 机器学习入门:逻辑回归-2

- Introduction to machine learning: Logistic regression-2

- Common tools for data development - regular sending of query results email

- 网页被劫持了该怎么办?dns被劫持如何修复?网页劫持介绍

- dns 劫持什么意思、dns 劫持原理及几种解决方法

- 达梦数据库安装使用避坑指南

- Leetcode0002——Add Two Numbers——Linked List

猜你喜欢

她力量系列八丨陈丹琦:我希望女生能够得到更多的机会,男生和女生之间的gap会逐渐不存在的

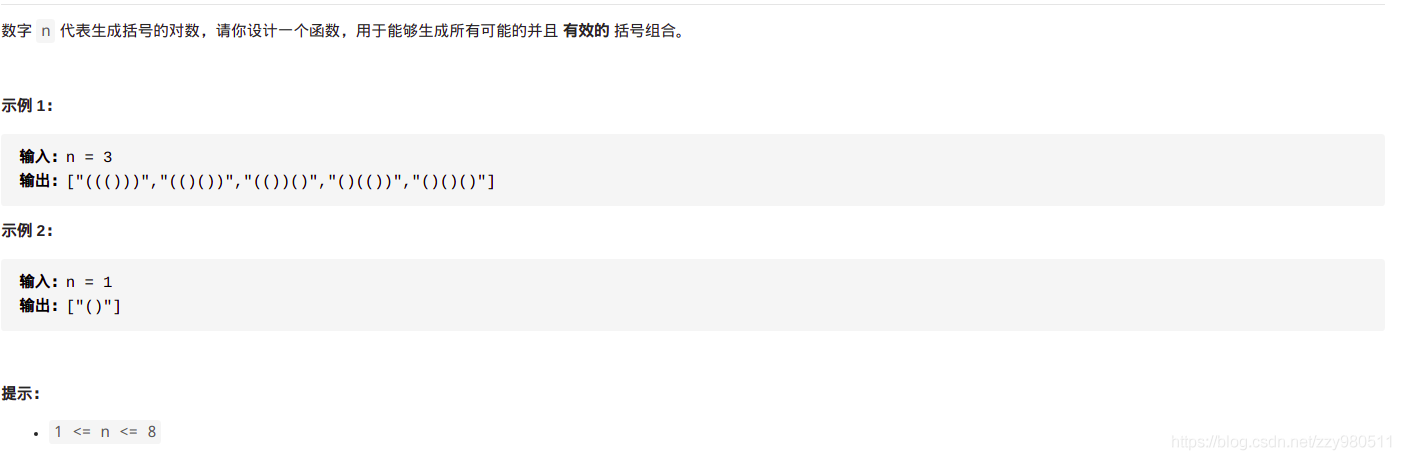

Leetcode0022 - bracket generation - DFS

专访Women in AI学者黄惠:绘图形之梦,寻突破之门

JDBC异常SQLException的捕获与处理

mysql引擎

CSDN博客去除上传的图片水印

Introduction to machine learning: Logistic regression-2

基于canny的亚像素的Devernay Algorithm

Deep understanding of MMAP function

What are the common ways for websites to be hacked and hijacked? What are the DNS hijacking tools?

随机推荐

Leetcode0002——Add Two Numbers——Linked List

CentOS安装mysql数据库

Unix C语言POSIX的线程创建、获取线程ID、汇合线程、分离线程、终止线程、线程的比较

RP file Chrome browser view plug-in

LeetCode103——zigzagLevelOrder of binary tree

Her power series seven LAN Yanyan: ideal warm 10-year scientific research road, women can be gentle, more confident and professional | women's Day Special

Her power series II UCLA Li Jingyi: the last thing women need to do is "doubt themselves"

她力量系列三丨把握当下,坚持热爱,与食物图像识别结缘的科研之路

Spark data search

LeetCode53——Maximum Subarray——3 different methods

《因果学习周刊》第10期:ICLR2022中最新Causal Discovery相关论文介绍

本地镜像发布到私有库

YOLO v1、v2、v3

How to deal with DNS hijacked? Five ways to deal with it

She studied in the fourth series of strength, changed her tutor twice in six years, and with a little "stubbornness", Yu Zhou became one of the pioneers of social Chatbot

Leetcode0002——Add Two Numbers——Linked List

redis集群搭建

进程的互斥、同步

Common tools for data development - regular sending of query results email

网站莫名跳转,从百度谈什么是网站劫持?百度快照劫持怎么解决