当前位置:网站首页>MySQL imports 300million data

MySQL imports 300million data

2022-07-22 21:04:00 【Bug Hu Hansan】

Preface

Some time ago , Former colleagues asked me ,Mysql Can I import it 3 Billion of data . My mind was in a daze , I'm not sure that it should be able to import ! Just import it in , The query may be too slow .

So Baidu immediately , I found that someone had done such a thing , stay Mysql Import 100 million level data above ( The article links ). According to the introduction of this article , I know the original pits .

The first thing to note : Indexes

The first thing to note is the index . When creating a table, do not index other fields except the primary key . Because the index also needs to be created , When we have too much data, we should pay attention to , Creating an index at this time will cause our data import time to be infinitely longer . Just leave a self increasing ID Just make the primary key , If your data has a primary key ( Absolutely unique ), Just use this primary key , There is no need to increase ID 了 . When the import is complete , We are adding indexes .

The second note : Storage engine

Mysql Generally, it is recommended to use InnoDB, But here we have no business needs , Therefore, a more efficient query MyISAM As a storage engine . It should be noted that MyISAM There is no business , Just insert , There is no saying of rollback . It should be noted that the table is locked when writing 、 So when writing , Don't query the data of the table at the same time .

The third note : disk space

Be sure to leave enough space for your disk before inserting . It should be noted that there are large transactions in the import , exceed binlog_cache_size, High and send to generate a large number of temporary files ( Links to articles ), If we do not specify a temporary file directory , So if you are windows System , Your temporary file directory is probably in C disc . have access to show variables like "tmpdir"; Command to see where your own temporary directory is . If there is not enough space, an error will be reported :1598 - Binary logging not possible. Message: An error occurred during flush stage of the commit. 'binlog_error_action' is set to 'ABORT_SERVER'. Hence aborting the server. So it's best to specify the directory of temporary files tmpdir="D:/Program Files/db/mysql-8.0.20-winx64/tmp"

Data preparation

Okay 、 Start the import journey . Before importing, we need to prepare the corresponding data , Here, first post the table structure :

create table test_user_tab(

id bigint comment ' Primary key ',

now_date varchar(20) comment ' Insert time ',

tel varchar(11) comment ' Telephone ',

card_num varchar(18) comment ' ID number ',

sex int comment ' Gender :1 male ,0 Woman ',

mz varchar(20) comment ' Famous people ',

user_name varchar(20) comment ' full name ',

address_str varchar(50) comment ' Address ',

PRIMARY KEY (`id`)

) ENGINE=MyISAM DEFAULT CHARSET=utf8 comment ' User test form ';We can use code to generate corresponding data , Randomly generated phone 、 ID number 、 Gender 、 nation 、 full name 、 Address . Generate 300 million data at a time .

java Generate the data

Generate code warehouse : Test data generation : Generate test data

import com.hzw.code.util.IDCardUtil;

import com.hzw.code.util.RandomValue;

import com.hzw.code.util.WeightRandomMz;

import org.apache.commons.lang3.time.DateFormatUtils;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.nio.channels.FileChannel;

import java.util.Date;

public class Test {

public static void main(String[] args) {

int sex = 0;

String card = null;

int c = 1;

for(int k=1;k<=3000; k++) {

StringBuffer sbText = new StringBuffer();

for (int i = 1; i <= 100000; i++) {

card = IDCardUtil.generateID();

if (Integer.parseInt(card.substring(16, 17)) % 2 == 1) {

sex = 1;

} else {

sex = 0;

}

sbText.append(c++).append(",").

append(DateFormatUtils.format(new Date(), "yyyy-MM-dd HH:mm:ss")).

append(",").append(RandomValue.getTel()).

append(",").append(card).

append(",").append(sex).

append(",").append(WeightRandomMz.getMz()).

append(",").append(RandomValue.getChineseName()).

append(",").append(RandomValue.getRoad()).append("\n");

System.out.println(" The current number :"+c);

}

write("D:\\app\\ld\\users_data.txt", sbText.toString());

}

}

/**

* Append write file

* @param file File path

* @param text Appending the content of writing

*/

public static void write(String file,String text) {

FileOutputStream fos = null;

FileChannel channel = null;

try {

fos = new FileOutputStream(file,true);

channel = fos.getChannel();

byte[] array = text.getBytes();

ByteBuffer buffer = ByteBuffer.wrap(array);

channel.write(buffer);

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

try {

channel.close();

fos.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}Import data

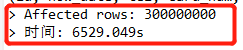

According to the above java Code , We got 3 100 million data , pure txt File size is :27.7GB. At this time, you can start importing . The table structure has been posted on it , Import according to the above structure .

load data local infile 'D:/app/ld/users_data.txt' into table test_user_tab

CHARACTER SET utf8 -- Optional , Avoid the problem of Chinese random code

FIELDS TERMINATED BY ',' -- Field separator , Each field ( Column ) What characters are used to separate , The default is \t

OPTIONALLY ENCLOSED BY '' -- Text qualifier , What characters are enclosed in each field , The default is null

ESCAPED BY '\\' -- Escape character , The default is \

LINES TERMINATED BY '\n' -- Record separator , For example, the field itself also contains \n, Then you should remove , otherwise load data It will be mistakenly imported as another line of record

(id, now_date, tel, card_num, sex,mz,user_name,address_str) -- The table fields corresponding to each line of text in order , It is recommended not to omit After executing the above code, an error may be reported Loading local data is disabled; this must be enabled on both the, You need to enable global local file settings

set global local_infile=on;So this edition needs to wait

After import 、 The size of the table :

Index length (4.03GB)、 Data length (29.13GB)、 Maximum data length (256TB)

Notebook configuration :

Memory :16G

CPU:AMD RYZEN 5 3500U 4 nucleus

Solid state disk :500G

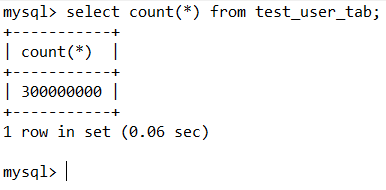

SQL test :Count Why can the whole watch be spicy so fast 、 Because MyISAM The engine will store the number of data entries of the whole table ,Count The whole table will be stored directly count Get it .

mysql> select * from test_user_tab

order by id desc limit 0,10;

+-----------+---------------------+-------------+--------------------+-----+--------+-----------+----------------------+

| id | now_date | tel | card_num | sex | mz | user_name | address_str |

+-----------+---------------------+-------------+--------------------+-----+--------+-----------+----------------------+

| 300000000 | 2021-06-30 10:28:34 | 13501545216 | 410101196604012797 | 1 | dulong | Huqidong | Market 2 building 15 Number -15-8 |

| 299999999 | 2021-06-30 10:28:34 | 13203729048 | 140101201101183795 | 1 | Bouyei | Lu Cheng | Minjiang second square 50 Number -1-6 |

| 299999998 | 2021-06-30 10:28:34 | 13107976886 | 220101201409017312 | 1 | Bouyei | Xu Yan | Zhangping road 65 Number -11-5 |

| 299999997 | 2021-06-30 10:28:34 | 13405130128 | 530101198901051473 | 1 | Maonan | Gong guohan | Dagang Weisi Street 122 Number -5-10 |

| 299999996 | 2021-06-30 10:28:34 | 13500788582 | 310101195005203737 | 1 | han | Guo Fang | Macau Plaza 118 Number -16-1 |

| 299999995 | 2021-06-30 10:28:34 | 13500938547 | 540101200801079200 | 0 | Naxi | Gongyun | Weixian square 46 Number -6-7 |

| 299999994 | 2021-06-30 10:28:34 | 15301675632 | 810101195409066773 | 1 | Tatar | Che Qian | Fukuyama building 13 Number -15-5 |

| 299999993 | 2021-06-30 10:28:34 | 15502277286 | 120101200506094119 | 1 | Maonan | Xuan Yanguang | Xiaogang Second Street 111 Number -17-2 |

| 299999992 | 2021-06-30 10:28:34 | 15307933706 | 620101199804149598 | 1 | han | Su Qi | Wusong Road 101 Number -20-4 |

| 299999991 | 2021-06-30 10:28:34 | 13808373641 | 320101199212197480 | 0 | hidden | Fan Jihua | Wuding Road 142 Number -9-5 |

+-----------+---------------------+-------------+--------------------+-----+--------+-----------+----------------------+

10 rows in set (0.19 sec)mysql> select * from test_user_tab where id in (569866,5656,23565,44,6,6467,8979);

+--------+---------------------+-------------+--------------------+-----+------+-----------+---------------------+

| id | now_date | tel | card_num | sex | mz | user_name | address_str |

+--------+---------------------+-------------+--------------------+-----+------+-----------+---------------------+

| 6 | 2021-06-30 09:14:24 | 13704555009 | 110101199102178730 | 1 | return | Xu Liang | Xinchang Street 148 Number -1-2 |

| 44 | 2021-06-30 09:14:24 | 15101051329 | 430101200205272631 | 1 | Susu | Chu Huijia | Haiyang Road 76 Number -11-6 |

| 5656 | 2021-06-30 09:14:24 | 15604920061 | 440101201702236208 | 0 | han | Ru Yongtian | Wuxian 1st Street 119 Number -5-3 |

| 6467 | 2021-06-30 09:14:24 | 15505384583 | 520101196903158281 | 0 | North Korea | Yangguangsheng | Xinzhan road 133 Number -8-5 |

| 8979 | 2021-06-30 09:14:24 | 13007372278 | 110101202107103898 | 1 | full | Gong Yun | Huimin South Road 65 Number -9-6 |

| 23565 | 2021-06-30 09:14:24 | 13103003467 | 440101197306079977 | 1 | Dai nationality | Ge Fengting | Macao 4th Street 51 Number -17-9 |

| 569866 | 2021-06-30 09:14:33 | 15603042860 | 32010119830925634X | 0 | han | Rong juanlu | Dagang Weiyi road 52 Number -14-9 |

+--------+---------------------+-------------+--------------------+-----+------+-----------+---------------------+

7 rows in set (0.21 sec)Just use id As a condition , Because of the index , Pretty fast . Other fields are not so hot . Need to do where Add an index to the field of the condition .

边栏推荐

- spark常见问题

- RPM包管理—YUM在线管理-IP地址配置和网络YUM源

- 微信小程序入门教程学习笔记

- 微信小程序Cannot read property 'setData' of null错误

- BUUCTF闯关日记--[CISCN2019 华北赛区 Day2 Web1]Hack World

- 使用js写个3d banner

- Spark Learning sparksql

- Multithreading 03 -- synchronized and lock escalation

- Chapter 2: configure data sources, redis, security, swagger and other tools jar for the project

- mysql 连接查询在on中使用convert 导致扫描行数增长

猜你喜欢

随机推荐

Multithreading 03 -- synchronized and lock escalation

微信小程序入门教程学习笔记——UI篇之操作反馈

BUUCTF闯关日记--[MRCTF2020]你传你呢(超详解)

BUUCTF闯关日记04--[ACTF2020 新生赛]Include1

使用js写个3d banner

When the project goes online, the old data needs to be modified, and writing SQL is too troublesome. Look at the powerful function of Excel with simple SQL

Servlet

多线程05--ReentrantLock 原理

Desensitization of mobile phone, name and ID card information in the project in the log and response data

Set colSpan invalidation for TD of table

Write a 3D banner using JS

Style writing in next

[LTTng学习之旅]------core concepts 拾遗

Buuctf breakthrough diary -- [ciscn2019 North China division Day2 web1]hack world

MATLAB2017a环境下使用libsvm-3.23出现的问题与解决方案

Wechat applet cannot read property'setdata'of null error

Bash基本功能—通配符和其他特殊符号

xshell、CRT上使用vbscript更高效连接定位到服务器以及目录、数据库

脚本执行方式

Multithreading 06 -- countdownlatch, cyclicbarrier, semaphore

![[LTTng学习之旅]------Trace View初探](/img/72/d3e46a820796a48b458cd2d0a18f8f.png)

![[LTTng学习之旅]------Components of LTTng 解构](/img/67/640a4da10d20e56d8e97d472b8b1c8.png)